Enhancing Knowledge Absorption in LLMs with Pre-Instruction-Tuning

Introduction to Pre-Instruction-Tuning

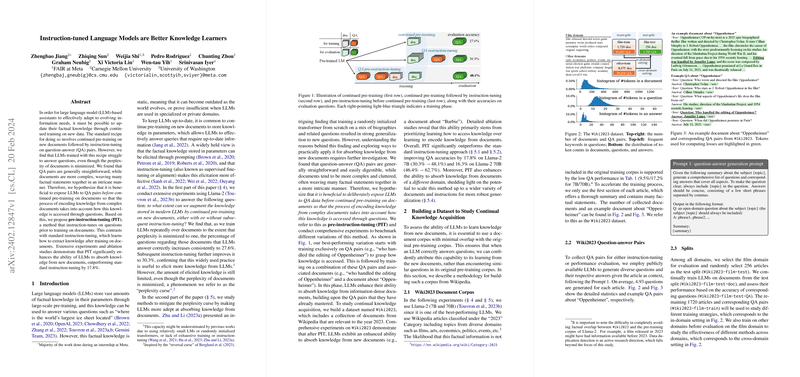

Large language models (LLMs) store vast amounts of factual knowledge in their parameters. Because that knowledge is fixed at training time, it can quickly become outdated or fall short of specialized needs. The conventional way to update an LLM is continued pre-training on new documents, followed by instruction-tuning on question-answer pairs. Despite this approach's popularity, our investigations show that it is limited in how effectively it updates the knowledge an LLM can retrieve. This paper introduces Pre-Instruction-Tuning (PIT), which reverses the conventional sequence by instruction-tuning LLMs on question-answer pairs before continued pre-training on documents. Our experiments with Llama-2 models show that PIT improves knowledge absorption, with significant gains over standard instruction-tuning.

Methodology and Experiments

We first evaluated how much knowledge LLMs gain from the standard practice of continued pre-training on documents followed by instruction-tuning. We ran extensive experiments with Llama-2 models on Wiki2023, a curated dataset of documents and associated question-answer pairs drawn from Wikipedia articles categorized under the year 2023. These experiments revealed a phenomenon we term the "perplexity curse": even when document perplexity is minimized, accuracy on questions about those documents increases only to a limited extent. The standard approach is therefore ineffective at substantially improving how well LLMs absorb knowledge.
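The two quantities contrasted above can be made concrete with a minimal sketch. It assumes perplexity is computed as the exponential of the mean negative log-likelihood per token, and it uses exact match as the QA metric; exact match is one common choice and is an assumption here, not a detail stated in this summary. The function names and all sample values are hypothetical.

```python
import math


def perplexity(token_logprobs):
    """Perplexity of a document: exp of the mean negative log-likelihood per token."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def exact_match_accuracy(predictions, references):
    """Fraction of predicted answers equal to the reference answer.

    Answers are compared after lowercasing and collapsing whitespace.
    Exact match is an assumed metric, used here only for illustration.
    """
    if not references or len(predictions) != len(references):
        raise ValueError("predictions and references must be non-empty and equal in length")

    def normalize(text):
        return " ".join(text.lower().split())

    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


# Hypothetical numbers: the model assigns high probability to every document
# token, yet still answers only one of three questions correctly.
doc_logprobs = [math.log(0.9)] * 50
predicted = ["Paris", "1998", "unknown"]
gold = ["paris", "2001", "Marie Curie"]

print(f"document perplexity: {perplexity(doc_logprobs):.3f}")
print(f"QA exact match:      {exact_match_accuracy(predicted, gold):.3f}")
```

The two metrics are computed from different model outputs (token probabilities on documents versus generated answers to questions), which is why a low value of the first does not guarantee a high value of the second.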

To address these limitations, we propose PIT. Our hypothesis is that exposing LLMs to how knowledge is accessed (through questions) before they learn to encode new information from documents orients them toward more effective knowledge acquisition. We experimented with different training sequences, placing question-answer pairs before their associated documents and vice versa, to determine the better ordering. Training on question-answer pairs first showed a clear advantage, which forms the foundation of the PIT approach.
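The difference between the two orderings can be sketched as a schedule of training phases. This is an illustration of the ordering as described in this summary only, not the authors' training code: the `Phase` type, the `build_schedule` function, the strategy names, and the sample data are all hypothetical, and the full recipe may include stages not covered here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    """One training stage: a label plus the text examples it trains on."""
    name: str
    examples: tuple


def build_schedule(qa_pairs, documents, strategy):
    """Return the ordered training phases for a given strategy.

    "standard": documents first, then question-answer pairs.
    "pit":      question-answer pairs first, then documents.
    Both strategy names are hypothetical labels for this sketch.
    """
    qa_phase = Phase(
        "instruction_tuning_on_qa",
        tuple(f"Question: {q}\nAnswer: {a}" for q, a in qa_pairs),
    )
    doc_phase = Phase("pretraining_on_documents", tuple(documents))

    if strategy == "standard":
        return [doc_phase, qa_phase]
    if strategy == "pit":
        return [qa_phase, doc_phase]
    raise ValueError(f"unknown strategy: {strategy!r}")


# Hypothetical placeholder data.
qa_pairs = [("Who directed the film?", "Jane Doe")]
documents = ["The film, released in 2023, was directed by Jane Doe."]

for strategy in ("standard", "pit"):
    order = " -> ".join(phase.name for phase in build_schedule(qa_pairs, documents, strategy))
    print(f"{strategy}: {order}")
```

Keeping the phases as data makes it easy to compare orderings under otherwise identical conditions, since the same examples are used and only their sequence changes.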

Results and Implications

Our evaluation shows that PIT significantly outperforms standard instruction-tuning at enabling LLMs to absorb and retrieve knowledge from new documents. Specifically, models trained with PIT achieved a 17.8% improvement in QA accuracy over counterparts trained with standard instruction-tuning. PIT also generalized promisingly across document domains, suggesting that it may apply to a wide range of knowledge absorption and retrieval tasks.

Future Prospects and Limitations

These results highlight PIT's potential as a method for continual learning and knowledge updating in LLMs. Future work could extend beyond Wikipedia-based datasets to more varied data sources, testing how well PIT applies when updating LLMs across diverse information domains. The current study has limitations: the dataset was built only from Wikipedia articles, and the goal was specifically to improve factual knowledge retrieval, so the gains may not translate directly to skills such as reasoning or comprehension.

Acknowledgements and Concluding Remarks

The contributions of various researchers and the feedback received throughout the investigation were invaluable in shaping this paper. In conclusion, Pre-Instruction-Tuning is a compelling strategy for improving how LLMs learn knowledge from new documents, and a step forward for methods that keep model knowledge up to date.