Comprehensive Evaluation of LLMs in Finance Using the FinBen Benchmark

Introduction to FinBen

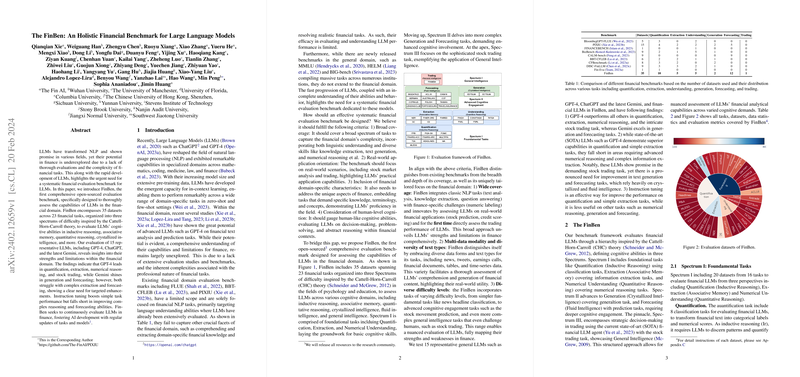

The finance industry stands on the cusp of a transformation, driven by advances in Large Language Models (LLMs) that promise to enhance financial analytics, forecasting, and decision-making. Although LLMs have made notable strides across many domains, their potential in finance remains relatively unexplored, owing to the intricate nature of financial tasks and the scarcity of comprehensive evaluation frameworks. To address this gap, the paper introduces FinBen, a benchmark designed to systematically assess LLMs' proficiency in the financial domain. Inspired by the Cattell-Horn-Carroll (CHC) theory of cognitive abilities, FinBen organizes a wide array of financial tasks into three spectra of increasing difficulty. This structure enables a holistic evaluation that sheds light on both the capabilities and the limitations of LLMs in financial applications.

Benchmark Design and Evaluation Framework

FinBen adds to the landscape of financial benchmarks an open-source evaluation tool tailored to the financial sector's specific requirements. It comprises 35 datasets spanning 23 financial tasks, filling gaps left by existing benchmarks.
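
The nesting of datasets under tasks, and of tasks under the three difficulty spectra, can be pictured as a small registry. The Python sketch below is illustrative only: `REGISTRY`, `spectrum_of`, and `mean_by_spectrum` are hypothetical names, the registry lists just the datasets mentioned in this article, and averaging scores within a spectrum assumes they have already been normalized to a common scale, which the underlying metrics (F1, MCC, Sharpe ratio, and so on) are not by default.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical, partial registry: spectrum -> task -> datasets.
# Only datasets named in this article appear; the full benchmark has 35.
REGISTRY: dict[str, dict[str, list[str]]] = {
    "Spectrum I": {"sentiment_analysis": ["FPB", "FiQA-SA", "TSA"]},
    "Spectrum II": {
        "summarization": ["ECTSUM"],
        "stock_movement_prediction": ["BigData22"],
    },
    "Spectrum III": {"stock_trading": []},  # dataset names omitted in this sketch
}


def spectrum_of(dataset: str) -> str:
    """Return the spectrum a dataset belongs to, or raise KeyError."""
    for spectrum, tasks in REGISTRY.items():
        for datasets in tasks.values():
            if dataset in datasets:
                return spectrum
    raise KeyError(f"dataset not in registry: {dataset}")


def mean_by_spectrum(scores: dict[str, float]) -> dict[str, float]:
    """Average per-dataset scores within each spectrum.

    Assumes every score is already normalized to a comparable scale,
    which is a simplification: real task metrics differ in range and meaning.
    """
    buckets: dict[str, list[float]] = defaultdict(list)
    for dataset, score in scores.items():
        buckets[spectrum_of(dataset)].append(score)
    return {spectrum: mean(values) for spectrum, values in buckets.items()}


# Dummy scores for illustration only; these are not results from the paper.
print(mean_by_spectrum({"FPB": 0.75, "FiQA-SA": 0.25, "ECTSUM": 0.125}))
# {'Spectrum I': 0.5, 'Spectrum II': 0.125}
```

A flat dictionary keeps the lookup trivial; a fuller harness would more likely attach a metric, prompt template, and data split to each dataset entry.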

Spectrum I: Foundational Tasks

- Quantification, Extraction, and Numerical Understanding tasks form the foundational spectrum, aiming to gauge basic cognitive skills such as inductive reasoning and associative memory.

- Datasets such as FPB, FiQA-SA, and TSA evaluate sentiment analysis, while other datasets in this spectrum cover tasks such as news headline classification.
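
Sentiment datasets such as FPB pose a classification problem, but an LLM answers in free text, so evaluation typically takes two steps: map each response to a label, then score the labels. The sketch below assumes a three-way positive/negative/neutral label set and uses macro-F1 as the score; the helper names and the naive keyword-matching parser are hypothetical, not FinBen's actual evaluation code.

```python
def extract_label(
    response: str, labels: tuple[str, ...] = ("positive", "negative", "neutral")
) -> str:
    """Map a free-text LLM answer to the earliest label it mentions."""
    text = response.lower()
    hits = [(text.find(label), label) for label in labels if label in text]
    return min(hits)[1] if hits else "unparsed"


def macro_f1(gold: list[str], pred: list[str]) -> float:
    """Unweighted mean of per-class F1, computed over the gold label set."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    per_class = []
    for label in sorted(set(gold)):
        tp = sum(g == label and p == label for g, p in zip(gold, pred))
        fp = sum(g != label and p == label for g, p in zip(gold, pred))
        fn = sum(g == label and p != label for g, p in zip(gold, pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        per_class.append(2 * precision * recall / denom if denom else 0.0)
    return sum(per_class) / len(per_class)


# Toy example with made-up model responses.
responses = ["Positive.", "The sentiment is neutral.", "neutral", "Negative"]
gold = ["positive", "negative", "neutral", "positive"]
pred = [extract_label(r) for r in responses]
print(pred)  # ['positive', 'neutral', 'neutral', 'negative']
print(round(macro_f1(gold, pred), 4))  # 0.4444
```

Because the class set comes from the gold labels, an unparseable answer simply counts as a miss for the true class. The keyword parser is deliberately simple and would mislabel a response such as "not positive", so a stricter harness would constrain the output format or parse more carefully.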

Spectrum II: Advanced Cognitive Engagement

- Generation and Forecasting tasks, demanding higher cognitive skills like crystallized and fluid intelligence, constitute the second tier.

- Datasets such as ECTSUM and BigData22 challenge LLMs to summarize earnings call transcripts coherently and to predict stock price movements, respectively.
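
Stock movement prediction datasets such as BigData22 are usually framed as a binary rise/fall classification. Accuracy alone can flatter a model on imbalanced data, so the Matthews correlation coefficient (MCC) is a common companion metric. The sketch below assumes labels encoded as 1 (rise) and 0 (fall); the function names are hypothetical, and whether FinBen reports exactly these two metrics for every forecasting dataset is an assumption.

```python
import math


def accuracy(gold: list[int], pred: list[int]) -> float:
    """Fraction of predictions that match the gold labels."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def mcc(gold: list[int], pred: list[int]) -> float:
    """Matthews correlation coefficient for binary labels (1 = rise, 0 = fall)."""
    tp = sum(g == 1 and p == 1 for g, p in zip(gold, pred))
    tn = sum(g == 0 and p == 0 for g, p in zip(gold, pred))
    fp = sum(g == 0 and p == 1 for g, p in zip(gold, pred))
    fn = sum(g == 1 and p == 0 for g, p in zip(gold, pred))
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # Convention: return 0 when a marginal is empty (e.g. constant predictions).
    return (tp * tn - fp * fn) / denom if denom else 0.0


# A model that always predicts "rise" on a toy, rise-heavy sample.
gold = [1, 1, 1, 0]
always_up = [1, 1, 1, 1]
print(accuracy(gold, always_up), mcc(gold, always_up))  # 0.75 0.0
```

The example shows why MCC matters: a constant "always rise" predictor reaches 75% accuracy on this toy sample yet scores an MCC of 0, no better than chance.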

Spectrum III: General Intelligence

- At the apex, the stock trading task serves as the ultimate test of an LLM's general intelligence, requiring strategic decision-making in a realistic application setting.
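
Unlike the classification tasks, a trading agent is judged by the returns its decisions generate. The sketch below computes three standard portfolio statistics from a series of daily simple returns: cumulative return, annualized Sharpe ratio, and maximum drawdown. It assumes the LLM's buy/sell/hold decisions have already been converted into returns, a zero risk-free rate by default, and 252 trading days per year; these are common conventions rather than confirmed FinBen settings, and the function names are hypothetical.

```python
import math
from statistics import mean, stdev


def cumulative_return(daily_returns: list[float]) -> float:
    """Total compounded return over the whole period."""
    return math.prod(1 + r for r in daily_returns) - 1


def sharpe_ratio(
    daily_returns: list[float],
    risk_free_daily: float = 0.0,
    periods_per_year: int = 252,
) -> float:
    """Annualized Sharpe ratio from daily simple returns (needs >= 2 points)."""
    excess = [r - risk_free_daily for r in daily_returns]
    volatility = stdev(excess)  # sample standard deviation
    if volatility == 0:
        return math.nan  # undefined when returns never vary
    return mean(excess) / volatility * math.sqrt(periods_per_year)


def max_drawdown(daily_returns: list[float]) -> float:
    """Largest peak-to-trough decline of the equity curve, as a negative fraction."""
    equity = peak = 1.0
    worst = 0.0
    for r in daily_returns:
        equity *= 1 + r
        peak = max(peak, equity)
        worst = min(worst, equity / peak - 1)
    return worst


returns = [0.1, -0.5, 0.2]  # dummy daily returns, not data from the paper
print(round(cumulative_return(returns), 4), round(max_drawdown(returns), 4))  # -0.34 -0.5
```

The Sharpe ratio needs at least two observations (`statistics.stdev` raises `StatisticsError` otherwise) and is returned as NaN when returns never vary.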

Key Findings and Insights

Evaluating 15 representative LLMs, including GPT-4, ChatGPT, and Gemini, on FinBen yields several insights:

- GPT-4 excels at foundational tasks such as quantification and numerical understanding, but leaves room for improvement on more complex extraction tasks.

- Gemini performs strongly on generation and forecasting tasks, which make up the more demanding second spectrum.

- Instruction tuning proves effective, yielding significant performance gains on simpler tasks, though it does less to improve complex reasoning and forecasting.

These findings reveal both the nuanced capabilities of LLMs in the financial domain and the areas where they still fall short, highlighting the need for continued development and refinement.

Implications and Future Directions

The creation and deployment of FinBen represent a significant step toward understanding and harnessing the capabilities of LLMs in finance. As a comprehensive evaluation tool, FinBen helps identify the strengths, weaknesses, and development opportunities of LLMs in financial applications.

Looking ahead, the authors envision expanding FinBen to additional languages and a wider array of financial tasks. This effort aims not only to extend the benchmark's utility and applicability but also to stimulate further advances in financial LLMs. Fully realizing the potential of LLMs in finance remains complex and challenging, but FinBen lays a foundation for more intelligent, efficient, and robust financial analysis tools and methodologies.

Concluding Remarks

In a rapidly evolving landscape where finance intersects with cutting-edge AI, benchmarks like FinBen play a pivotal role in advancing both our understanding and our capabilities. This framework not only supports the assessment of LLMs in financial contexts but also paves the way for future innovation, fostering symbiotic growth between finance and AI. As the frontiers of AI in finance continue to expand, benchmarks such as FinBen will remain indispensable for unlocking the full potential of LLMs and building more informed, efficient, and innovative financial ecosystems.