Enhancing Robustness to Instruction Variations in LLMs with Contrastive Instruction Tuning (Coin)

Introduction

Large language models (LLMs) have made significant strides in understanding and executing diverse human instructions through instruction tuning. However, their real-world use is hindered by their sensitivity to slight variations in instruction phrasing or style, which often leads to inconsistent outputs. To address this challenge, we present Contrastive Instruction Tuning (Coin), an approach that improves LLMs' accuracy and robustness across different phrasings of the same instruction.

The Core of Coin

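Coin makes LLMs more consistent and robust under variations in textual instructions. It applies contrastive learning, which maximizes the similarity between the hidden representations of semantically equivalent instruction-instance pairs and minimizes the similarity between semantically different ones. It builds these training pairs in two ways: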

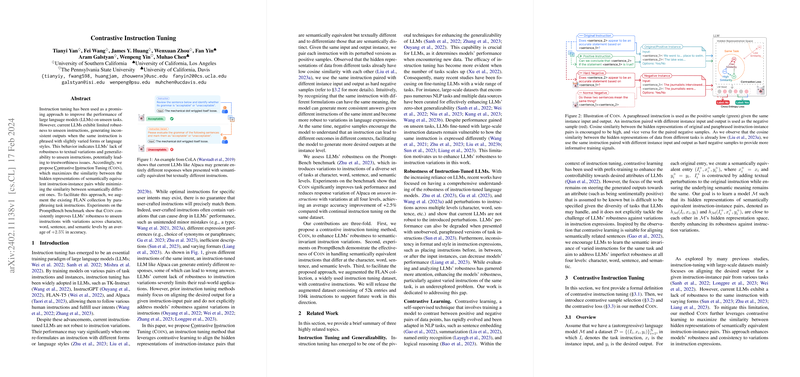

- Positive Sample Augmentation: By paraphrasing task instructions, Coin creates positive instruction-instance pairs that, although textually different, are semantically equivalent.

- Hard Negative Sampling: Coin constructs hard negative samples by pairing the same instruction with a different instance's input and output. These negatives share the anchor's instruction text, so they provide a stronger learning signal and push the model to distinguish finer semantic differences.
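The two sampling strategies above can be sketched as follows. This is a minimal, framework-free illustration and not Coin's released code. It assumes that each example is an instruction plus one input/output instance, and that the model maps each pair to a vector. `Example`, `build_contrastive_sample`, `info_nce`, the temperature of 0.05, and the toy vectors are all hypothetical. The loss is a standard InfoNCE objective that pulls the anchor toward its paraphrased positive and away from the hard negatives. The paper's exact formulation may differ, for example in whether it also uses in-batch negatives or in how it pools hidden states.

```python
# Hypothetical sketch of Coin-style contrastive samples and an InfoNCE loss.
# Names, the temperature, and the toy vectors are illustrative assumptions,
# not the authors' implementation.
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """An instruction-instance pair: a task instruction plus one input/output instance."""
    instruction: str
    input_text: str
    output_text: str


def build_contrastive_sample(example, paraphrase, pool):
    """Return (anchor, positive, hard_negatives) for one training example.

    positive:       same instance, paraphrased (semantically equivalent) instruction.
    hard_negatives: same instruction, paired with other instances' inputs and outputs.
    """
    positive = Example(paraphrase, example.input_text, example.output_text)
    hard_negatives = [
        Example(example.instruction, other.input_text, other.output_text)
        for other in pool
        # Skip the anchor's own instance; it would not be a negative.
        if (other.input_text, other.output_text)
        != (example.input_text, example.output_text)
    ]
    return example, positive, hard_negatives


def cosine(u, v):
    """Cosine similarity between two non-zero, equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(anchor, positive, negatives, temperature=0.05):
    """InfoNCE: negative log-softmax score of the positive among all candidates.

    Vectors stand in for the model's representations of each
    instruction-instance pair (how they are pooled is an assumption).
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Numerically stable log-sum-exp over positive + negatives.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_denominator - logits[0]


if __name__ == "__main__":
    ex = Example("Is this sentence grammatical?", "She go home.", "no")
    other = Example("Is this sentence grammatical?", "She goes home.", "yes")
    anchor, pos, negs = build_contrastive_sample(
        ex, "Check whether the sentence is grammatically correct.", [ex, other]
    )
    print(pos)   # paraphrased instruction, original instance
    print(negs)  # original instruction, the other instance
    # Toy 2-d vectors in place of real hidden states:
    print(info_nce([1.0, 0.0], [0.9, 0.1], [[0.0, 1.0]]))  # near 0: positive is closest
    print(info_nce([1.0, 0.0], [0.0, 1.0], [[0.9, 0.1]]))  # large: hard negative is closer
```

Because the loss is a negative log-softmax, it approaches zero only when the anchor is much more similar to its paraphrased positive than to every hard negative. This is the behavior the two sampling strategies aim to induce.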

Key Contributions and Findings

Evaluating Coin yields several findings about the robustness of instruction-tuned LLMs. The main contributions and findings are:

- Improved Robustness: Coin consistently improves LLMs' robustness to unseen instructions with variations at the character, word, sentence, and semantic levels, raising accuracy by an average of +2.5% on PromptBench.

- Augmented Instruction Dataset: The authors extend the FLAN collection with paraphrased instructions, contributing 52k entries and 104k instructions to support future research on instruction robustness.

- Efficacy Across Tasks: The gains are especially large on tasks such as paraphrase identification and grammar correctness, which indicates that Coin makes the model more sensitive to semantic nuances.

Empirical Insights

Through extensive experiments, several empirical insights have emerged:

- Alignment of Hidden Representations: UMAP visualizations of hidden representations show that Coin places semantically equivalent instructions closer together, which reduces the effect of surface-level textual variation.

- Significant Gains on Specific Tasks: Coin notably improves performance on tasks that benefit from refined semantic understanding, such as paraphrase identification and grammar correctness, by +5.4% and +6.3%, respectively.

- Optimized Loss Weighting: In the paper's experiments, a contrastive loss weight of λ = 1,000 gave the best balance between performance and robustness. At this weight, the contrastive term neither dominates the total loss nor becomes negligible within it.
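The phrase "total loss" suggests the objective is a weighted sum of the standard generation (cross-entropy) loss and the contrastive term. Assuming that form, the weighting can be written as below. The subscripts are illustrative notation and not necessarily the paper's:

```latex
\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{gen}} + \lambda \, \mathcal{L}_{\text{ctr}}, \qquad \lambda = 1000
```

Under this form, λ sets how much the contrastive term contributes to each update. If the weight is too small, the generation loss swamps the contrastive term; if it is too large, the contrastive term overrides the generation objective.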

Future Directions and Limitations

While Coin is a significant step forward, the authors acknowledge several limitations that point to avenues for future research. First, Coin relies on paraphrasing to create positive samples, and it could be extended to a broader range of semantics-preserving augmentation methods. Second, evaluating more instruction-tuned models, datasets, and downstream tasks would further validate Coin's effectiveness. Finally, exploring alternative evaluation metrics and conditions could give a more complete picture of Coin's impact.

Conclusion

Contrastive Instruction Tuning (Coin) is a notable step toward making LLMs more robust and reliable when they interpret and execute varied human instructions. Using contrastive learning, Coin improves model performance across a range of tasks. It also contributes a paraphrase-augmented instruction dataset and empirical insights to the field. As LLMs become central to AI-based applications, methods like Coin that improve robustness and consistency will be crucial to realizing their potential in real-world scenarios.