Speculative Streaming: Enhancing LLM Inference Efficiency through Single-Model Speculative Decoding

Introduction

The computational demands of large language models (LLMs) pose significant challenges for deployment, particularly when latency requirements are stringent. Traditional speculative decoding accelerates LLM inference with a two-model system: a draft model proposes future tokens and the target model verifies them. This speeds up generation, but it increases deployment complexity and resource requirements. This paper introduces Speculative Streaming, a novel single-model approach to speculative decoding that embeds drafting and verification within the target model itself. It removes the need for an auxiliary draft model and significantly reduces parameter overhead.
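
To make the two-model baseline concrete, here is a minimal sketch of greedy speculative decoding in Python. It is a toy and not the paper's code. `target_next` and `draft_next` are hypothetical stand-ins for real models, tokens are single characters, and verification uses greedy matching rather than the rejection-sampling rule used when decoding with sampling. The draft model is called `gamma` times per step, then the target checks all drafted positions in one (simulated) pass.

```python
TARGET = "speculative decoding "

def target_next(prefix):
    # Hypothetical toy target model: its greedy continuation is a fixed cyclic string.
    return TARGET[len(prefix) % len(TARGET)]

def draft_next(prefix):
    # Hypothetical toy draft model: agrees with the target except at every 5th position.
    pos = len(prefix)
    return "#" if pos % 5 == 0 else TARGET[pos % len(TARGET)]

def two_model_decode(prompt, max_new, gamma=3):
    tokens = list(prompt)
    target_passes = 0
    while len(tokens) - len(prompt) < max_new:
        # 1) The draft model proposes gamma tokens autoregressively.
        draft = []
        for _ in range(gamma):
            draft.append(draft_next(tokens + draft))
        # 2) The target predicts the next token at every draft position plus one extra
        #    position. A real target does this in one batched pass; here it is simulated.
        target_preds = [target_next(tokens + draft[:i]) for i in range(gamma + 1)]
        target_passes += 1
        # 3) Accept the longest draft prefix matching the target, then append the
        #    target's own token at the first mismatch (or after the full draft).
        n_acc = 0
        while n_acc < gamma and draft[n_acc] == target_preds[n_acc]:
            n_acc += 1
        tokens += draft[:n_acc] + [target_preds[n_acc]]
    return "".join(tokens[len(prompt):len(prompt) + max_new]), target_passes

out, passes = two_model_decode("spec", max_new=16)
print(out, passes)
```

Because only tokens the target would have produced itself are kept, the output matches plain greedy decoding with the target. The saving comes from needing fewer target passes than output tokens. The price is a second model that must be stored and run, which is the overhead Speculative Streaming sets out to remove.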

Motivation

LLM inference is often memory-bound: each decoding step is limited by memory bandwidth rather than by compute, so verifying several drafted tokens in one target pass costs little more than generating a single token. Conventional speculative decoding exploits this, but it requires a separate draft model alongside the target. This adds deployment complexity, and the extra memory makes it impractical on resource-constrained devices. The motivation behind Speculative Streaming is to remove these limitations by integrating speculative decoding directly into the target model, streamlining the decoding process and making it more resource-efficient.

Methodology

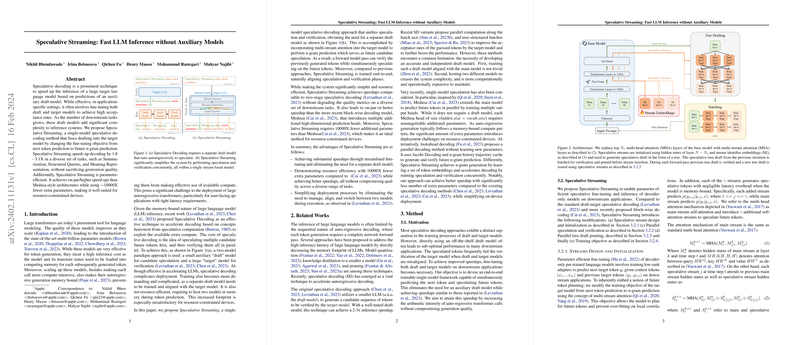

Speculative Streaming changes the fine-tuning objective of LLMs from next-token prediction to future n-gram prediction, using a mechanism called multi-stream attention. This allows a single forward pass to verify previously drafted tokens and speculate future tokens at the same time. The main adjustments and innovations are:

- Streams Design and Initialization: Multiple speculative streams are added and initialized at an upper layer of the transformer rather than at the input; each stream predicts the token at a different lookahead position.

- Parallel Speculation and Verification: Each forward pass verifies the tokens of the current speculative tree draft while generating the next draft, which significantly accelerates decoding.

- Tree Draft Pruning: A new pruning layer removes less probable paths from the speculative tree draft, reducing computational overhead without sacrificing prediction quality.
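
The switch from next-token to future n-gram prediction can be sketched as a combined loss: the main stream keeps its usual next-token cross-entropy, and speculative stream `j` is additionally trained to predict the token `j` positions further ahead. The plain-Python sketch below rests on stated assumptions and is not the paper's exact formulation: logits are given as lists, `stream_weights` is a hypothetical per-stream weighting, and each term is averaged over the positions where its target exists.

```python
import math

def log_softmax(logits):
    # Numerically stable log-softmax over a list of floats.
    m = max(logits)
    lse = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - lse for x in logits]

def nll(logits, target):
    # Negative log-likelihood of one target token id.
    return -log_softmax(logits)[target]

def future_ngram_loss(main_logits, stream_logits, tokens, stream_weights):
    """Next-token loss plus weighted future-token losses for one sequence.

    main_logits[t]        logits at position t, trained to predict tokens[t + 1]
    stream_logits[j-1][t] logits of speculative stream j at position t,
                          trained to predict tokens[t + 1 + j]
    stream_weights[j-1]   hypothetical weight for stream j (an assumption)
    """
    n = len(tokens)
    main_terms = [nll(main_logits[t], tokens[t + 1]) for t in range(n - 1)]
    loss = sum(main_terms) / len(main_terms)
    for j, weight in enumerate(stream_weights, start=1):
        # Only positions whose lookahead target exists in the sequence contribute.
        terms = [nll(stream_logits[j - 1][t], tokens[t + 1 + j]) for t in range(n - 1 - j)]
        if terms:
            loss += weight * sum(terms) / len(terms)
    return loss
```

With all-zero logits over a vocabulary of size V, every term equals log V, so two streams weighted 0.5 each give a total of 2 log V. Setting all stream weights to zero recovers the ordinary next-token objective.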
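
The parallel speculation and verification loop can be sketched with toy stand-ins as well. Here `main_stream`, `speculative_stream`, and `forward_pass` are hypothetical helpers that simulate one forward pass returning both the main stream's predictions (used to verify the current draft) and the speculative streams' guesses (used as the next draft). For brevity the draft is a single chain rather than a tree, and tree pruning is omitted.

```python
TARGET = "speculative streaming "

def main_stream(prefix):
    # Hypothetical toy main stream: its greedy next token follows a fixed cyclic string.
    return TARGET[len(prefix) % len(TARGET)]

def speculative_stream(prefix, j):
    # Hypothetical toy speculative stream j: guesses the token j positions beyond the
    # main stream's next token; deliberately wrong at every 5th absolute position.
    pos = len(prefix) + j
    return "#" if pos % 5 == 0 else TARGET[pos % len(TARGET)]

def forward_pass(prefix, draft, gamma):
    # Simulates one forward pass over prefix + draft. A real model computes all of
    # these in a single batched pass; the toy evaluates them position by position.
    main = [main_stream(prefix + draft[:i]) for i in range(len(draft) + 1)]
    spec = [[speculative_stream(prefix + draft[:i], j) for j in range(1, gamma + 1)]
            for i in range(len(draft) + 1)]
    return main, spec

def streaming_decode(prompt, max_new, gamma=3):
    tokens, draft, passes = list(prompt), [], 0
    while len(tokens) - len(prompt) < max_new:
        main, spec = forward_pass(tokens, draft, gamma)
        passes += 1
        # Verify: accept the longest draft prefix that matches the main stream,
        # then append the main stream's token at the first mismatch.
        n_acc = 0
        while n_acc < len(draft) and draft[n_acc] == main[n_acc]:
            n_acc += 1
        tokens += draft[:n_acc] + [main[n_acc]]
        # Speculate: the next draft is the streams' output at the last accepted
        # position, produced by this same pass, so no separate drafting step runs.
        draft = spec[n_acc]
    return "".join(tokens[len(prompt):len(prompt) + max_new]), passes

out, passes = streaming_decode("spec", max_new=16)
print(out, passes)
```

There is no separate draft model: each pass both checks the previous draft and emits the next one, so whenever speculation is partly correct the number of forward passes is smaller than the number of generated tokens, while the output still matches greedy decoding with the main stream.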

Experimental Results

The experiments evaluate Speculative Streaming on several tasks, including Summarization, Structured Queries, and Meaning Representation. Compared with both standard two-model speculative decoding and recent Medusa-style architectures, Speculative Streaming achieves larger decoding speed-ups (1.8 to 3.1X) without compromising generation quality. It also requires approximately 10000X fewer additional parameters than its Medusa counterpart, which makes it well suited to deployment on resource-constrained devices.

Implications and Future Directions

Speculative Streaming is a significant step toward simpler LLM inference deployment, because it folds speculation and verification into a single, efficient model. This makes LLMs more practical in latency-sensitive applications and leaves room for further efficiency improvements in generative AI models. Future research could combine Speculative Streaming with other model optimization techniques, such as quantization or pruning, to achieve even greater efficiency gains.

Conclusion

The introduction of Speculative Streaming offers a promising solution to the challenges of deploying large, autoregressive transformer models, particularly in latency-sensitive environments. By combining the processes of speculation and verification within a single model and significantly reducing parameter overhead, this method paves the way for more efficient and practical applications of LLMs across a wide range of domains.