ChemLLM: Bridging Chemistry and AI through Large Language Modeling

Introduction to ChemLLM

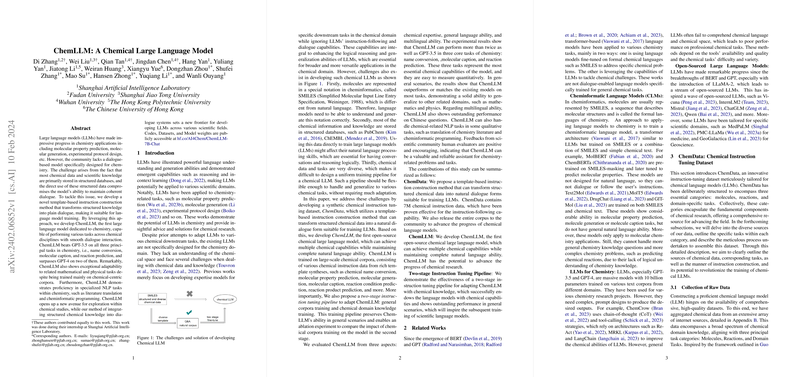

The rapid growth in the capabilities of large language models (LLMs) has paved the way for their application across a broad range of scientific disciplines. Chemistry is of particular interest, because LLMs can help there with tasks ranging from predicting molecular properties to assisting in experimental design. Despite this potential, adapting LLMs to chemistry has met specific hurdles, chiefly the field's specialized language and the structured data formats in which much of its knowledge is stored. "ChemLLM: A Chemical Large Language Model" addresses these challenges by introducing a dialogue-based model tailored to the chemical domain. What distinguishes the model is that it converts structured scientific knowledge into dialogue form, which makes that knowledge usable for training LLMs for chemistry applications.

ChemLLM and Its Unique Approach

ChemLLM's central idea is a method that translates structured chemical data into a dialogue-based format suitable for LLM training. This approach preserves the rich information contained in these datasets while making it accessible for LLM ingestion, which is essential for keeping the model's interactions coherent and meaningful for the nuanced needs of chemical research. To validate its effectiveness, ChemLLM was compared against other models, including GPT-3.5, on core chemistry tasks such as name conversion, molecular captioning, and reaction prediction, and it demonstrated superior performance on them.
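To make the idea of turning structured records into dialogue more concrete, here is a minimal Python sketch. It assumes a hypothetical record type with `name`, `smiles`, and `formula` fields and a small hand-written set of question templates; the field names, the templates, and the `record_to_dialogues` helper are illustrative only and are not taken from ChemLLM or its dataset.

```python
import random
from dataclasses import dataclass


@dataclass
class MoleculeRecord:
    # Hypothetical structured entry, e.g. one row of a chemical database.
    name: str
    smiles: str
    formula: str


# Hypothetical question templates. Each key is a (source field, target field)
# pair; several phrasings per pair vary the wording for the same fact.
TEMPLATES = {
    ("name", "smiles"): [
        "What is the SMILES string of {name}?",
        "Convert the name {name} into SMILES notation.",
    ],
    ("smiles", "formula"): [
        "What is the molecular formula of the molecule {smiles}?",
        "Give the molecular formula for SMILES {smiles}.",
    ],
}


def record_to_dialogues(record, rng):
    """Turn one structured record into single-turn user/assistant dialogues."""
    fields = {"name": record.name, "smiles": record.smiles, "formula": record.formula}
    dialogues = []
    for (_src, tgt), phrasings in TEMPLATES.items():
        # Pick one phrasing at random and fill in the record's values.
        question = rng.choice(phrasings).format(**fields)
        dialogues.append([
            {"role": "user", "content": question},
            {"role": "assistant", "content": fields[tgt]},
        ])
    return dialogues


rec = MoleculeRecord(name="ethanol", smiles="CCO", formula="C2H6O")
for dialogue in record_to_dialogues(rec, random.Random(0)):
    print(dialogue)
```

Keeping several phrasings per field pair and sampling among them is one simple way to avoid the model memorizing a single question form; the actual template design behind ChemLLM's data may differ.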

Experimental Results and Implications

ChemLLM's proficiency extends beyond its primary domain: it also adapts well to related mathematical and physical tasks. This suggests that its comprehension and generation abilities carry over to the broader language of the sciences, which matters for interdisciplinary research. ChemLLM can also handle specialized NLP tasks within chemistry, such as literature translation and cheminformatics programming, which points to its potential as a general tool for scientific inquiry and communication. When evaluated on ethics-related scenarios, ChemLLM's responses aligned with human values, suggesting that it is suited to responsible use.

Methodological Innovations and Contributions

The creation of ChemLLM rested on two methodological contributions. First, a two-stage instruction-tuning pipeline, which combines open-domain training with domain-specific knowledge, was central to adapting the model to chemical language. Second, the authors built ChemData, a curated chemical instruction-tuning dataset that converts structured chemical information into natural dialogue form, addressing the difficulty of training LLMs directly on scientific databases.
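The two-stage pipeline can be pictured as a data schedule. The sketch below assumes that stage 1 uses only open-domain instructions and that stage 2 uses chemistry instructions mixed with a replayed sample of open-domain data; the `two_stage_schedule` helper and its `replay_fraction` parameter are hypothetical and do not reflect the paper's actual mixing ratios. Model training itself is left out.

```python
import random


def two_stage_schedule(general_data, chem_data, replay_fraction=0.2, seed=0):
    """Split examples into two instruction-tuning stages (hypothetical helper).

    Stage 1: open-domain instructions only.
    Stage 2: chemistry instructions plus a replayed sample of open-domain data,
    sized so general examples make up about `replay_fraction` of stage 2.
    The default 0.2 is an illustrative assumption, not a value from the paper.
    """
    if not 0.0 <= replay_fraction < 1.0:
        raise ValueError("replay_fraction must be in [0, 1)")
    rng = random.Random(seed)

    stage1 = list(general_data)
    rng.shuffle(stage1)

    # Solve n / (len(chem_data) + n) = replay_fraction for the replay count n.
    n_replay = round(len(chem_data) * replay_fraction / (1.0 - replay_fraction))
    n_replay = min(n_replay, len(general_data))
    stage2 = list(chem_data) + rng.sample(list(general_data), n_replay)
    rng.shuffle(stage2)
    return stage1, stage2


general = [f"general-{i}" for i in range(100)]
chem = [f"chem-{i}" for i in range(80)]
stage1, stage2 = two_stage_schedule(general, chem)
print(len(stage1), len(stage2))  # 100 100
```

Replaying some general data during domain tuning is a common way to limit the loss of general ability; this sketch does not claim that ChemLLM uses this exact scheme.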

Future Directions

The success of ChemLLM opens several avenues for further work. One is extending the approach to a broader range of scientific disciplines, which may involve adapting its template-based instruction construction method to other specialized domains. Another is integrating ChemLLM with experimental platforms to provide real-time guidance and support decision-making in the laboratory. Such integration could accelerate experimental workflows and potentially yield novel insights through the model's predictive capabilities.

Conclusion

"ChemLLM: A Chemical Large Language Model" represents a significant advance in applying LLMs to chemistry. By addressing the challenges posed by chemical data and introducing targeted innovations in model training, ChemLLM sets a new benchmark for integrating AI into scientific research. With its demonstrated performance and versatility, ChemLLM not only strengthens computational approaches to chemistry but also offers a blueprint for applying LLMs across other scientific fields.