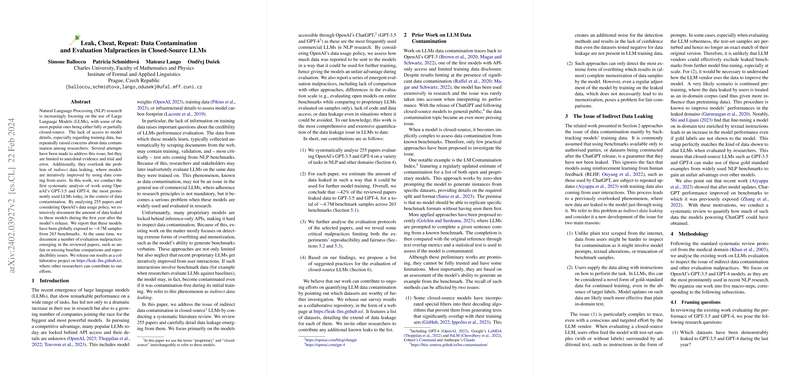

An Analysis of Data Contamination and Evaluation Practices in Closed-Source LLMs

The paper "Leak, Cheat, Repeat: Data Contamination and Evaluation Malpractices in Closed-Source LLMs" by Simone Balloccu et al. investigates data contamination and evaluation malpractices in research that uses closed-source LLMs, specifically OpenAI's GPT-3.5 and GPT-4. Because details about the training data of proprietary models are inaccessible, data contamination is hard to rule out. Contamination here means that a model is evaluated on benchmark data that was already present in its training corpus.

The authors systematically analyze 255 papers that use OpenAI's LLMs and report that ~42% of these studies potentially leaked over 4.7 million samples from 263 distinct benchmarks to the models through the web interface. The exposure stems from OpenAI's policy of using interaction data from its web interface for model updates, a policy that does not apply to data sent through the API. The leaked benchmark data may therefore have been used, unintentionally, to improve later model versions, which would make subsequent evaluations of their capabilities inflated or misleading.

Key Findings and Contributions

- Data Contamination Extent: The paper documents substantial data leakage, with most of the affected datasets exposed almost in their entirety. The leaked benchmarks cover popular tasks such as natural language inference, question answering, and natural language generation, so the problem spans many NLP domains.

- Evaluation Malpractices: The paper identifies recurring malpractices in model evaluation. Experimental conditions were not uniform across studies, and many papers lacked appropriate baselines or the detail needed to reproduce their results. A notable number of evaluations were run on differing subsets of datasets, which can skew performance comparisons against open models or other baselines.

- Assessment Protocols: By dissecting methodological inconsistencies, the paper suggests best practices that emphasize using APIs when engaging with closed-source LLMs, ensuring transparent and reproducible evaluation frameworks, and encouraging a fair comparison by re-evaluating all models on identical subsets.

- Implications and Future Prospects: The paper calls for concerted efforts to enhance LLM evaluation integrity by avoiding proprietary models when possible, making evaluations publicly verifiable, and recognizing data leakage scenarios. The paper posits that acknowledging and addressing these challenges is crucial to sustaining scientific rigor and advancing NLP research.

- Collaborative Platform: As a proactive step, the authors have released their findings and tools as an online repository, encouraging communal contribution to identify further instances of data contamination and refine best practices.
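
The recommendation to re-evaluate all models on identical subsets can be illustrated with a short Python sketch. It is not taken from the paper or its repository. The function names (`fixed_subset`, `accuracy`, `compare_models`), the toy dataset, and the stand-in "models" are all hypothetical, and accuracy is assumed as the metric purely for illustration. The idea is to draw one seeded subset and score every model on exactly those examples.

```python
import random
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str]          # (input text, gold label)
Predictor = Callable[[str], str]   # hypothetical model wrapper: text -> label


def fixed_subset(dataset: List[Example], k: int, seed: int = 0) -> List[Example]:
    """Draw a reproducible sample of k examples, kept in dataset order."""
    rng = random.Random(seed)  # local RNG, so the global random state is untouched
    indices = sorted(rng.sample(range(len(dataset)), k))
    return [dataset[i] for i in indices]


def accuracy(predict: Predictor, subset: List[Example]) -> float:
    """Fraction of examples whose prediction equals the gold label."""
    return sum(predict(text) == gold for text, gold in subset) / len(subset)


def compare_models(models: Dict[str, Predictor], dataset: List[Example],
                   k: int, seed: int = 0) -> Dict[str, float]:
    """Score every model on the same subset, which is drawn only once."""
    subset = fixed_subset(dataset, k, seed)
    return {name: accuracy(predict, subset) for name, predict in models.items()}


# Toy data and stand-in "models", for illustration only.
toy_dataset = [(str(i), "even" if i % 2 == 0 else "odd") for i in range(100)]
toy_models = {
    "always_even": lambda text: "even",
    "parity": lambda text: "even" if int(text) % 2 == 0 else "odd",
}
scores = compare_models(toy_models, toy_dataset, k=20, seed=0)
```

Reporting the seed and subset size alongside the results lets others rebuild the same subset. Publishing the selected indices or example IDs is likely more robust, since seeded sampling is not guaranteed to behave identically across library versions.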

Implications on AI Development and Evaluation

The findings show how the opacity of proprietary models obscures meaningful assessment of LLM capabilities, which bears on both the reliability and the ethics of AI research. The proposed best practices, if widely adopted, could make evaluations more transparent and comparable, and over time support more robust AI systems.

Looking forward, the authors suggest further research to quantify the impact of leaked test data on model performance and to extend the analysis to closed-source models beyond OpenAI's ecosystem. Such work will be important for understanding how AI models continue to evolve and for addressing the computational and ethical challenges that accompany them.

This examination contributes to the discourse on AI transparency, responsibility, and methodological consistency, all of which are needed for the sustained credibility and progress of AI technologies.