Overview of LitLLM Toolkit

The LitLLM toolkit targets two prominent problems that arise when LLMs are used for scientific literature review: factual inaccuracies, and the omission of recent studies that were not part of the LLMs' original training data. The toolkit uses Retrieval Augmented Generation (RAG) to ground the generated review in retrieved papers, which reduces the hallucinations commonly observed in LLM-generated text.

Enhancements in Literature Review Generation

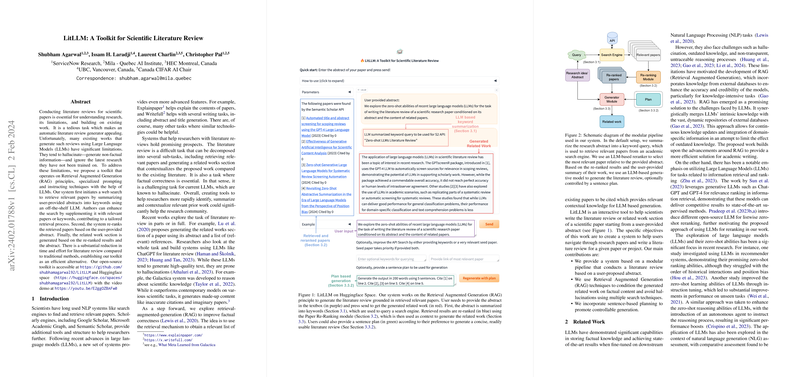

LitLLM is a multi-step modular pipeline that automatically generates related work sections for scientific papers. It first summarizes the user-provided abstract into keywords, which are then used in a web search to retrieve relevant papers. A re-ranking step then selects the retrieved documents that most closely align with the user's abstract, and a coherent related work section is generated from these refined results. The toolkit is open source and available on GitHub and as a Hugging Face Space, accompanied by an instructional video.
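The four stages described above can be sketched as a single function that chains pluggable steps. This is a minimal illustration and not LitLLM's actual code: the names `Paper`, `generate_related_work`, and `top_k`, along with the four callable parameters, are hypothetical and stand in for whatever LLM and search calls a real implementation would make.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Paper:
    """A retrieved paper (hypothetical container, not LitLLM's own type)."""
    title: str
    abstract: str


def generate_related_work(
    abstract: str,
    summarize_to_keywords: Callable[[str], str],
    search: Callable[[str], List[Paper]],
    rerank: Callable[[str, List[Paper]], List[Paper]],
    write_section: Callable[[str, List[Paper]], str],
    top_k: int = 5,
) -> str:
    """Chain the pipeline stages; every stage is injected by the caller."""
    # 1. Summarize the user's abstract into a keyword query.
    keywords = summarize_to_keywords(abstract)
    # 2. Retrieve candidate papers for that query.
    candidates = search(keywords)
    # 3. Order candidates by relevance to the abstract, keep the best few.
    ranked = rerank(abstract, candidates)[:top_k]
    # 4. Generate the related work section from the selected papers.
    return write_section(abstract, ranked)
```

Passing the stages in as callables mirrors the modular design: any one step, such as the search backend, can be swapped out without touching the others.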

Pipeline Design and Related Work

In more detail, the paper retrieval module uses the Semantic Scholar API to fetch documents. Users can supply additional keywords or reference papers to guide the search, which improves precision and relevance. The re-ranking module orders the retrieved documents by their relevance to the user-provided abstract. In the final stage, the summary generation module uses LLM-based strategies, specifically zero-shot and plan-based generation, to construct the literature review. Plan-based generation is especially noteworthy because it gives authors customizable control over the structure and content of the generated review.
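To make the difference between zero-shot and plan-based generation concrete, the sketch below builds the prompt for either strategy. The prompt wording, the function name `build_prompt`, and the shape of the `plan` argument (a mapping from sentence number to the references that sentence should cite) are all assumptions made for illustration; the toolkit's real prompts may differ.

```python
from typing import Dict, List, Optional


def build_prompt(
    abstract: str,
    papers: List[str],
    plan: Optional[Dict[int, List[int]]] = None,
) -> str:
    """Return a zero-shot prompt, or a plan-based one when `plan` is given.

    `plan` maps a 1-based sentence number to the reference numbers that
    sentence should cite (a hypothetical format, chosen for this sketch).
    """
    # Number the retrieved papers so the model can cite them as [1], [2], ...
    refs = "\n".join(f"[{i}] {text}" for i, text in enumerate(papers, start=1))
    prompt = (
        "Write a related work section for the paper below, citing the "
        "numbered references.\n\n"
        f"Abstract: {abstract}\n\nReferences:\n{refs}\n"
    )
    if plan:
        # Plan-based: append explicit per-sentence citation instructions.
        steps = "\n".join(
            f"- Sentence {n}: cite " + ", ".join(f"[{r}]" for r in cites)
            for n, cites in sorted(plan.items())
        )
        prompt += f"\nFollow this plan ({len(plan)} sentences):\n{steps}\n"
    return prompt
```

Calling `build_prompt(abstract, papers)` yields the zero-shot variant, while passing something like `plan={1: [1, 2], 2: [3]}` adds the author's structural constraints to the same base prompt.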

Concluding Thoughts

The toolkit is a step forward in applying LLMs to academic writing and research. By grounding generation in retrieved papers, LitLLM addresses the difficulty of producing factually accurate and up-to-date related work sections, which makes it a potential mainstay in researcher toolkits. Nonetheless, the authors advocate responsible usage and suggest that outputs be carefully reviewed to catch any residual factual inaccuracies. As future directions, they identify expansion to full-text analysis and the integration of multiple academic search APIs. These extensions aim to produce more nuanced and contextually rich literature reviews, further strengthening the toolkit's role as a research assistant.