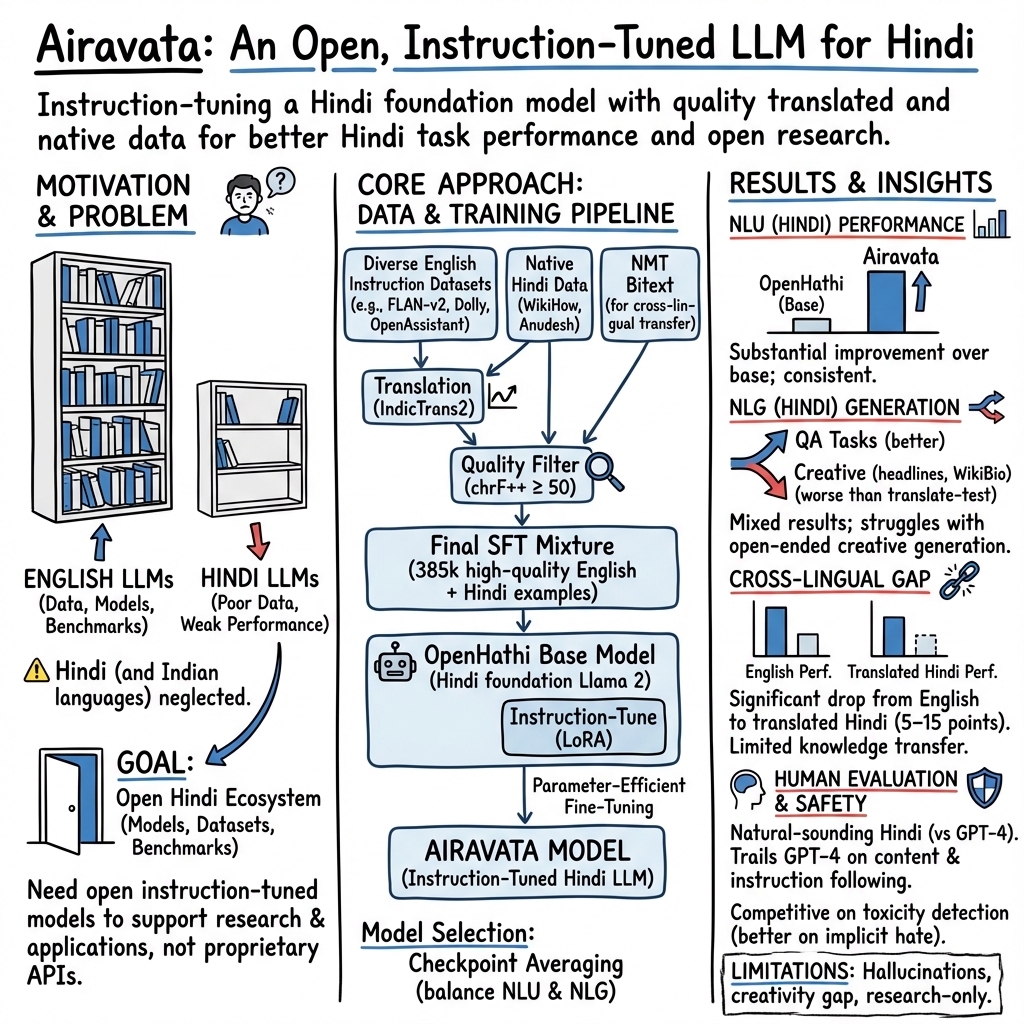

- The paper introduces Airavata, a Hindi instruction-tuned LLM that enhances performance on NLU tasks via tailored dataset creation and LoRA fine-tuning.

- It details a robust methodology that translates high-quality English datasets into Hindi and applies parameter-efficient supervised fine-tuning.

- Results show improved Hindi language understanding compared to previous models, suggesting promising approaches for better cross-lingual alignment and NLG performance.

Insightful Overview of Airavata: A Hindi Instruction-Tuned LLM

This paper presents Airavata, an instruction-tuned LLM developed specifically for Hindi. Produced at the Nilekani Centre at AI4Bharat with multiple collaborators, the work starts from the limited support that existing LLMs offer for Indian languages and addresses it for Hindi through instruction tuning.

Motivation and Background

The dominance of English in LLM development has left Indian languages underrepresented, leading to poor performance of models like GPT-4 and ChatGPT in non-English languages. Indian languages suffer from setbacks such as inadequate data representation and inefficient tokenization, which necessitate tailored models and datasets. Consequently, this research aims to bridge the performance gap by aligning Hindi LLMs through instruction tuning.

Methodology

Airavata builds on the OpenHathi model by instruction-tuning it with a diverse set of Hindi language datasets. The approach involves:

- Dataset Creation: A significant challenge was the lack of instruction tuning datasets in Hindi, which was addressed by translating high-quality English datasets with IndicTrans2, a machine translation model. After filtering on chrF++ scores to check translation quality, the resulting dataset comprised approximately 385,000 examples.

- Supervised Fine-tuning: The model was fine-tuned on the curated datasets using LoRA, a parameter-efficient tuning method. An ablation study contrasting full fine-tuning against LoRA found LoRA to be the more efficient and generalizable approach.

- Performance Evaluation: The model was evaluated on a range of NLU and NLG benchmarks. Because of resource constraints, English benchmarks machine-translated into Hindi served as proxies. The evaluation showed what the model can do and identified areas needing further work, particularly cross-lingual alignment.
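The translation quality check in the dataset creation step can be sketched as a score-and-threshold filter. The sketch below is illustrative only and rests on several assumptions not stated in this summary: that the score is computed between the original English text and a back-translation of the Hindi output, that the cutoff is 50, and that a simplified character n-gram F-score (`simple_chrf`, a hypothetical helper) stands in for full chrF++, which also includes word n-grams. The toy examples are hard-coded and do not come from IndicTrans2.

```python
from collections import Counter


def char_ngrams(text: str, n: int) -> Counter:
    """Count character n-grams after removing whitespace."""
    chars = "".join(text.split())
    return Counter(chars[i:i + n] for i in range(len(chars) - n + 1))


def simple_chrf(hypothesis: str, reference: str,
                max_n: int = 6, beta: float = 2.0) -> float:
    """Simplified character n-gram F-beta score on a 0-100 scale.

    This is a stand-in for chrF++, not a faithful reimplementation:
    it omits the word n-gram component that chrF++ adds.
    """
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp = char_ngrams(hypothesis, n)
        ref = char_ngrams(reference, n)
        if not hyp or not ref:
            continue  # text too short to form n-grams of this order
        overlap = sum((hyp & ref).values())  # clipped n-gram matches
        precisions.append(overlap / sum(hyp.values()))
        recalls.append(overlap / sum(ref.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    return 100.0 * (1 + beta ** 2) * p * r / (beta ** 2 * p + r)


def filter_by_round_trip(examples, threshold: float = 50.0):
    """Keep examples whose back-translation stays close to the source.

    The threshold value is an assumption made for this sketch.
    """
    kept = []
    for ex in examples:
        score = simple_chrf(ex["back_translation"], ex["english"])
        if score >= threshold:
            kept.append({**ex, "chrf": score})
    return kept


# Toy data: the Hindi text and back-translations are hard-coded placeholders.
examples = [
    {"english": "List three fruits.",
     "hindi": "तीन फलों की सूची बनाइए।",
     "back_translation": "List three fruits."},
    {"english": "List three fruits.",
     "hindi": "(placeholder for a poor translation)",
     "back_translation": "zzzz qqqq"},
]

kept = filter_by_round_trip(examples)
print(f"kept {len(kept)} of {len(examples)} examples")
```

In a real pipeline, an established chrF++ implementation would replace `simple_chrf`, and the threshold would be tuned by inspecting the examples that get discarded.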

Results and Implications

Airavata outperformed its precursor, the OpenHathi model, across a variety of NLU tasks, indicating that instruction tuning aligned the model with these tasks. Results on NLG tasks were mixed, however, which points to a need for further improvement, particularly in creative language generation. The performance gap between the Hindi and English variants of the same tasks highlights the need for better cross-lingual transfer mechanisms.

Furthermore, human evaluations showed that Airavata produces more natural Hindi output than models like BactrianX-llama-7B, though it still trails GPT-4 in content quality and instruction adherence. This evaluation suggests that there is scope to expand the instruction dataset for broader performance gains.

Future Directions

Potential future directions for this line of research include:

- Expanding Language Support: Extending the approach to other Indic languages by developing large, diverse pre-training datasets and refining existing models.

- Cross-lingual Enhancements: Improving English-to-Hindi alignment to leverage English's broader knowledge base for better knowledge transfer in non-English contexts.

- Model Robustness: Addressing model limitations such as hallucinations, and refining the understanding of cultural nuances to reduce content biases and inaccuracies.

By publicly releasing both the datasets and the model, Airavata sets the stage for further research in Indian language processing and promotes an ecosystem where open-source Hindi LLMs can flourish. This work represents a significant stride in addressing linguistic inequities in AI, paving the way for more inclusive and representative models.