Introduction

A newly proposed adaptive watermarking strategy for large language models (LLMs) addresses key challenges in watermarking AI-generated text. Existing methods struggle to combine strong security, robustness, and high text quality while still allowing the watermark to be detected without access to the generating model or the prompt. The new strategy applies the watermark only where the next-token distribution has high entropy and leaves low-entropy distributions unchanged, so tokens that are nearly determined by their context are not disturbed.
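A minimal Python sketch of the entropy test behind this idea follows. It assumes the next-token distribution is available as a list of probabilities and measures entropy in nats. The helper names `shannon_entropy` and `should_watermark` and the threshold value are illustrative assumptions, not details taken from the method itself.

```python
import math


def shannon_entropy(probs):
    """Shannon entropy (in nats) of a next-token probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def should_watermark(probs, threshold):
    """Watermark only when the distribution is high-entropy.

    `threshold` is a hypothetical tuning parameter. Low-entropy (near-deterministic)
    steps are left untouched so the obvious next token is preserved.
    """
    return shannon_entropy(probs) > threshold


# A peaked distribution (one token dominates) versus a flat one.
peaked = [0.97, 0.01, 0.01, 0.01]
flat = [0.25, 0.25, 0.25, 0.25]
print(should_watermark(peaked, threshold=1.0))  # False
print(should_watermark(flat, threshold=1.0))    # True
```

Raising the threshold watermarks fewer positions. This trades some detectability for less interference with the text.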

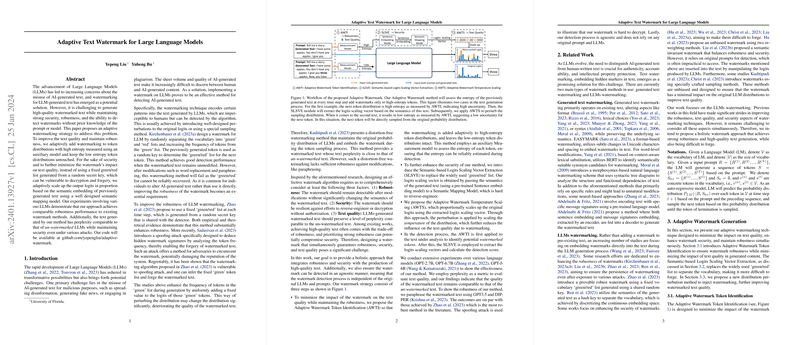

Methodology

Central to the method's robustness and text quality is Adaptive Watermark Token Identification (AWTI), which uses an auxiliary model to measure the entropy of each next-token distribution and applies the watermark only where that entropy is high. To strengthen security, Semantic-based Logits Scaling Vector Extraction (SLSVE) replaces the traditional fixed 'green/red' token list with logits scaling vectors derived from the semantics of the text. Because these vectors change with the content rather than following a fixed partition of the vocabulary, they are harder to reverse-engineer. Finally, Adaptive Watermark Temperature Scaling (AWTS) adaptively scales the output logits to reduce the watermark's impact on text quality.
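The following Python sketch shows how a semantically keyed scaling vector could replace a fixed green/red list, and where a temperature could enter. Every part of it is a hypothetical stand-in. The embedding is assumed to come from some auxiliary model and is passed in as a list of floats. The sign quantization plus keyed SHA-256 hash replaces whatever mapping SLSVE actually uses. `delta` and `temperature` are assumed parameters. How AWTS chooses the temperature adaptively is not described here, so the sketch takes it as an input.

```python
import hashlib
import math
import random


def scaling_vector(embedding, vocab_size, secret_key="secret"):
    """Hypothetical SLSVE stand-in: map a semantic embedding to a +/-1 vector.

    Only the sign pattern of the embedding is hashed, so embeddings that differ
    slightly but share signs receive the same vector. The secret key keeps the
    mapping private.
    """
    signs = "".join("1" if x >= 0 else "0" for x in embedding)
    digest = hashlib.sha256((secret_key + signs).encode()).hexdigest()
    rng = random.Random(int(digest, 16))  # deterministic given key and signs
    return [rng.choice((-1.0, 1.0)) for _ in range(vocab_size)]


def watermark_logits(logits, vector, delta, temperature):
    """Add delta * vector to the logits, then divide by an (assumed) temperature."""
    return [(l + delta * v) / temperature for l, v in zip(logits, vector)]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up embeddings of the preceding text and of a light paraphrase.
emb_a = [0.12, -0.40, 0.33, 0.05]
emb_b = [0.10, -0.35, 0.30, 0.07]
v_a = scaling_vector(emb_a, vocab_size=8)
v_b = scaling_vector(emb_b, vocab_size=8)
print(v_a == v_b)  # True: same sign pattern -> same vector

probs = softmax(watermark_logits([2.0, 1.0, 0.5, 0.1, 0.0, -1.0, -2.0, 0.3],
                                 v_a, delta=1.0, temperature=1.2))
```

Only the sign pattern of the embedding feeds the hash. Small shifts in the embedding, such as those a light paraphrase might cause, can therefore leave the vector unchanged. A different secret key yields an unrelated vector.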

Experiments

The approach was tested extensively on several LLMs, including OPT-2.7B, OPT-6.7B, and GPT-J-6B. Text quality was measured with perplexity, and robustness was evaluated empirically against paraphrase attacks. Text carrying the adaptive watermark had perplexity comparable to that of unwatermarked text, and the watermark remained secure. Under paraphrase attacks, the adaptive method outperformed existing watermarking methods, indicating that its watermark is robust.
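Perplexity is the exponential of the average negative log-likelihood per token. The Python sketch below computes it from per-token natural-log probabilities. These probabilities are assumed to come from some scoring model, since the summary does not say which model scored the texts. The function name `perplexity` is illustrative.

```python
import math


def perplexity(token_log_probs):
    """Perplexity from natural-log probabilities of each token given its prefix."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)


# Made-up per-token probabilities, for illustration only.
lp = [math.log(0.5), math.log(0.25), math.log(0.5)]
print(perplexity(lp))
```

Lower values mean the scoring model finds the text more predictable. "Comparable perplexity" therefore means the watermark did not make the text noticeably less fluent by this measure.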

Conclusion

The adaptive watermarking technique addresses three critical aspects of LLM watermarking: robustness, security, and text quality. By combining entropy-based adaptive watermarking, semantic logits scaling, and temperature scaling, the method maintains strong performance even under attacks that alter the watermarked text. Detection relies only on AWTI and SLSVE, so it requires neither the generating LLM nor the original prompt. Together, these components offer a unified approach to the main challenges in LLM watermarking today.
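The following hedged Python sketch shows model-independent detection. It reapplies the entropy filter using an auxiliary distribution, then averages the scaling-vector value at each token that actually appears. The tuple layout of `steps`, the mean-score statistic, and the helper names are assumptions for illustration. The actual detector may use a different statistic and decision threshold.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def detection_score(steps, entropy_threshold):
    """Average scaling-vector value at the observed tokens, over high-entropy steps only.

    Each step is a hypothetical (aux_probs, vector, token_id) tuple:
      aux_probs -- next-token distribution from the auxiliary model, not the generating LLM
      vector    -- scaling vector recovered from the semantics of the preceding text
      token_id  -- index of the token that actually appears in the text
    Returns None if no step passes the entropy filter.
    """
    values = [vector[tok] for probs, vector, tok in steps
              if entropy(probs) > entropy_threshold]
    if not values:
        return None
    return sum(values) / len(values)


flat = [0.25, 0.25, 0.25, 0.25]
peaked = [0.97, 0.01, 0.01, 0.01]
v = [1.0, -1.0, 1.0, -1.0]
steps = [(flat, v, 0), (flat, v, 2), (peaked, v, 1), (flat, v, 1)]
score = detection_score(steps, entropy_threshold=1.0)  # the peaked step is skipped
```

Assume generation adds a positive bias to tokens whose vector entry is +1. Under that assumption, watermarked text should score above zero, while text written without the key should average near zero. Neither the prompt nor the generating model appears anywhere in the computation.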