Introduction

Vision-language models (VLMs) have advanced significantly across tasks such as image captioning and visual question answering (VQA). However, state-of-the-art VLMs such as GPT-4V remain weak at spatial reasoning, that is, understanding the positions of objects in 3D space and the spatial relationships between them. Proficiency in spatial reasoning would extend the utility of VLMs in domains such as robotics and augmented reality (AR). This paper posits that the spatial reasoning limitations of current VLMs stem not from architectural constraints but from the lack of 3D spatial knowledge in their training data.

Methodology

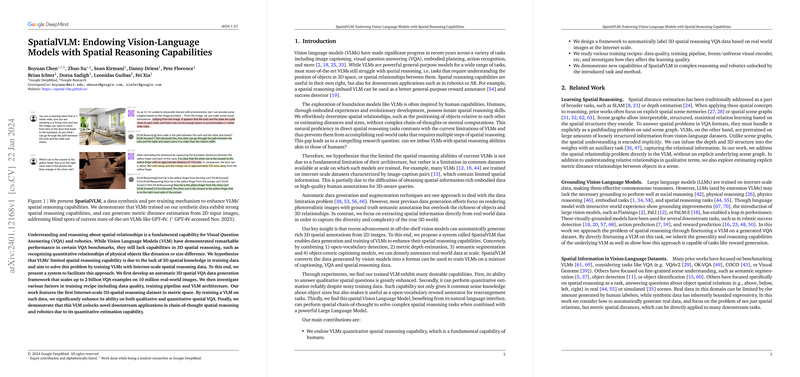

To address the gap in 3D spatial reasoning, the researchers present SpatialVLM, a system that generates a large spatial reasoning dataset for VLM training from internet-scale data. Training on this data gives VLMs the ability to perform both qualitative and quantitative spatial reasoning from 2D images. The data synthesis pipeline combines off-the-shelf computer vision models for object detection, depth estimation, segmentation, and captioning to build a large-scale spatial VQA dataset, on which VLMs are then trained to reason about space directly. The resulting dataset covers 10 million images and contains 2 billion spatial reasoning VQA pairs.
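To make the data synthesis idea concrete, here is a minimal sketch of how detections and depth estimates could be turned into spatial VQA pairs. It is not the authors' pipeline: it assumes a pinhole camera model with known intrinsics, metric depth per object, and two hand-written question templates. The names `Object3D`, `backproject`, and `make_qa_pairs` are hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Object3D:
    """One detected object: a caption plus a 3D position in the camera frame (meters)."""
    caption: str
    x: float
    y: float
    z: float


def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Lift pixel (u, v) with metric depth to a camera-frame 3D point (pinhole model)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def distance(a, b):
    """Euclidean distance between two objects, in meters."""
    return math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))


def make_qa_pairs(a, b):
    """Fill one quantitative and one qualitative template (both templates are assumptions)."""
    d = distance(a, b)
    quantitative = (
        f"How far is the {a.caption} from the {b.caption}?",
        f"About {d:.2f} meters.",
    )
    # Camera-frame x grows to the right of the image.
    side = "left" if a.x < b.x else "right"
    qualitative = (
        f"Is the {a.caption} to the left or right of the {b.caption}?",
        f"The {a.caption} is to the {side} of the {b.caption}.",
    )
    return [quantitative, qualitative]


if __name__ == "__main__":
    mug = Object3D("red mug", 0.0, 0.0, 2.0)
    laptop = Object3D("laptop", 0.3, 0.0, 2.4)
    for question, answer in make_qa_pairs(mug, laptop):
        print(question, "->", answer)
```

In a real pipeline, the captions, pixel locations, and depths would come from the detection, captioning, segmentation, and depth models mentioned above; here they are supplied by hand.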

Model Training and Evaluation

SpatialVLM is trained using a variant of the PaLM-E architecture, with a portion of the training tokens dedicated to spatial reasoning tasks. Comparisons with contemporary VLMs show the effectiveness of SpatialVLM on spatial reasoning benchmarks. The paper also explores how synthetic data quality and different training strategies affect learning. Its findings suggest that VLMs can benefit from spatial VQA supervision without compromising general VQA capabilities, and that unfreezing the vision transformer (ViT) encoder is essential for fine-grained distance estimation. Moreover, despite noise in the training data, SpatialVLM learns spatial estimates that generalize.

Applications and Contributions

SpatialVLM can serve as an open-vocabulary, dense reward annotator for robotic tasks, which demonstrates the practical utility of spatially aware VLMs. When coupled with a powerful LLM, SpatialVLM also supports complex chain-of-thought spatial reasoning, showing that such models can carry out multi-step reasoning tasks. The main contributions of this work are advancing the quantitative spatial reasoning capability of VLMs and introducing a framework for generating an extensive 3D spatial reasoning dataset anchored in real-world imagery. The paper presents SpatialVLM as a step toward VLMs capable of intricate reasoning and robotics applications.
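As an illustration of the dense reward idea mentioned above, the sketch below converts free-text distance answers into per-frame scalar rewards. It makes several assumptions that do not come from the paper: the model's answer is a plain string containing a number followed by a unit in meters or centimeters, the reward is the negative distance, and unparseable answers receive a fixed fallback value. The function names are hypothetical.

```python
import re

# Matches a number followed by a metric unit, e.g. "0.35 m", "35 cm", "0.5 meters".
_DISTANCE_RE = re.compile(r"(\d+(?:\.\d+)?)\s*(cm|centimeters?|m|meters?)\b")


def parse_distance_m(answer):
    """Return the first distance found in the answer, in meters, or None if absent."""
    match = _DISTANCE_RE.search(answer.lower())
    if match is None:
        return None
    value = float(match.group(1))
    unit = match.group(2)
    return value / 100.0 if unit.startswith("c") else value


def reward_from_answer(answer, fallback=-1.0):
    """Assumed reward shape: negative distance, so closer objects score higher."""
    d = parse_distance_m(answer)
    return fallback if d is None else -d


if __name__ == "__main__":
    # Hypothetical answers for three consecutive frames of a reaching task.
    answers = ["About 0.50 meters.", "Roughly 35 cm apart", "About 0.10 m."]
    print([reward_from_answer(a) for a in answers])
```

Querying the model once per frame with a question such as "How far is the gripper from the mug?" would then yield one reward per timestep; the question wording and the reward shape are both illustrative choices.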