Introduction to RAD-DINO

Deep learning models continue to improve in fields such as medical imaging. A common approach is to train these models with language-supervised pre-training, which uses paired text to teach AI systems how to understand and classify images. While this has had considerable success, it also presents challenges, especially when detailed textual data is unavailable or when personal health information must be protected. Here, we introduce and evaluate RAD-DINO, a new biomedical image encoder that departs from this norm by being pre-trained solely on unimodal biomedical imaging data.

Beyond Text Supervision

RAD-DINO challenges the traditional reliance on language supervision in the biomedical imaging domain. It presents an alternative approach in which medical images alone are used to train the model, without any accompanying text. In assessments on a range of medical imaging tasks, including classification, semantic segmentation, and vision–language alignment, RAD-DINO performed similarly to or better than existing language-supervised models.

Interestingly, RAD-DINO's features also correlated more strongly with other patient information, such as age and sex, that is generally not mentioned in radiology reports. This suggests that RAD-DINO may capture a broader and more holistic view of clinical imagery than its text-supervised counterparts.

A Deeper Analysis

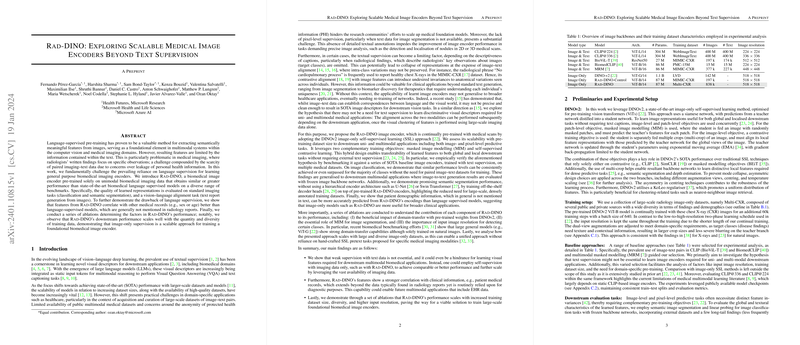

The researchers conducted ablation studies to determine which factors contribute to RAD-DINO’s performance. These studies examined how the image encoder responds to three elements: pre-training weights from general-domain datasets, masked image modeling, and image resolution.

Their results established that transferring pre-trained weights from general-domain image datasets is beneficial and gives RAD-DINO a solid starting point. They also revealed that masked image modeling is particularly important for image segmentation, and they underscore the value of high-quality, domain-specific training data.
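To make the idea of masked image modeling concrete, here is a minimal sketch of the masking step only: an image is divided into a grid of patches, a fraction of them is hidden, and a model would then be trained to predict the hidden content. The text above does not describe the masking scheme, so the uniform random sampling, the 50% mask ratio, and the function names `random_patch_mask` and `apply_patch_mask` are all assumptions made for illustration.

```python
import random


def random_patch_mask(grid_h, grid_w, mask_ratio, seed=0):
    """Return a grid_h x grid_w grid of booleans; True marks a masked patch.

    Uniform random masking is an illustrative assumption, not the
    scheme used by the authors.
    """
    rng = random.Random(seed)
    num_patches = grid_h * grid_w
    num_masked = round(num_patches * mask_ratio)
    masked = set(rng.sample(range(num_patches), num_masked))
    return [[(r * grid_w + c) in masked for c in range(grid_w)]
            for r in range(grid_h)]


def apply_patch_mask(image, mask, patch_size, fill=0.0):
    """Copy `image` (a list of rows) with every masked patch set to `fill`."""
    out = [row[:] for row in image]
    for r, mask_row in enumerate(mask):
        for c, is_masked in enumerate(mask_row):
            if not is_masked:
                continue
            for y in range(r * patch_size, (r + 1) * patch_size):
                for x in range(c * patch_size, (c + 1) * patch_size):
                    out[y][x] = fill
    return out


# Toy 8x8 "image" (all values >= 1) split into a 4x4 grid of 2x2 patches.
image = [[float(y * 8 + x + 1) for x in range(8)] for y in range(8)]
mask = random_patch_mask(4, 4, mask_ratio=0.5, seed=0)
masked_image = apply_patch_mask(image, mask, patch_size=2)
```

In a real training loop the encoder would see `masked_image` (or its masked patch tokens) and be penalized for failing to reconstruct or predict the hidden patches. Because each hidden patch must be inferred from its neighbors, this kind of objective plausibly encourages the local, spatially detailed features that segmentation relies on.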

Benchmarking RAD-DINO

RAD-DINO’s effectiveness was benchmarked against a series of state-of-the-art models across multiple medical datasets. From image classification to the more complex task of generating text reports from medical images, RAD-DINO performed competitively. It excelled in how well its features correlate with patient metadata such as age and sex, which are typically not detailed in text reports. This marks an encouraging step towards AI systems that generalize better across real-world medical imaging applications.
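One common way to measure how much information about metadata is present in an encoder's features is a linear probe: the encoder is frozen and only a small linear classifier is trained on top of its outputs. The sketch below illustrates that idea on purely synthetic data. Whether the authors used this exact protocol is not stated here, and the feature vectors, the binary label, and the names `train_linear_probe` and `probe_accuracy` are hypothetical stand-ins.

```python
import math
import random


def train_linear_probe(features, labels, lr=0.1, epochs=200):
    """Fit logistic-regression weights on fixed features via batch gradient descent."""
    dim = len(features[0])
    w, b, n = [0.0] * dim, 0.0, len(features)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            err = 1.0 / (1.0 + math.exp(-z)) - y  # predicted probability minus label
            for i in range(dim):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def probe_accuracy(w, b, features, labels):
    """Fraction of samples whose predicted class matches the label."""
    hits = 0
    for x, y in zip(features, labels):
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        hits += int((z > 0) == (y == 1))
    return hits / len(labels)


# Synthetic stand-in for frozen encoder features: only dimension 0
# carries information about the (hypothetical) binary metadata label.
rng = random.Random(0)
labels = [i % 2 for i in range(200)]
features = [[rng.gauss(2.0 if y == 1 else -2.0, 1.0)]
            + [rng.gauss(0.0, 1.0) for _ in range(3)]
            for y in labels]

w, b = train_linear_probe(features[:150], labels[:150])
held_out_accuracy = probe_accuracy(w, b, features[150:], labels[150:])
```

Because the probe is linear and the encoder is never updated, a high held-out accuracy indicates that the attribute is readily decodable from the features themselves, which is the sense in which features can be said to correlate with metadata.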

Conclusion and Future Implications

The findings suggest a shift in how foundational biomedical image encoders can be trained. By leveraging the large amounts of available imaging data without the constraints of language supervision, RAD-DINO opens up possibilities for medical AI applications that are more versatile, more scalable, and perhaps better attuned to the nuanced needs of healthcare diagnostics. The paper makes a strong case for the AI community to explore self-supervised learning further, particularly in medical imaging.