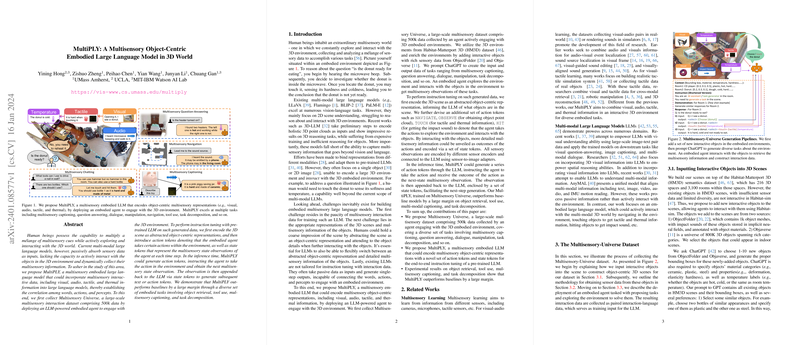

Overview of MultiPLY

The recently introduced MultiPLY framework signifies a transformative approach in LLMs by enabling them to not only absorb multisensory data passively but also to actively interact with three-dimensional (3D) environments. This capability injects an unprecedented level of dynamism into AI agents, allowing them to glean information from the environment through multiple senses—visual, auditory, tactile, and thermal.

Data Collection and Representation

Underpinning this framework is the newly established Multisensory Universe dataset, which provides over half a million instances of multisensory interaction data. To amass this dataset, a virtual agent, powered by an LLM, is deployed within diverse 3D settings to collect observations across several sensory modalities. These 3D environments are abstractly encoded as object-centric representations that inform the LLM of the objects present and their spatial arrangement. Along with this high-level view, the LLM is designed to recognize and employ action tokens that correspond to specific interactions, such as navigating to an object or touching it to acquire tactile information.

State Tokens and Inference

After performing an action, the collected multisensory observation is communicated back to the LLM using state tokens, allowing the model to continuously update its understanding of the environment and determine the next action. This cycle repeats, enabling the agent to methodically explore its surroundings and gather comprehensive sensory data to generate text or further action tokens. MultiPLY's performance exceeds existing baselines across various tasks, including object retrieval, tool usage, multisensory captioning, and task decomposition.

Experimental Findings

Through its unique interactive and multisensory capabilities, MultiPLY demonstrates superiority over previous models that solely process passive data and generate one-off outputs. This is particularly evident in its object retrieval ability, where taking into account the multiple modalities heavily influences the success of identifying the correct object among visually similar candidates. In scenarios that require tool usage, MultiPLY's detailed interaction with its environment allows it to reason more effectively about the functionality of objects given their multisensory attributes, thus providing more accurate solutions. Furthermore, in multisensory captioning tasks, the model's prowess in leveraging various sensory inputs to comprehensively describe objects is evident. Finally, MultiPLY's iterative interaction approach lends itself well to tasks that involve breaking down complex activities into sequential actions.

By establishing a more intricate and closer-to-human method of environmental interaction, MultiPLY marks a significant stride in the direction of embodied AI research. This innovation not only expands the potential uses of LLMs but also enriches the overall modality of how AI systems can learn from and engage with the world around them.