Mixtral 8x7B: A Sparse Mixture of Experts Model Achieving State-of-the-Art Performance

Introduction

The paper introduces Mixtral 8x7B, a language model built on the Sparse Mixture of Experts (SMoE) technique. Because a router selects only a small subset of expert networks for each input token, the model uses a fraction of its parameters per token at inference. The paper reports strong results in mathematics, code generation, and multilingual understanding, with a lower inference cost than comparably performing dense models.

Architectural Innovations

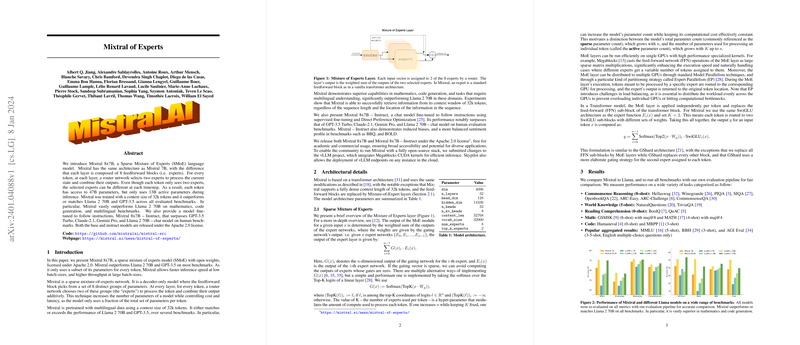

Mixtral is built on the transformer architecture, with the feedforward block in each layer replaced by a sparse Mixture of Experts (MoE) layer. At every layer, each token is processed by two expert networks that a router selects dynamically from a pool of eight, and their outputs are combined. Because the selected experts can differ from token to token and from layer to layer, each token can draw on a diverse set of parameters while the compute per token stays fixed. Key architectural details include:

- Parameter Efficiency: Although the model has 47B parameters in total, each token uses only 13B active parameters during inference, which markedly reduces computation costs.

- Expert Layer Design: A router network scores the experts for each token, and only the two highest-scoring experts process it. The remaining experts are skipped for that token, and this selective engagement is the source of the model's efficiency.
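The routing described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: the helper names (`route_top_k`, `moe_forward`, `swiglu_expert`), the toy dimensions, and the random weights are all hypothetical, and the gated (SwiGLU-style) expert and the softmax over only the selected logits are assumptions taken from the paper's formulation rather than from this summary.

```python
import math
import random

def matvec(w, x):
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def silu(v):
    return v / (1.0 + math.exp(-v))

def swiglu_expert(x, w1, w2, w3):
    """Gated feedforward block (assumed form): w2 @ (silu(w1 @ x) * (w3 @ x))."""
    gate = [silu(v) for v in matvec(w1, x)]
    up = matvec(w3, x)
    return matvec(w2, [g * u for g, u in zip(gate, up)])

def route_top_k(logits, k=2):
    """Return (expert_index, weight) pairs; softmax over the k largest logits only."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, router_w, experts, k=2):
    """Process one token vector x with the k experts chosen by the router."""
    logits = matvec(router_w, x)              # one score per expert
    chosen = route_top_k(logits, k)
    out = [0.0] * len(x)
    for idx, weight in chosen:
        y = swiglu_expert(x, *experts[idx])   # only the chosen experts are evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen

def random_matrix(rng, rows, cols, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

# Toy sizes for illustration; the real model is far larger.
rng = random.Random(0)
DIM, HIDDEN, N_EXPERTS = 8, 16, 8
router_w = random_matrix(rng, N_EXPERTS, DIM, scale=1.0)
experts = [
    (random_matrix(rng, HIDDEN, DIM),   # w1
     random_matrix(rng, DIM, HIDDEN),   # w2
     random_matrix(rng, HIDDEN, DIM))   # w3
    for _ in range(N_EXPERTS)
]
token = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
output, chosen = moe_forward(token, router_w, experts)
print("experts used:", [i for i, _ in chosen])
print("gate weights:", [round(w, 3) for _, w in chosen])
```

Six of the eight experts are never evaluated for this token, which is why the cost per token depends on the number of selected experts rather than on the size of the pool.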

Performance Benchmarks

The paper evaluates Mixtral 8x7B across a broad set of benchmarks, where it is reported to outperform or match Llama 2 70B and GPT-3.5. The main findings are:

- Markedly stronger results than Llama 2 70B on mathematics and code generation tasks, which require understanding and generating complex, logical structures.

- Strong results in multilingual benchmarks, emphasizing the model's capacity for processing and understanding multiple languages with high accuracy.

- Effective handling of long contexts: the model was trained with a context size of 32k tokens and is reported to retrieve information from long inputs without a drop in performance.

Instruction Fine-tuning

Mixtral 8x7B is also fine-tuned to follow instructions, resulting in the Mixtral 8x7B - Instruct model. The paper reports that this variant surpasses GPT-3.5 Turbo, Claude-2.1, Gemini Pro, and the Llama 2 70B chat model on human evaluation benchmarks.

Analysis of Expert Routing

The paper includes an in-depth analysis of how the model's router network selects experts for each token. Interestingly, the distribution of selected experts looks broadly similar across different types of content, which suggests that experts do not specialize by content domain. The paper suggests that routing instead tracks more local, syntactic properties of the text, so the same expert assignments apply across varied inputs.
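One way to picture this kind of analysis is to tally how often each expert is selected within each content domain and compare the resulting distributions. The sketch below is a hedged illustration only: the function names and the gap metric are hypothetical, and the assignments are hand-written toy data, not output from the model or figures from the paper.

```python
from collections import Counter

def expert_usage(assignments, n_experts=8):
    """Fraction of routing slots that went to each expert.

    assignments: list of tuples, one per token, holding the expert indices
    the router selected for that token (two per token for top-2 routing).
    """
    counts = Counter(e for token_experts in assignments for e in token_experts)
    total = sum(counts.values())
    return [counts.get(e, 0) / total for e in range(n_experts)]

def max_usage_gap(usage_a, usage_b):
    """Largest per-expert difference between two usage distributions."""
    return max(abs(a - b) for a, b in zip(usage_a, usage_b))

# Synthetic, hand-written assignments for illustration only (not real model output).
toy_routes = {
    "math": [(0, 3), (1, 3), (2, 5), (0, 7)],
    "code": [(0, 3), (1, 4), (2, 5), (6, 7)],
}
usage = {domain: expert_usage(routes) for domain, routes in toy_routes.items()}
gap = max_usage_gap(usage["math"], usage["code"])
print(usage["math"])
print(usage["code"])
print("largest per-expert gap:", gap)
```

With eight experts, perfectly uniform routing would give each expert 12.5% of the slots. Small gaps between domains would be consistent with the finding above, while large gaps would indicate domain-specialized experts.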

Practical Implications and Future Directions

The release of Mixtral 8x7B under the Apache 2.0 license opens it to both academic and commercial use, offering a robust, efficient, and highly capable model for a wide range of language processing tasks. Its architecture, which allocates compute per token through expert selection, presents a compelling blueprint for future models that balance parameter scale against inference cost.
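The balance between parameter scale and per-token compute can be checked with rough arithmetic. The sketch below assumes the hyperparameters listed in the paper's architecture table (they do not appear in this summary), assumes untied input and output embeddings, and uses a hypothetical helper `count_params`. It is an approximation, not an official accounting.

```python
# Hyperparameters assumed from the architecture table in the Mixtral paper.
DIM = 4096          # model width
N_LAYERS = 32
HEAD_DIM = 128
HIDDEN_DIM = 14336  # width of each expert's feedforward block
N_HEADS = 32
N_KV_HEADS = 8
VOCAB_SIZE = 32000
N_EXPERTS = 8
TOP_K = 2

def count_params(experts_counted):
    """Approximate parameter count with `experts_counted` experts per layer."""
    # Query and output projections, plus smaller key and value projections.
    attention = 2 * DIM * N_HEADS * HEAD_DIM + 2 * DIM * N_KV_HEADS * HEAD_DIM
    expert = 3 * DIM * HIDDEN_DIM       # three weight matrices per gated expert
    router = DIM * N_EXPERTS            # one score per expert
    norms = 2 * DIM                     # two normalization layers per block
    per_layer = attention + experts_counted * expert + router + norms
    embeddings = 2 * VOCAB_SIZE * DIM   # input embedding and output projection (assumed untied)
    return N_LAYERS * per_layer + embeddings + DIM  # + final normalization

total = count_params(N_EXPERTS)   # every expert stored in memory
active = count_params(TOP_K)      # only the selected experts run per token
print(f"total  ~ {total / 1e9:.1f}B")
print(f"active ~ {active / 1e9:.1f}B")
```

Under these assumptions the totals come to roughly 46.7B and 12.9B, in line with the rounded 47B and 13B figures. The total is below 8 x 7B = 56B because only the feedforward experts are replicated; attention, embeddings, and normalization weights are shared.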

Conclusion

In conclusion, Mixtral 8x7B sets a new standard in language modeling through its use of a sparse mixture of experts. Its architectural efficiency, combined with strong performance across a broad range of benchmarks, demonstrates state-of-the-art capabilities and shows that sparse models can improve both efficiency and effectiveness. The paper's analysis of expert routing also offers a foundation for future research on optimizing LLMs for complex tasks across diverse domains.