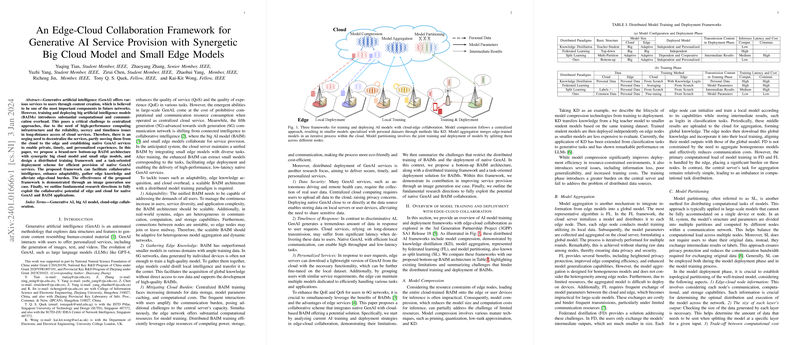

Overview of the Framework

Recent advances in Generative AI (GenAI) services, particularly those that produce images, text, and video, have created a need for more efficient deployment solutions. Because big AI models (BAIMs) impose substantial compute and communication demands, the paper stresses the urgency of decentralizing these services toward the network edge. By integrating small edge models with a larger cloud model, the authors propose a novel edge-cloud collaboration framework for native GenAI service provision. The approach aims to reduce the computational load on centralized infrastructure, improve data security, ensure timely responses, and offer personalized services.

The Challenges Addressed

The proposed framework tackles three major challenges: adaptability, edge knowledge acquisition, and cloud burden mitigation. It adapts to the varying communication, computation, and storage capacities of different network nodes, and it learns from local edge data so that more sophisticated BAIMs can be built from that knowledge. In addition, distributing model training and deployment lowers the data storage, processing, and communication demands placed on central servers, in line with ecological and economic goals.

Architecture and Model Training Considerations

Focusing on a bottom-up BAIM architecture, the paper outlines a two-tier gating network (HierGate) that selects the top-performing edge models for each user task. In this setup, the cloud server applies either a fine-tuning or a freezing strategy to the BAIM, supported by update procedures such as continual learning, pruning, and few-shot learning that absorb emerging knowledge from different nodes. These mechanisms are meant to let the system adapt to evolving tasks without degrading its core performance.
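The summary does not describe HierGate's internals. Purely as an illustration, the sketch below assumes a mixture-of-experts-style design: the first tier routes a task embedding to one group of edge models (for example, a task domain or an edge cluster), and the second tier keeps the top-k models inside that group and weights them with a softmax over their scores. The function names `hierarchical_gate` and `combine`, the linear scoring matrices, and the parameter `k` are all hypothetical and are not taken from the paper.

```python
import numpy as np


def softmax(z):
    # Subtract the max for numerical stability before exponentiating.
    z = np.asarray(z, dtype=float)
    e = np.exp(z - np.max(z))
    return e / e.sum()


def hierarchical_gate(x, group_weights, expert_weights, k=2):
    """Hypothetical two-tier top-k gate (an illustration, not the paper's HierGate).

    x:              task embedding, shape (d,)
    group_weights:  shape (d, G), one scoring column per group of edge models
    expert_weights: list of G arrays; entry g has shape (d, E_g), one column per edge model
    Returns (chosen group index, [(model index, weight), ...]) for the top-k models.
    """
    # Tier 1: route the task to the single highest-scoring group.
    g = int(np.argmax(x @ group_weights))
    # Tier 2: score the edge models inside that group and keep the k best.
    scores = x @ expert_weights[g]
    k = min(k, scores.shape[0])
    top = np.argsort(scores)[::-1][:k]
    # Renormalize over the selected models only, so their weights sum to 1.
    weights = softmax(scores[top])
    return g, [(int(i), float(w)) for i, w in zip(top, weights)]


def combine(outputs, selection):
    """Weighted sum of the selected edge models' outputs (outputs indexed by model id)."""
    return sum(w * np.asarray(outputs[i], dtype=float) for i, w in selection)
```

For example, with `x = np.array([1.0, 0.0])`, group scores `[0, 1]` send the task to group 1, and model scores `[3, 1, 2]` in that group select models 0 and 2 with weights of roughly 0.73 and 0.27. Hard top-k selection keeps only a few edge models active per task, which is one plausible way to limit edge computation; the paper's actual gating criteria may differ.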

Demonstrating the Framework with Image Generation

The framework is validated empirically through an image generation case study that employs variational autoencoders (VAEs) across multiple edge nodes. Fine-tuning the central model on the cloud, followed by personalization at the edge, stands out as the most effective way to improve the quality of the generated images. Quantitative measurements such as the Fréchet Inception Distance (FID) confirm that the fine-tuning strategy outperforms the alternative training approaches.
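FID compares the statistics of features extracted from real and generated images (conventionally the 2048-dimensional pooling features of an Inception-v3 network). Lower values mean the generated distribution is closer to the real one. Modeling each feature set as a Gaussian with mean μ and covariance Σ, FID = ||μ_r − μ_g||² + Tr(Σ_r + Σ_g − 2(Σ_r Σ_g)^{1/2}). The NumPy sketch below computes this quantity from pre-extracted feature arrays; it assumes feature extraction happens elsewhere, and the function names are illustrative rather than the paper's evaluation code.

```python
import numpy as np


def _sqrtm_psd(a):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(a)
    vals = np.clip(vals, 0.0, None)  # drop tiny negative eigenvalues caused by round-off
    return (vecs * np.sqrt(vals)) @ vecs.T


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Fréchet distance between the Gaussians N(mu1, sigma1) and N(mu2, sigma2)."""
    mu1, mu2 = np.asarray(mu1, dtype=float), np.asarray(mu2, dtype=float)
    sigma1 = np.atleast_2d(np.asarray(sigma1, dtype=float))
    sigma2 = np.atleast_2d(np.asarray(sigma2, dtype=float))
    diff = mu1 - mu2
    # Tr((S1 S2)^{1/2}) equals Tr((S1^{1/2} S2 S1^{1/2})^{1/2}); the inner matrix is
    # symmetric PSD, so its eigenvalues are real and non-negative.
    s1_half = _sqrtm_psd(sigma1)
    inner = s1_half @ sigma2 @ s1_half
    inner = (inner + inner.T) / 2.0  # re-symmetrize against round-off
    eig = np.clip(np.linalg.eigvalsh(inner), 0.0, None)
    tr_covmean = np.sum(np.sqrt(eig))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


def fid_from_features(real_feats, gen_feats):
    """FID from two (n_samples, n_features) arrays of pre-extracted image features."""
    real_feats = np.asarray(real_feats, dtype=float)
    gen_feats = np.asarray(gen_feats, dtype=float)
    mu_r, mu_g = real_feats.mean(axis=0), gen_feats.mean(axis=0)
    sigma_r = np.atleast_2d(np.cov(real_feats, rowvar=False))
    sigma_g = np.atleast_2d(np.cov(gen_feats, rowvar=False))
    return frechet_distance(mu_r, sigma_r, mu_g, sigma_g)
```

For instance, two Gaussians with identity covariance whose means differ by the vector (3, 4) have an FID of 25. Because FID depends on the feature extractor and the number of samples, scores are only comparable across training strategies when both are held fixed.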

Concluding Prospects

Although the framework marks a promising step toward a distributed BAIM architecture for native GenAI provisioning, it also raises several open research challenges. Future directions include managing user data securely, designing more robust model fusion schemes, adapting to dynamic changes in edge networks, and building reliable defenses against security threats. The paper suggests that improvements along these lines, in both technology and mode of operation, could unlock the full potential of edge-cloud collaboration in GenAI services.