An Analytical Overview of "CharacterEval: A Chinese Benchmark for Role-Playing Conversational Agent Evaluation"

The paper "CharacterEval: A Chinese Benchmark for Role-Playing Conversational Agent Evaluation" by Quan Tu et al. introduces CharacterEval, a benchmark for evaluating Role-Playing Conversational Agents (RPCAs). RPCAs are LLM-based agents that converse with users while assuming the role of a specific character. The work addresses the lack of comprehensive benchmarks for RPCAs, particularly for agents that operate in a Chinese cultural context.

Dataset Construction and Characteristics

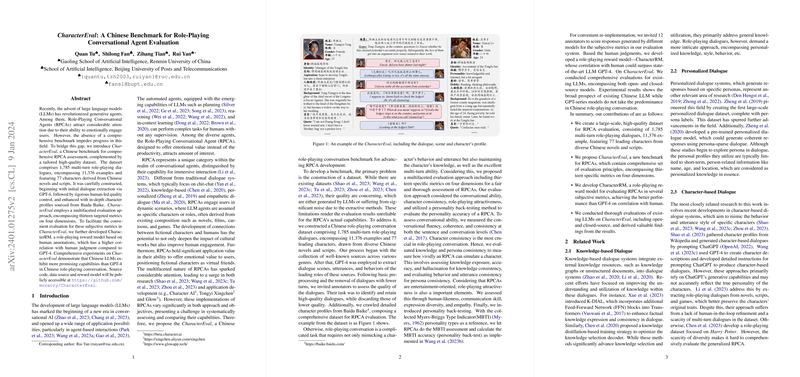

CharacterEval is built on a dataset of Chinese multi-turn role-playing dialogues drawn from Chinese novels and scripts. The dataset comprises 1,785 dialogues and 11,376 examples, featuring 77 distinct characters. The dialogues were constructed in three stages. GPT-4 first extracted the initial dialogues. Human annotators then carried out quality control. Finally, each character was supplemented with a detailed profile sourced from Baidu Baike. This combination of automatic extraction and human review is intended to keep the dialogues faithful to their source works and to the cultural context of Chinese literature.
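The overview does not explain how the 1,785 dialogues are turned into a larger number of examples. One plausible convention, assumed here and not confirmed by the source, emits one example for each turn spoken by the role-played character. Each such example pairs that turn with the preceding context and the character's profile. The sketch uses a hypothetical schema. `Turn`, `Dialogue`, `Example`, and `expand_dialogue` are illustrative names, not the dataset's actual format.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    speaker: str
    utterance: str


@dataclass
class Dialogue:
    # Hypothetical schema: the role-played character, that character's profile
    # (in CharacterEval, sourced from Baidu Baike), and the ordered dialogue turns.
    character: str
    profile: str
    turns: list[Turn] = field(default_factory=list)


@dataclass
class Example:
    character: str
    profile: str
    context: list[Turn]  # every turn before the one to be generated
    reference: str       # the character's original utterance, used as the target


def expand_dialogue(dialogue: Dialogue) -> list[Example]:
    """Split one multi-turn dialogue into per-turn examples (assumed convention).

    An example is emitted for each turn spoken by the role-played character,
    except an opening turn, which has no preceding context to respond to.
    """
    examples: list[Example] = []
    for i, turn in enumerate(dialogue.turns):
        if i == 0 or turn.speaker != dialogue.character:
            continue
        examples.append(
            Example(
                character=dialogue.character,
                profile=dialogue.profile,
                context=dialogue.turns[:i],
                reference=turn.utterance,
            )
        )
    return examples


# Toy dialogue with placeholder names and lines (not taken from the dataset).
demo = Dialogue(
    character="Hero",
    profile="A wandering swordsman who speaks tersely.",
    turns=[
        Turn("Friend", "Where are you headed?"),
        Turn("Hero", "North."),
        Turn("Friend", "Why north?"),
        Turn("Hero", "Unfinished business."),
    ],
)
for ex in expand_dialogue(demo):
    print(len(ex.context), ex.reference)
# 1 North.
# 3 Unfinished business.
```

Under this convention, a dialogue yields one example per non-opening character turn. That would explain why the example count exceeds the dialogue count, although the authors' actual splitting rule may differ.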

Evaluation Metrics

The CharacterEval framework uses a multifaceted evaluation methodology with thirteen metrics grouped into four dimensions: conversational ability, character consistency, role-playing attractiveness, and personality back-testing. Together, these dimensions cover an RPCA's basic dialogue quality as well as how convincingly and engagingly it portrays its character.

- Conversational Ability: This dimension evaluates the fluency, coherence, and consistency of the conversational responses generated by the RPCA.

- Character Consistency: Particularly important for role-playing, this dimension measures knowledge exposure, knowledge accuracy, and knowledge hallucination, as well as persona behavior and persona utterance. Together, these metrics check that responses align with the character's established personality and knowledge.

- Role-Playing Attractiveness: Through metrics such as human-likeness and empathy, this dimension assesses how well an RPCA engages users emotionally. Emotional engagement is central to the value these agents offer in entertainment settings.

- Personality Back-Testing: Using the Myers-Briggs Type Indicator (MBTI), this dimension measures how accurately an RPCA reproduces the intended personality of its character.
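To make the metric structure concrete, the following sketch groups the metrics named above by dimension and averages per-metric scores within each dimension. It also shows one way to score personality back-testing. This assumes the RPCA's MBTI type has already been inferred and that a reference type for the character is known; how the type is inferred is outside this sketch. The mapping is partial because it includes only the metrics named in this overview. The metric keys, the unweighted mean, and the choice between letter-level and whole-type accuracy are hypothetical and may not match the paper's exact scoring procedure.

```python
from statistics import mean

# Hypothetical, partial mapping: only the metrics named in this overview are listed,
# so role-playing attractiveness is incomplete. Keys are illustrative, not official names.
DIMENSIONS: dict[str, list[str]] = {
    "conversational_ability": ["fluency", "coherence", "consistency"],
    "character_consistency": [
        "knowledge_exposure",
        "knowledge_accuracy",
        "knowledge_hallucination",
        "persona_behavior",
        "persona_utterance",
    ],
    "role_playing_attractiveness": ["human_likeness", "empathy"],
    "personality_back_testing": ["mbti_accuracy"],
}

# The two possible letters on each of the four MBTI axes, in type-string order.
MBTI_AXES = ("EI", "SN", "TF", "JP")


def mbti_accuracy(predicted: str, reference: str) -> tuple[float, bool]:
    """Compare an inferred MBTI type with the character's reference type.

    Returns (fraction of the four letters that match, whether the full type matches).
    """
    predicted, reference = predicted.upper(), reference.upper()
    for mbti in (predicted, reference):
        # Each position must hold one of the two letters for its axis, e.g. "INTJ".
        if len(mbti) != 4 or any(ch not in axis for ch, axis in zip(mbti, MBTI_AXES)):
            raise ValueError(f"not a valid MBTI type: {mbti!r}")
    matches = sum(p == r for p, r in zip(predicted, reference))
    return matches / 4, predicted == reference


def dimension_scores(metric_scores: dict[str, float]) -> dict[str, float]:
    """Unweighted mean of the scored metrics in each dimension.

    Assumes every metric is oriented so that higher is better (e.g. a high
    knowledge_hallucination score means little hallucination). Dimensions with
    no scored metrics are omitted.
    """
    result: dict[str, float] = {}
    for dimension, metrics in DIMENSIONS.items():
        present = [metric_scores[m] for m in metrics if m in metric_scores]
        if present:
            result[dimension] = mean(present)
    return result


letter_acc, exact = mbti_accuracy("INFP", "INFJ")
print(letter_acc, exact)  # 0.75 False
print(dimension_scores({"fluency": 4.0, "coherence": 3.0, "consistency": 3.5,
                        "mbti_accuracy": letter_acc}))
# {'conversational_ability': 3.5, 'personality_back_testing': 0.75}
```

Here MBTI accuracy is a fraction in [0, 1], while the other metrics may use a different scale. Averages are therefore meaningful within a dimension but should not be compared directly across dimensions.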

Empirical Insights

The experiments yield a notable finding: Chinese LLMs show more promising capabilities than GPT-4 in Chinese role-playing conversation. Baichuan2 and InternLM, among other Chinese LLMs, score strongly across the dimensions. GPT-4's comparatively weaker results in this setting suggest that models not trained primarily on Chinese linguistic and cultural data face particular challenges here.

Theoretical and Practical Implications

The introduction of CharacterEval is an important step toward the systematic assessment and development of RPCAs. The paper's findings suggest that LLMs tailored to culturally and contextually rich data are well positioned to drive improvements in RPCA design. This is consistent with the broader view that training and evaluation informed by cultural context tend to yield better performance.

Going forward, the benchmark can support further exploration of RPCA capabilities and the refinement of models that specialize in culturally specific role-playing. It may also inform the development of AI systems capable of convincing, human-like portrayals of characters across diverse literary and fictional domains.

Overall, CharacterEval is a valuable resource for evaluating conversational AI aimed at emotional engagement and culturally rich interaction, and it is likely to stimulate further research and development in this area.