Leveraging LLMs for Android Vulnerability Detection

Introduction

Android application security remains a persistent challenge, motivating the search for more effective vulnerability detection methods. Traditional static and dynamic analysis tools are useful but often produce large numbers of false positives and cover only a restricted scope of analysis, which makes them cumbersome to adopt. Recent machine learning approaches have opened new avenues for vulnerability detection, but they require extensive training data and complex feature engineering. Large language models (LLMs) have emerged as a promising alternative because of their ability to work with both natural and programming languages. This paper examines how practical LLMs are for Android security, with the aim of building an AI-driven workflow that helps developers identify and mitigate vulnerabilities.

Prompt Engineering and Retrieval-Augmented Generation

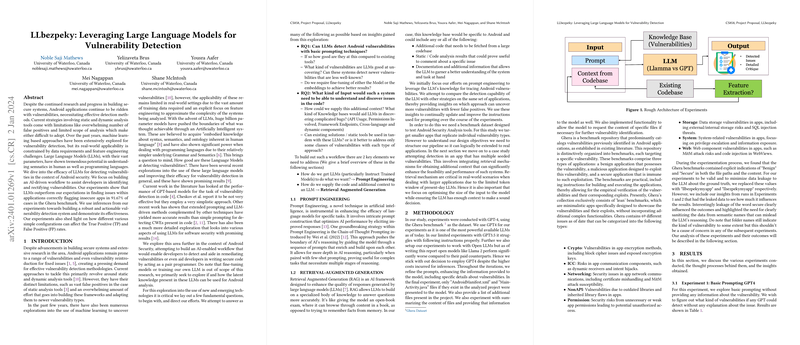

Two techniques are central to using LLMs for vulnerability detection: prompt engineering and retrieval-augmented generation (RAG). Prompt engineering means designing prompts that improve LLM performance on a specific task; strategies such as chain-of-thought prompting, which asks the model to reason step by step before answering, have shown notable promise. RAG lets an LLM augment its responses with information retrieved from an external knowledge base. Together, these techniques give the LLM the context it needs for accurate analysis.
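As a rough illustration of how the two techniques fit together, the sketch below retrieves the most relevant note from a tiny knowledge base and embeds it in a chain-of-thought style prompt. Everything here is an assumption made for illustration: the knowledge base entries, the word-overlap ranking, the function names, and the prompt wording are hypothetical and are not taken from the paper. A real system would likely use embedding-based retrieval and would send the prompt to an LLM.

```python
import re

# Hypothetical mini knowledge base; entries are illustrative, not from the paper.
KNOWLEDGE_BASE = [
    "Exported components without permission checks can be invoked by any app on the device.",
    "Implicit intents carrying sensitive data can be intercepted by other apps.",
    "WebView with JavaScript enabled and file access can expose local files to untrusted pages.",
]


def tokenize(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=1):
    """Return the k documents sharing the most words with the query.

    Word overlap is a deliberately simple stand-in for real retrieval.
    """
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(code, context):
    """Combine retrieved notes and the code into a chain-of-thought style prompt."""
    notes = "\n".join(f"- {note}" for note in context)
    return (
        "You are reviewing Android code for security vulnerabilities.\n"
        f"Background notes:\n{notes}\n\n"
        f"Code under review:\n{code}\n\n"
        "Think step by step: describe what the code does, identify any risky "
        "behavior, and only then state whether it is vulnerable."
    )
```

Calling `build_prompt(snippet, retrieve(snippet, KNOWLEDGE_BASE))` produces a single string that could then be passed to whichever model client is in use.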

Methodology and Experiments

The paper uses GPT-4 for its experiments, with the Ghera benchmark as the primary dataset. A series of experiments measures how well the LLM detects Android vulnerabilities when given different levels of context. Experiment 1 tests the LLM's inherent detection capability with minimal prompting, while subsequent experiments enrich the context by supplying summaries of vulnerabilities and by allowing the LLM to request specific files. The experiments indicate that LLMs, and GPT-4 in particular, show strong potential for accurately identifying vulnerabilities, provided they are given sufficient context.
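The idea of letting the model ask for specific files can be sketched as a simple request loop. This is a minimal sketch under stated assumptions, not the paper's implementation: the `REQUEST:`/`VERDICT:` reply format, the function names, and the scripted stand-in for the model are all hypothetical, and a real run would replace `scripted_llm` with a call to an actual LLM.

```python
def analyze_app(files, ask_llm, max_rounds=5):
    """Let the model request files one at a time until it returns a verdict.

    files: dict mapping a file path to its source text.
    ask_llm: callable that takes the conversation (a list of strings)
             and returns the model's reply as a string.
    The REQUEST/VERDICT reply format is a hypothetical protocol.
    """
    conversation = [
        "You are reviewing an Android app for vulnerabilities.",
        "Available files: " + ", ".join(sorted(files)),
        "Reply 'REQUEST: <path>' to read a file, or 'VERDICT: <finding>' when done.",
    ]
    for _ in range(max_rounds):
        reply = ask_llm(conversation).strip()
        conversation.append(reply)
        if reply.startswith("VERDICT:"):
            return reply[len("VERDICT:"):].strip()
        if reply.startswith("REQUEST:"):
            path = reply[len("REQUEST:"):].strip()
            content = files.get(path, "<no such file>")
            conversation.append(f"Contents of {path}:\n{content}")
        else:
            conversation.append("Unrecognized reply; use REQUEST or VERDICT.")
    # The round budget ran out before the model committed to a verdict.
    return "undetermined"


def scripted_llm(conversation):
    """Stand-in for a real model: read the manifest once, then give a verdict."""
    seen_manifest = any(
        turn.startswith("Contents of AndroidManifest.xml") for turn in conversation
    )
    if not seen_manifest:
        return "REQUEST: AndroidManifest.xml"
    return "VERDICT: exported activity without permission check"
```

Capping the number of rounds keeps the loop from running indefinitely if the model never commits to a verdict, and supplying files on demand keeps the context small compared with sending the whole app at once.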

Results and LLB Analyzer Development

The experiments show that LLMs hold significant promise for detecting vulnerabilities, correctly flagging insecure apps in a substantial fraction of cases. Building on these findings, the paper introduces the LLB Analyzer, a Python package that automates scanning Android applications for vulnerabilities. The tool incorporates the lessons from the experiments and offers a flexible, user-friendly interface for application security analysis.
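One early step in any automated scan of this kind is gathering the app's source files so they can be handed to the model. The sketch below shows one plausible way to do that with the Python standard library. It is not the LLB Analyzer's actual API: the function name `collect_sources`, the set of file suffixes, and the truncation limit are assumptions chosen for illustration.

```python
from pathlib import Path

# Hypothetical choice of file types worth showing to the model.
RELEVANT_SUFFIXES = {".java", ".kt", ".xml"}


def collect_sources(app_root, max_chars=2000):
    """Map each relevant file's relative path to its (truncated) text.

    Truncation is a crude way to stay within a model's context limit;
    a real tool would likely chunk or summarize large files instead.
    """
    root = Path(app_root)
    sources = {}
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in RELEVANT_SUFFIXES:
            text = path.read_text(encoding="utf-8", errors="replace")
            sources[path.relative_to(root).as_posix()] = text[:max_chars]
    return sources
```

The resulting dictionary of paths and contents is a convenient input for prompt construction or for answering the model's requests for specific files.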

Case Studies and Discussion

The paper further validates the LLB Analyzer through case studies, including an analysis of the Vuldroid application. These case studies demonstrate the tool's practical utility: it successfully identified a majority of the seeded vulnerabilities. The discussion section also reflects on the broader implications of the work, highlighting the potential of combining LLMs with traditional analyses to improve vulnerability detection.

Conclusion

This paper is a step toward using LLMs to improve Android application security. Through a series of methodical experiments and the development of the LLB Analyzer, it demonstrates that LLMs can be applied in practice to identify and mitigate vulnerabilities. The research offers a novel approach to vulnerability detection and sets the stage for future work on integrating AI-driven methods with software engineering practices to strengthen system security.

Acknowledgments

The paper closes by acknowledging the support and guidance of the academic and administrative staff at the University of Waterloo, emphasizing the collaborative effort behind the research.