Introduction to Parameter-Efficient Tuning of LLMs

The evolution of large language models (LLMs) in software engineering has improved performance on tasks such as code comprehension and code generation. Recent work has moved towards instruction-tuned Code LLMs, which are trained to follow human instructions and to perform a variety of tasks without task-specific fine-tuning. However, as models grow larger, full-parameter fine-tuning (FFT), which updates every weight, becomes prohibitively costly. This pushes the field towards Parameter-Efficient Fine-Tuning (PEFT) methods, which update only a small fraction of the parameters. The paper evaluates these PEFT methods across different model scales to determine their impact on model performance, robustness, and security.
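To make "prohibitively costly" concrete, the sketch below estimates the training memory that full fine-tuning with the Adam optimizer needs in mixed precision. The 16-bytes-per-parameter breakdown is a common rule of thumb rather than a figure from the paper, activation memory is ignored, and the model sizes in the loop are illustrative only.

```python
# Rough accounting of training memory for full fine-tuning (FFT) with Adam in
# mixed precision. The per-parameter byte counts are an assumed rule of thumb;
# activations, buffers, and framework overhead are not included.
BYTES_PER_PARAM = {
    "fp16 weights": 2,
    "fp16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam first moment": 4,
    "fp32 Adam second moment": 4,
}


def fft_training_memory_gb(num_params: float) -> float:
    """Approximate GB for weights, gradients, and optimizer state under FFT."""
    total_bytes = num_params * sum(BYTES_PER_PARAM.values())
    return total_bytes / 1e9


if __name__ == "__main__":
    # Illustrative sizes only; every parameter carries all five terms above.
    for billions in (1, 3, 7, 16):
        gb = fft_training_memory_gb(billions * 1e9)
        print(f"{billions}B params -> ~{gb:.0f} GB")
```

Under these assumptions a 16-billion-parameter model needs roughly 256 GB for weights, gradients, and optimizer state alone, before counting activations, which is why methods that train only a small set of parameters are attractive.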

Analyzing the PEFT Methods

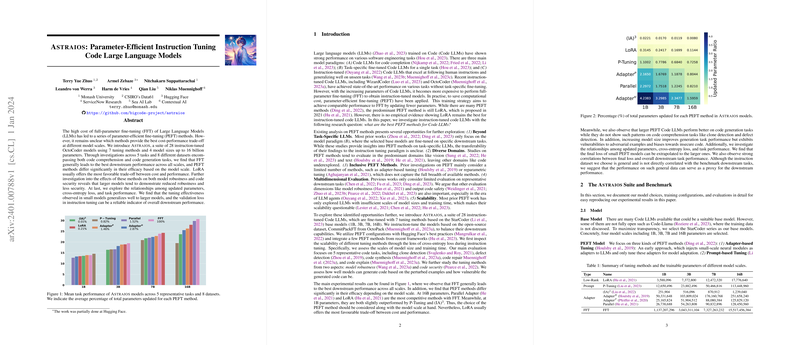

The researchers developed Astraios, a suite of 28 instruction-tuned OctoCoder models spanning 7 tuning methods (full fine-tuning plus several PEFT methods) and 4 model sizes of up to 16 billion parameters. The models were evaluated on multiple code-comprehension and code-generation tasks across several datasets. The findings indicate that FFT generally yields the best downstream performance across scales, while the efficacy of PEFT methods varies considerably with model size; LoRA usually offers the most favorable trade-off between cost and performance.
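Since LoRA often emerges as the best balance, a toy sketch of the idea may help. LoRA freezes a pretrained weight matrix W0 and learns a low-rank update B A, so a layer with a d_out x d_in weight trains only r (d_in + d_out) parameters instead of d_out x d_in. The pure-Python class below is a hypothetical illustration of that technique, not the implementation used to train Astraios; the names `LoRALinear`, `matvec`, and `lora_trainable_params`, the initialization scale, and the 4096 x 4096 layer with rank 8 in the usage example are all assumptions.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_trainable_params(d_out: int, d_in: int, r: int) -> int:
    """Parameters in the low-rank factors A (r x d_in) and B (d_out x r)."""
    return r * (d_in + d_out)


class LoRALinear:
    """Hypothetical toy LoRA layer: h = W0 x + (alpha / r) * B (A x).

    W0 stays frozen; only A and B would receive gradient updates in tuning.
    """

    def __init__(self, w0: Matrix, r: int, alpha: float, seed: int = 0) -> None:
        rng = random.Random(seed)
        d_out, d_in = len(w0), len(w0[0])
        self.w0 = w0              # frozen pretrained weight (d_out x d_in)
        self.scale = alpha / r    # LoRA scaling factor alpha / r
        # A gets a small random init (scale 0.01 is an assumption); B starts at
        # zero, so B @ A is zero and the layer initially reproduces W0 x.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.b = [[0.0] * r for _ in range(d_out)]

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.w0, x)                   # frozen path
        update = matvec(self.b, matvec(self.a, x))  # low-rank trainable path
        return [h + self.scale * u for h, u in zip(base, update)]


if __name__ == "__main__":
    d = 4096
    full = d * d
    lora = lora_trainable_params(d, d, r=8)
    print(f"full: {full:,}  LoRA r=8: {lora:,}  ({100 * lora / full:.2f}%)")
```

Running the file prints the parameter comparison for a single square layer: at rank 8 the two factors hold about 0.39% of the parameters of the full matrix. Initializing B to zero means tuning starts from exactly the pretrained behavior.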

Model Scaling and Fine-Tuning Impact

Interestingly, larger models excel at code generation, but the same scaling trend does not hold for code comprehension. Moreover, these larger models tend to be less robust and more prone to producing insecure code, which suggests that larger instruction-tuned Code LLMs face a trade-off between generating high-quality code and remaining secure and reliable against adversarial inputs. The researchers also observed a strong correlation between validation loss during instruction tuning and downstream performance, indicating that tuning loss can serve as a proxy for a model's broader capabilities.

Model Robustness and Security

Beyond task performance, the paper underscores the importance of model robustness and security. Evaluations on perturbed inputs and security-focused benchmarks revealed that models with fewer updated parameters can sometimes be more robust. However, larger models tend to be less robust and to generate insecure code more frequently.
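The article does not specify which robustness metric was used. One simple, hypothetical way to quantify robustness to perturbed inputs is to compare the ordinary pass rate on the original prompts with a worst-case rate that counts a problem as solved only if the model also passes every perturbed variant. The function names and the input layout below are assumptions for illustration.

```python
from typing import Dict, List

# Hypothetical layout: problem id -> [passed_original, passed_perturbed_1, ...]
Results = Dict[str, List[bool]]


def nominal_pass_rate(results: Results) -> float:
    """Fraction of problems solved on the unperturbed prompt."""
    if not results:
        raise ValueError("no results")
    return sum(v[0] for v in results.values()) / len(results)


def robust_pass_rate(results: Results) -> float:
    """Fraction of problems solved on the original AND every perturbed variant."""
    if not results:
        raise ValueError("no results")
    return sum(all(v) for v in results.values()) / len(results)


def robustness_drop(results: Results) -> float:
    """How much the pass rate falls when perturbations are taken into account."""
    return nominal_pass_rate(results) - robust_pass_rate(results)
```

A larger drop indicates that the model's successes depend more heavily on the exact wording of the prompt.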

Concluding Thoughts

The paper's exploration of model fine-tuning highlights the intricate relationships among model size, cost, performance, robustness, and security. As a comprehensive model suite, Astraios enables an in-depth understanding of these dynamics and offers critical insights for developing more capable and reliable Code LLMs.

Acknowledgements and Contributions

The research benefited from contributions and support from numerous institutions, individuals, and the wider community. These collaborations span academia and industry and reflect the collective effort to advance AI and machine learning in software engineering.