Introduction to the Study

Advances in natural language processing have been driven by large language models (LLMs), which can understand and generate human-like text. Notable examples include GPT-3, OPT, and PaLM. LLMs contain an enormous number of parameters, so their size often exceeds the memory and compute budgets of many devices, particularly those with limited DRAM. This paper introduces a method that enables LLM inference on such devices by storing the model in flash memory, which typically offers far more capacity than DRAM, and loading parameters on demand rather than bringing the whole model into DRAM at once.

Flash Memory & LLM Inference

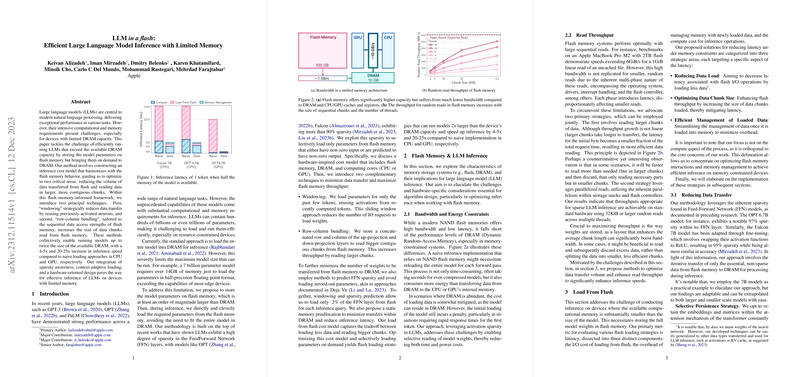

The core challenge is the trade-off between flash memory, which offers high capacity, and DRAM, which offers much faster access. Conventionally, running an LLM requires loading the entire model into DRAM, which is infeasible for very large models on hardware with limited DRAM. The authors' method circumvents this limitation by reading only the parameters needed during inference directly from flash. It rests on two principles: reducing the volume of data transferred from flash, and reading data in larger, more contiguous chunks, which is the access pattern under which flash memory performs best.
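To make the two principles concrete, here is a minimal cost model in Python. It assumes, as a simplification not taken from the paper, that every flash read request pays a fixed per-request overhead (`latency_s`) plus transfer time at a sustained bandwidth (`bandwidth_bps`). `FlashModel` and `read_time` are hypothetical names, and the numbers in the usage example are illustrative values, not measurements.

```python
from dataclasses import dataclass


@dataclass
class FlashModel:
    """Hypothetical flash cost model: per-request overhead + bandwidth."""

    latency_s: float      # assumed fixed overhead per read request (seconds)
    bandwidth_bps: float  # assumed sustained transfer rate (bytes/second)

    def read_time(self, total_bytes: int, chunk_bytes: int) -> float:
        """Time to read total_bytes using requests of chunk_bytes each."""
        n_requests = -(-total_bytes // chunk_bytes)  # ceiling division
        return n_requests * self.latency_s + total_bytes / self.bandwidth_bps


if __name__ == "__main__":
    flash = FlashModel(latency_s=1e-4, bandwidth_bps=1e9)  # illustrative values
    for chunk in (4 * 1024, 64 * 1024, 1_000_000):
        t = flash.read_time(100_000_000, chunk)
        print(f"100 MB in {chunk}-byte chunks: {t:.3f} s")
```

Under this model, shrinking `total_bytes` corresponds to the first principle (transfer less data), while growing `chunk_bytes` amortizes the per-request overhead over more data, which corresponds to the second (read in larger contiguous chunks).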

Load From Flash

The authors further describe a "windowing" technique: the parameters activated by the most recent tokens are kept in DRAM, so each new token requires loading only the parameters that are not already resident. This reuses previously activated data and reduces the number of I/O requests to flash memory. They also introduce "row-column bundling," which stores associated matrix rows and columns together so that they can be fetched in larger contiguous reads. Combined with the sparsity of activations within the model's layers, these strategies substantially reduce the amount of data that must be loaded from flash: the approach selectively loads only the parameters for neurons whose activations are, or are predicted to be, non-zero, minimizing memory traffic.
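The sketch below illustrates windowing and row-column bundling in Python with NumPy. It is a simplified illustration, not the authors' implementation: `NeuronWindow` and `bundle_ffn` are hypothetical names, the set of active neurons for each token is assumed to be given (for example, by a sparsity predictor), and the feed-forward layer is assumed to have an up-projection of shape `(d_model, d_ff)` and a down-projection of shape `(d_ff, d_model)`.

```python
from collections import deque

import numpy as np


class NeuronWindow:
    """Hypothetical sliding-window cache of neuron ids kept in DRAM.

    Holds the union of neurons active in the last `window` tokens; for each
    new token, only neurons not already resident must be read from flash.
    """

    def __init__(self, window: int):
        self.window = window
        self.history: deque = deque()  # one set of active neuron ids per token
        self.resident: set = set()     # neuron ids currently held in DRAM

    def step(self, active: set) -> tuple:
        """Advance by one token; return (ids to load, ids to evict)."""
        to_load = active - self.resident
        self.history.append(active)
        if len(self.history) > self.window:
            self.history.popleft()  # drop the oldest token's active set
        new_resident = set().union(*self.history)
        to_evict = self.resident - new_resident
        self.resident = new_resident
        return to_load, to_evict


def bundle_ffn(w_up: np.ndarray, w_down: np.ndarray) -> np.ndarray:
    """Store column i of w_up and row i of w_down contiguously as row i.

    w_up: (d_model, d_ff), w_down: (d_ff, d_model) -> (d_ff, 2 * d_model).
    """
    return np.ascontiguousarray(np.concatenate([w_up.T, w_down], axis=1))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d_model, d_ff = 8, 32
    bundles = bundle_ffn(rng.standard_normal((d_model, d_ff)),
                         rng.standard_normal((d_ff, d_model)))
    cache = NeuronWindow(window=2)
    for active in [{1, 2, 3}, {2, 3, 4}, {3, 5}]:
        to_load, to_evict = cache.step(active)
        rows = bundles[sorted(to_load)]  # one contiguous row per new neuron
        print(sorted(to_load), sorted(to_evict), rows.shape)
```

Because bundle row `i` holds both the up-projection column and the down-projection row for neuron `i`, each neuron in `to_load` can be fetched with one contiguous read instead of two scattered ones, and the window ensures that neurons reused across recent tokens are not read again.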

Significant Findings

Using these techniques, the paper shows that LLMs up to twice the size of the available DRAM can be run with substantial speedups: inference is 4 to 5 times faster on CPU and 20 to 25 times faster on GPU than with naive loading approaches. These results make it practical to run LLMs efficiently on a wider range of devices, broadening their potential applications. The paper also illustrates the importance of accounting for hardware constraints when designing machine learning algorithms, particularly resource-intensive ones.

Conclusion and Future Implications

The work in this paper opens up new possibilities for running LLMs effectively on devices previously considered unsuitable because of memory constraints. This broadens access to state-of-the-art AI capabilities and invites further research into optimizing such models for widespread adoption across platforms. Hardware-aware algorithm design, as showcased in this paper, is likely to remain an important area of focus as models continue to grow in scale and capability.