Introduction to Pixel-Aligned LLMs

The ability of AI systems to interpret and generate natural language has been extended further by the integration of visual inputs. Advanced vision-language models have shown strong abilities, ranging from describing images in text and answering visually grounded questions to carrying out complex image reasoning. Nonetheless, how LLMs can perform localization within images, in tasks such as word grounding or referring localization, has remained unclear.

The Pixel-Aligned LLM

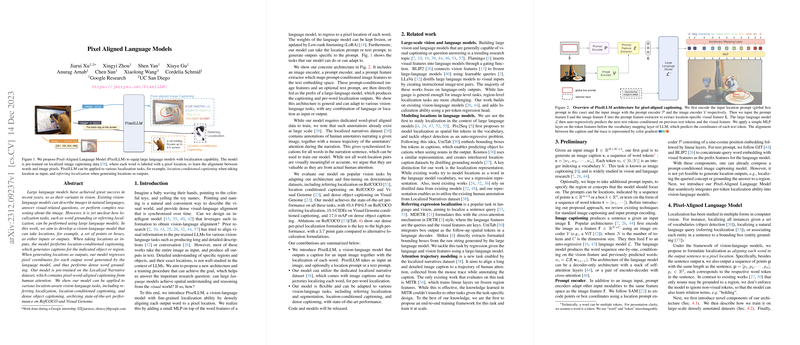

To address this gap, an approach called the Pixel-Aligned LLM (PixelLLM) has been introduced. The model is trained to recognize the spatial alignment between visual content and textual descriptions. It can both generate captions for a given image and identify a pixel location for each word of the caption.

PixelLLM takes an image together with an optional prompt: a set of points, boxes, or text. When given a location prompt, it produces a caption focused on the requested region. On the output side, PixelLLM regresses pixel coordinates for each word it generates, which gives it dense word grounding. As a foundation, the model uses the Localized Narratives dataset, which provides pixel-word-aligned captions derived from human attention.
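The idea of regressing a pixel coordinate for each word can be illustrated with a small sketch. Everything here is an assumption made for illustration: the function name `regress_word_locations`, the single linear layer with a sigmoid, and the random features and weights are hypothetical stand-ins, not the model's actual head or learned parameters.

```python
import math
import random

def regress_word_locations(token_features, weights, bias, image_size):
    """Map each word's feature vector to an (x, y) pixel coordinate.

    Hypothetical head: one linear layer followed by a sigmoid, so the
    output lies in [0, 1] before being scaled to the image size.
    """
    width, height = image_size
    points = []
    for feat in token_features:
        # Linear projection from the feature dimension to 2 outputs (x, y).
        raw = [sum(w * f for w, f in zip(row, feat)) + b
               for row, b in zip(weights, bias)]
        # The sigmoid squashes each output to a normalized coordinate.
        nx, ny = (1.0 / (1.0 + math.exp(-v)) for v in raw)
        points.append((nx * width, ny * height))
    return points

# Toy inputs: random values stand in for real per-word features.
random.seed(0)
dim = 4
words = ["a", "dog", "on", "grass"]
features = [[random.uniform(-1, 1) for _ in range(dim)] for _ in words]
weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(2)]
bias = [0.0, 0.0]

locations = regress_word_locations(features, weights, bias, image_size=(640, 480))
for word, (x, y) in zip(words, locations):
    print(f"{word}: ({x:.1f}, {y:.1f})")
```

The point of the sketch is that every generated word receives its own coordinate, so grounding is dense rather than limited to a few tagged phrases.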

Features and Flexibility of PixelLLM

PixelLLM showcases substantial versatility:

- It offers localization capabilities by taking an image and an optional text or location prompt as input and generating sentences along with localization for each word.

- The architecture allows the model to adapt to different vision-language tasks by accepting any combination of text or location as either the input or output.

- It has been trained on human-annotated captioning data, which includes image captions and trajectories that localize each word.
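One way to picture captioning data in which each word carries a trajectory is the sketch below. The `GroundedWord` layout, the centroid reduction in `word_target`, and the L1 loss are assumptions chosen for illustration; the text above does not specify how trajectories are stored or which training objective is used.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]

@dataclass
class GroundedWord:
    text: str
    trace: List[Point]  # pixel points attributed to this word (hypothetical layout)

def word_target(word: GroundedWord) -> Point:
    """Collapse a word's trace to one target point (here: the centroid)."""
    xs = [p[0] for p in word.trace]
    ys = [p[1] for p in word.trace]
    return (sum(xs) / len(xs), sum(ys) / len(ys))

def l1_localization_loss(predicted: List[Point], words: List[GroundedWord]) -> float:
    """Mean per-word L1 distance between predicted points and trace centroids."""
    total = 0.0
    for (px, py), word in zip(predicted, words):
        tx, ty = word_target(word)
        total += abs(px - tx) + abs(py - ty)
    return total / len(words)

caption = [
    GroundedWord("dog", [(100.0, 200.0), (120.0, 220.0)]),
    GroundedWord("grass", [(300.0, 400.0)]),
]
predictions = [(110.0, 215.0), (290.0, 400.0)]
print(l1_localization_loss(predictions, caption))  # prints 7.5
```

A per-word target of this kind is what makes it possible to supervise a location for every word in the caption rather than for the sentence as a whole.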

The model’s novel components include an image encoder and a prompt encoder. It capitalizes on visual features extracted from images and combines them with prompts to facilitate conditioned outputs.
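As a rough illustration of how point and box prompts could be brought into one form before being combined with image features, the sketch below normalizes both to a four-value box. The function `encode_location_prompt`, the zero-area-box treatment of points, and the whole-image default for a missing prompt are hypothetical choices, not the prompt encoder described in the source.

```python
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]

def encode_location_prompt(prompt: Optional[Sequence[float]],
                           image_size: Tuple[int, int]) -> Box:
    """Return a normalized (x0, y0, x1, y1) vector for a point or box prompt."""
    width, height = image_size
    if prompt is None:
        # Assumption: no location prompt means "describe the whole image".
        return (0.0, 0.0, 1.0, 1.0)
    if len(prompt) == 2:
        # A point (x, y) is treated as a zero-area box.
        x0, y0 = prompt
        x1, y1 = x0, y0
    elif len(prompt) == 4:
        # A box is given as (x0, y0, x1, y1) in pixels.
        x0, y0, x1, y1 = prompt
    else:
        raise ValueError("expected a point (2 values) or a box (4 values)")
    return (x0 / width, y0 / height, x1 / width, y1 / height)

print(encode_location_prompt((320, 240), (640, 480)))        # (0.5, 0.5, 0.5, 0.5)
print(encode_location_prompt((0, 0, 640, 480), (640, 480)))  # (0.0, 0.0, 1.0, 1.0)
```

Using one representation for every prompt type keeps the downstream conditioning code identical whether the user supplies a point, a box, or nothing at all.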

Performance and Applications

PixelLLM performs strongly in several key areas. It achieves state-of-the-art results on tasks such as referring localization on the RefCOCO datasets and dense object captioning on the Visual Genome dataset. This success is attributed to its per-pixel formulation of localization, which outperforms methods that encode location sparsely as raw strings or extra tokens.

Further showcasing PixelLLM's strengths, the model demonstrates proficiency in location-conditioned captioning, reflecting its nuanced understanding of visual regions and object-specific descriptions.

Conclusion

The introduction of PixelLLM is a significant stride in vision-language modeling. By combining the power of LLMs with fine-grained spatial understanding, this research could pave the way for AI systems that interact more naturally with both the visual and textual world. The promising results suggest a future in which AI ties vision and language together more closely, with potential impact on both fields.