Understanding Agent Attention in Transformers

Transformers are a class of deep learning models that have revolutionized the field of natural language processing and have also made significant inroads in computer vision. The Transformer's power primarily comes from its attention mechanism, which helps the model to focus on different parts of the input data to make better predictions. However, traditional global attention mechanisms in Transformers can be computationally expensive, particularly when dealing with a large number of input tokens, as in high-resolution images.

Towards Efficient Attention Mechanisms

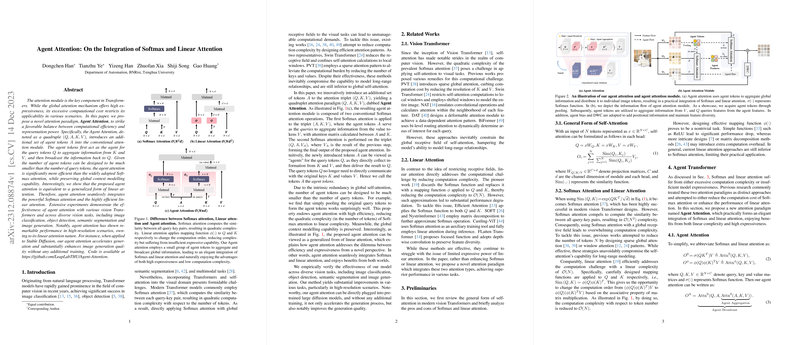

In the latest development, researchers have introduced a novel attention paradigm called "Agent Attention" to address the computational efficiency challenges of global Softmax-based attention in Transformers. This new approach effectively strikes a balance between computational efficiency and representation power. Unlike Softmax attention, which considers the similarity between all query-key pairs, resulting in quadratic computational complexity, Agent Attention introduces an additional set of tokens, termed "agent tokens". These tokens serve as intermediaries, aggregating information from keys and values before broadcasting it back to the queries.

Agent Attention Mechanics

Agent Attention is structured as a quadruple (Q, A, K, V), adding the agent tokens A into the conventional attention module structure. This architecture performs two sequential attention computations: first, agent tokens collect information from values using a Softmax operation between A and K; second, queries gather features from the aggregated agent features. The key innovation is that the number of agent tokens can be much smaller than the number of queries, leading to significant computational savings while maintaining the global context modeling capabilities.

Integration with Linear Attention

Interestingly, Agent Attention is also shown to be equivalent to a generalized form of linear attention, which historically has been simpler but less expressive. This equivalence allows the new attention model to inherit the benefits from both Softmax's expressiveness and linear attention's efficiency. It marries the best of the two worlds: the expressiveness of Softmax attention and the efficiency of linear attention in a seamless manner, which is empirically demonstrated through various vision tasks.

Empirical Verification

The effectiveness of Agent Attention has been tested across a spread of vision tasks, including image classification, object detection, semantic segmentation, and image generation. In each test case, the new attention mechanism provided computational advantages and, in some cases, even improved performance over traditional attention mechanisms. Remarkably, when incorporated into large diffusion models like Stable Diffusion, it accelerated image generation without any additional training while enhancing image quality.

Implications for Future Applications

The efficient nature of Agent Attention, due to its linear complexity with respect to the number of tokens and strong representational capacity, is poised to be transformative for tasks dealing with long sequences of data, such as video processing and multimodal learning. Considering its potential, Agent Attention aligns with the broader trajectory of making Transformer models increasingly scalable and applicable to ever more complex and data-intensive domains.