Introduction

Sequential planning in high-dimensional, partially observable environments poses significant challenges for artificial intelligence. Traditional planning algorithms quickly become intractable when faced with large state and action spaces. Reinforcement learning (RL) approaches help to some extent, but they depend on reward signals to develop viable plans. Generative AI approaches, in particular large language models (LLMs), open new possibilities by generating action plans in natural language, drawing on real-world knowledge acquired through extensive pre-training. However, LLMs fall short as planners: their reasoning about the effects of actions described in text is limited, and they struggle with exploration. This paper introduces "neoplanner," a hybrid agent that combines state space search with queries to foundational LLMs to formulate better action plans.

Approach

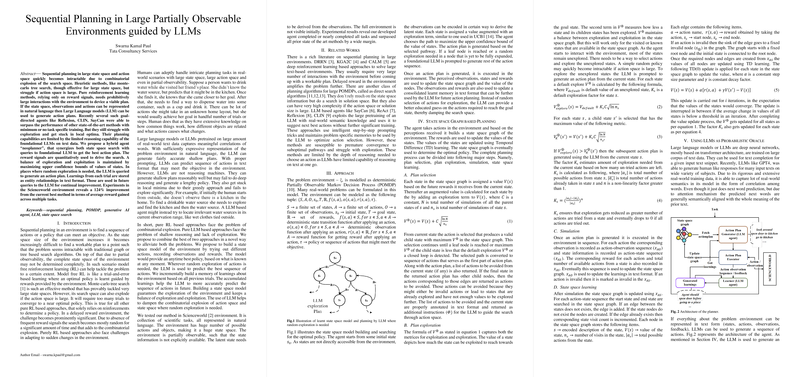

Neoplanner models the environment as a deterministic Partially Observable Markov Decision Process (POMDP) and draws on both RL and generative AI strategies. Over repeated trials, the agent builds a model of the environment and refines its estimates of state transitions and rewards. Where it would otherwise have to explore at random, it asks an LLM to suggest an action plan. The experience gathered so far is encoded as text and supplied to the LLM, so the quality of the suggestions improves with each iteration.
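The iterative loop described above can be illustrated with a minimal sketch. It is not the paper's implementation: the function `run_trials`, the `suggest_plan` callback standing in for an LLM query, the `ToyEnv` class, and the rule "consult the planner only in states with no recorded action" are all simplifying assumptions made for illustration.

```python
from typing import Callable, Dict, List, Tuple

Transition = Tuple[str, float]  # (next_observation, reward)


def run_trials(
    reset: Callable[[], str],
    step: Callable[[str], Tuple[str, float, bool]],
    suggest_plan: Callable[[str, List[str]], List[str]],  # hypothetical LLM wrapper
    n_trials: int = 3,
    max_steps: int = 10,
) -> Tuple[Dict[str, Dict[str, Transition]], List[str], List[float]]:
    """Build a transition model over trials; query the planner in unseen states."""
    model: Dict[str, Dict[str, Transition]] = {}  # obs -> action -> transition
    notes: List[str] = []     # text-encoded experience passed back to the planner
    returns: List[float] = []
    for _ in range(n_trials):
        obs, total, plan = reset(), 0.0, []
        for _ in range(max_steps):
            known = model.get(obs, {})
            if known:
                # Exploit: reuse the best action already observed from this state.
                action = max(known, key=lambda a: known[a][1])
                plan = []
            else:
                # Explore: ask the planner instead of sampling a random action.
                plan = plan or suggest_plan(obs, notes)
                if not plan:
                    break
                action = plan.pop(0)
            next_obs, reward, done = step(action)
            if action not in model.setdefault(obs, {}):
                notes.append(f"In '{obs}', '{action}' led to '{next_obs}' (reward {reward}).")
            model[obs][action] = (next_obs, reward)
            total += reward
            obs = next_obs
            if done:
                break
        returns.append(total)
    return model, notes, returns


class ToyEnv:
    """Hypothetical deterministic environment: start -> hall -> done."""

    TRANSITIONS = {
        ("start", "open door"): ("hall", 0.0, False),
        ("hall", "take key"): ("done", 1.0, True),
    }

    def reset(self) -> str:
        self.obs = "start"
        return self.obs

    def step(self, action: str) -> Tuple[str, float, bool]:
        # Unknown actions leave the state unchanged and yield no reward.
        result = self.TRANSITIONS.get((self.obs, action), (self.obs, 0.0, False))
        self.obs = result[0]
        return result


def stub_llm(obs: str, notes: List[str]) -> List[str]:
    # Stand-in for an LLM call; a real prompt would include `obs` and `notes`.
    return ["open door", "take key"]
```

In this toy setting the planner is consulted once, in the first trial; later trials replay the recorded transitions. A fuller agent would also weigh exploration against the known actions rather than always exploiting them.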

State Space Graph-Based Planning

The agent balances exploration and exploitation by constructing and updating a state space graph from its observed interactions with the environment. It assigns each state a value based on the learned policy and adds an exploration term to that value, following the Upper Confidence Bound (UCB1) principle. For large unexplored regions of the state space, the agent relies on LLMs to fill in gaps in the action plan, which curbs the search space explosion typical of pure RL approaches.
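As an illustration of the value-plus-exploration-term idea, the sketch below applies the standard UCB1 score, mean value plus c * sqrt(ln N / n), to the successors of a state in a deterministic graph. The class `StateGraph`, its method names, and the choice of c = sqrt(2) are assumptions for this example; the paper's exact value estimate and bonus may differ.

```python
import math
from collections import defaultdict


class StateGraph:
    """Hypothetical state space graph built from observed transitions."""

    def __init__(self, exploration_weight: float = math.sqrt(2)):
        self.c = exploration_weight
        self.edges = defaultdict(dict)   # state -> {action: next_state}
        self.value = defaultdict(float)  # state -> running mean of observed returns
        self.visits = defaultdict(int)   # state -> number of recorded visits

    def record(self, state, action, next_state, ret):
        """Store a deterministic transition and update the successor's mean return."""
        self.edges[state][action] = next_state
        self.visits[next_state] += 1
        n = self.visits[next_state]
        self.value[next_state] += (ret - self.value[next_state]) / n

    def ucb1(self, parent, child):
        """Mean value of `child` plus the UCB1 exploration bonus."""
        n_child = self.visits[child]
        if n_child == 0:
            return math.inf  # unvisited successors are tried first
        n_parent = max(1, sum(self.visits[s] for s in self.edges[parent].values()))
        return self.value[child] + self.c * math.sqrt(math.log(n_parent) / n_child)

    def select_action(self, state):
        """Pick the action whose successor has the highest UCB1 score."""
        actions = self.edges.get(state)
        if not actions:
            return None  # nothing known here; a hybrid agent could query an LLM
        return max(actions, key=lambda a: self.ucb1(state, actions[a]))
```

The `None` return marks the point where the graph offers no guidance, which is where a hybrid agent of the kind described here would fall back to an LLM-suggested plan.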

Experimental Results

Experiments in the ScienceWorld environment show that neoplanner achieves a substantial performance gain over existing state-of-the-art methods. Expressing the state space and the available actions in natural language allows the agent's LLM to craft relatively accurate shallow plans, which are then refined through actual interaction with the environment.

Conclusion

Neoplanner's hybrid design gives it advantages that neither pure RL nor LLM-based methods offer alone. By combining the global optimization capabilities of state space exploration with the natural language abilities of LLMs, neoplanner navigates large POMDPs efficiently. The continuous integration of experiential knowledge further sharpens the agent's capacity to formulate and execute sophisticated plans. The resulting improvement over current techniques indicates its potential for complex problem-solving in dynamic environments.