Understanding the Paper's Proposition

This paper presents an intelligent virtual assistant built on LLM-based Process Automation (LLMPA). The system can interpret natural language instructions and carry out complex, multi-step goals inside mobile applications, a notable step beyond today's virtual assistants such as Siri and Alexa. Those conventional assistants rely heavily on predefined functions. The proposed system instead imitates the step-by-step way a human operates an app to complete a task. This human-centric design makes it more adaptable and lets it carry out more complex procedures from natural language directions.

Breaking Down the LLMPA System

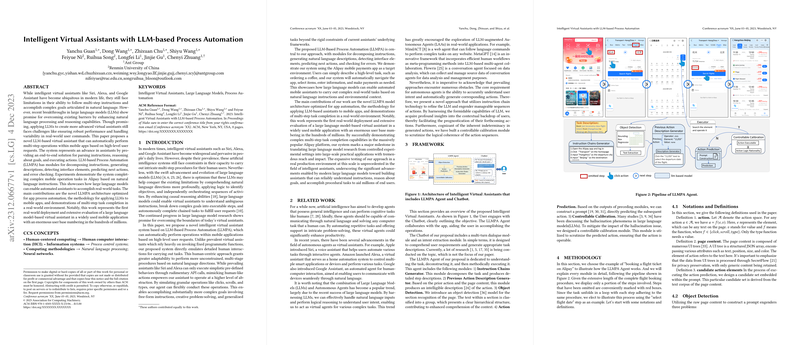

The LLMPA system, which underpins the assistant, is made up of several modules that work together:

- Decomposing Instructions: Breaks a user request down into detailed descriptions of the individual steps.

- Generating Descriptions: Describes the actions already taken, based on the content of the current page.

- Detecting Interface Elements: Uses an object detection module to accurately recognize the elements on the app page.

- Predicting Actions: Constructs prompts from which the model predicts the next action to take.

- Error Checking: Applies controllable calibration to keep the action sequence logically coherent and executable.
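To make the division of labor between these modules concrete, here is a toy sketch in Python. It is not the paper's implementation. Every name in it (`decompose_instruction`, `describe_history`, `detect_elements`, `build_prompt`, `predict_action`, `check_action`, `run_task`) is hypothetical, the LLM and the object detector are replaced by simple rule-based stand-ins, and a "page" is assumed to be a ready-made list of (label, kind) pairs. What it illustrates is the loop: decompose the request, detect elements, describe the history, build a prompt, predict an action, and check that action before recording it.

```python
# Toy sketch of an LLMPA-style loop. All names are hypothetical; the LLM and
# the object detector are replaced by rule-based stand-ins.
from dataclasses import dataclass


@dataclass
class UIElement:
    label: str  # visible text of the detected element
    kind: str   # "button" or "input" in this toy model


@dataclass
class Action:
    verb: str       # "tap" or "type"
    target: str     # label of the element acted on
    text: str = ""  # text entered by a "type" action


def decompose_instruction(instruction: str) -> list[str]:
    # Stand-in for the LLM call that splits a request into step descriptions.
    return [s.strip() for s in instruction.split(" then ") if s.strip()]


def describe_history(history: list[Action]) -> str:
    # Stand-in for the description module: summarize what has been done so far.
    if not history:
        return "No actions yet."
    return "; ".join(f"{a.verb} {a.target}" for a in history)


def detect_elements(page: list[tuple[str, str]]) -> list[UIElement]:
    # Stand-in for the object detector; the "page" is already (label, kind) pairs.
    return [UIElement(label, kind) for label, kind in page]


def build_prompt(step: str, elements: list[UIElement], history_text: str) -> str:
    # The kind of prompt a real system might send to the LLM for the next action.
    labels = ", ".join(e.label for e in elements)
    return f"Done so far: {history_text}\nOn screen: {labels}\nNext step: {step}"


def predict_action(step: str, elements: list[UIElement]) -> Action:
    # Rule-based stand-in for the LLM's answer to the prompt.
    lowered = step.lower()
    verb = "type" if lowered.startswith("type ") else "tap"
    text = ""
    if verb == "type" and " into " in lowered:
        text = step[len("type "):lowered.index(" into ")]
    for element in elements:
        if element.label.lower() in lowered:
            return Action(verb, element.label, text)
    return Action(verb, "<none>", text)


def check_action(action: Action, elements: list[UIElement]) -> bool:
    # Error check: the target must exist and the verb must suit its kind.
    kinds = {e.label: e.kind for e in elements}
    if action.target not in kinds:
        return False
    if action.verb == "type":
        return kinds[action.target] == "input" and bool(action.text)
    return kinds[action.target] == "button"


def run_task(instruction: str, page: list[tuple[str, str]]) -> tuple[list[Action], list[str]]:
    elements = detect_elements(page)
    history: list[Action] = []
    prompts: list[str] = []
    for step in decompose_instruction(instruction):
        prompts.append(build_prompt(step, elements, describe_history(history)))
        action = predict_action(step, elements)
        if not check_action(action, elements):
            raise ValueError(f"rejected {action} for step {step!r}")
        history.append(action)
    return history, prompts


page = [("Transfer", "button"), ("Amount", "input"), ("Confirm", "button")]
actions, prompts = run_task("tap Transfer then type 100 into Amount then tap Confirm", page)
```

With this made-up page, the last line produces three actions: tap Transfer, type "100" into Amount, and tap Confirm. Running the check before an action is appended means an incoherent prediction, such as typing into a button, halts the sequence instead of being executed; a real system might re-prompt the model at that point rather than raise an error.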

In demonstration trials, the system handled tasks in the Alipay mobile application, illustrating its potential for executing complex tasks from natural language commands.

Assessing the Technology

The researchers evaluated the system's capabilities extensively and report strong results. They claim it is the first large-scale, real-world deployment of an LLM-based virtual assistant in a mobile application with a substantial user base. The assistant's ability to complete intricate multi-step tasks points to progress in natural language understanding and action prediction.

Looking Ahead

While the virtual assistant shows promise, the paper also discusses several challenges. Chief among them are the need for large and diverse training data to handle unpredictable user queries, and the resource-intensive nature of LLMs, which makes deployment on mobile devices difficult.

Future directions include extending the training dataset, improving the model architecture, and optimizing system components for mobile platforms. Ongoing real-world usage, together with iterative improvements based on user feedback, will be crucial for further refining the assistant's capabilities.

In conclusion, the paper marks a meaningful stride in AI-driven virtual assistants, pointing to a near future in which more intuitive and capable systems become an integral part of our daily digital interactions.