Overview of CrossLM Framework

The CrossLM framework improves both large language models (LLMs) and small language models (SLMs) without requiring clients to share their data directly. This matters in scenarios where privacy concerns and data governance regulations restrict the use of domain-specific data for model training.

Addressing Privacy and Resource Constraints

CrossLM's novelty lies in extending federated learning to LLMs without imposing the heavy resource burden that such models typically bring. Previous methods either updated only a subset of the LLM's parameters or split model training between client and server. Both approaches have their own drawbacks, including significant resource demands and potential privacy risks.

CrossLM's Collaborative Training

CrossLM uses a client-server collaborative training framework in which each client's SLM is tailored to that client's resource capabilities and privacy needs. Its technical core is data-free knowledge transfer: the LLM uses its generative abilities to synthesize task-specific datasets, and the SLMs then provide feedback on this synthetic data, which is used to refine the quality of the LLM's output. Through this mutually beneficial loop, the LLM and the SLMs guide each other toward better task-specific performance.
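To make the feedback loop more concrete, here is a toy Python sketch under heavily simplified assumptions; it is not CrossLM's actual implementation. All names (`ToySLM`, `ToyGenerator`, `crosslm_round`) are hypothetical. The "LLM" is a softmax policy over a fixed pool of candidate (text, label) samples rather than a real generative model. Each SLM is a bag-of-words logistic classifier. The feedback step is a simple reward-weighted logit update, which stands in for whatever training objective the framework really uses. The sketch only shows the data flow: the server synthesizes samples, clients score them locally, the scores steer the generator, and the clients learn from the samples rated highly.

```python
import math
import random
from collections import defaultdict


class ToySLM:
    """Hypothetical client-side SLM: a bag-of-words logistic classifier."""

    def __init__(self):
        self.w = defaultdict(float)  # per-token weights, kept on the client

    def prob(self, text, label):
        """Probability this SLM assigns to `label` (1 = positive, 0 = negative)."""
        z = sum(self.w[tok] for tok in text.split())
        p_pos = 1.0 / (1.0 + math.exp(-z))
        return p_pos if label == 1 else 1.0 - p_pos

    def train(self, data, epochs=20, lr=0.5):
        """Plain logistic-regression SGD on (text, label) pairs."""
        for _ in range(epochs):
            for text, label in data:
                err = label - self.prob(text, 1)
                for tok in text.split():
                    self.w[tok] += lr * err


class ToyGenerator:
    """Hypothetical stand-in for the LLM: a softmax policy over candidate samples."""

    def __init__(self, candidates):
        self.candidates = candidates  # (text, intended_label) pairs
        self.logits = [0.0] * len(candidates)

    def probs(self):
        m = max(self.logits)
        exps = [math.exp(x - m) for x in self.logits]
        total = sum(exps)
        return [e / total for e in exps]

    def sample(self, k, rng):
        return rng.choices(range(len(self.candidates)), weights=self.probs(), k=k)

    def update(self, idx, rewards, lr=1.0):
        """Raise logits of samples scored above 0.5, lower those scored below."""
        for i, r in zip(idx, rewards):
            self.logits[i] += lr * (r - 0.5)


def crosslm_round(gen, clients, rng, k=32, keep=0.7):
    # 1. The generator synthesizes a task-specific batch.
    idx = gen.sample(k, rng)
    synthetic = [gen.candidates[i] for i in idx]
    # 2. Each client scores the batch with its SLM; only scores are returned.
    scores = [sum(c.prob(t, y) for c in clients) / len(clients) for t, y in synthetic]
    # 3. The feedback steers the generator toward label-consistent samples.
    gen.update(idx, scores)
    # 4. Clients learn from the synthetic samples the SLM ensemble rated highly.
    kept = [s for s, q in zip(synthetic, scores) if q >= keep]
    for c in clients:
        c.train(kept, epochs=1)
    return scores


rng = random.Random(0)
private_sets = [
    [("great fun", 1), ("awful boring", 0)],   # client A's private data
    [("great movie", 1), ("boring plot", 0)],  # client B's private data
]
clients = []
for data in private_sets:
    slm = ToySLM()
    slm.train(data)  # local training only; `data` is never sent anywhere
    clients.append(slm)

candidates = [
    ("great fun", 1), ("great plot", 1), ("awful plot", 0), ("boring movie", 0),
    ("great fun", 0), ("awful boring", 1),  # deliberately mislabeled candidates
]
gen = ToyGenerator(candidates)
for _ in range(10):
    crosslm_round(gen, clients, rng)

p = gen.probs()
print(f"probability mass on mislabeled candidates: {p[4] + p[5]:.3f}")
```

In this sketch, the private lists are used only inside each client's `train` call, and only per-sample scores cross the client boundary. After a few rounds, the generator's probability mass moves away from the two deliberately mislabeled candidates. That is the qualitative effect the SLM feedback is meant to have on the LLM's synthetic data.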

Experimental Validation

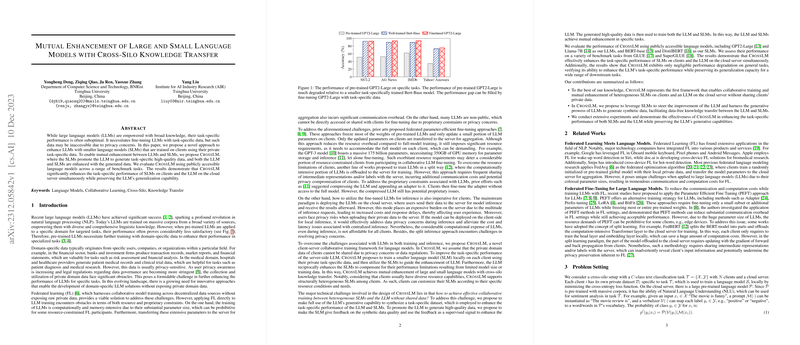

Empirical evaluations show that CrossLM improves the task-specific performance of SLMs by 5.8% to 7.8% on average, a considerable margin over standalone training. Compared with data-free knowledge distillation (KD), CrossLM still achieves an additional accuracy gain of 2% to 2.7%. The LLM's natural language understanding (NLU) and generation (NLG) performance also improves substantially after CrossLM training, with accuracy gains of 18.3% for GPT2-Large and 13.6% for Llama-7B.

Preserving Generalization Capabilities

A critical aspect of CrossLM is that the LLM retains its generalization capabilities after task-specific enhancement. The empirical findings show only marginal performance regressions on unrelated benchmark tasks, indicating that the LLM's broad applicability remains intact.

Concluding Thoughts

CrossLM balances task-specific performance gains against the preservation of generalization, without compromising client data privacy. It addresses client resource limitations and accommodates heterogeneous model architectures, which makes it a versatile tool for federated LLM training. Its synchronous, one-shot training adds to its practical appeal and marks a step forward in collaborative model training that safeguards data privacy.