Introduction

Transformer models pre-trained on extensive text corpora have become increasingly effective at complex language processing tasks. Large language models (LLMs) built this way offer versatile capabilities across a wide range of text-based applications. However, LLM inference is computationally demanding. The problem is especially acute when processing many samples with long contexts: each generated token requires reading the cached keys and values for the entire history, which places heavy demands on memory capacity and bandwidth. SparQ Attention addresses this challenge by selectively fetching only the relevant portions of the cached history within the attention mechanism, improving inference efficiency.
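To make the bandwidth pressure concrete, the sketch below counts the bytes of cached keys and values that standard attention reads in a single decoding step. The function name `kv_cache_bytes_read` is hypothetical, and the example dimensions (32 layers, 32 heads, head dimension 128, 16-bit values) are assumed here to match a Llama-2-7B-style configuration. Model weights and activations are ignored.

```python
def kv_cache_bytes_read(n_layers: int, n_heads: int, head_dim: int,
                        seq_len: int, batch: int, bytes_per_value: int = 2) -> int:
    """Bytes of cached K and V read by dense attention for one decoding step.

    Hypothetical helper: every layer and head reads one key vector and one
    value vector per cached token, for every sequence in the batch.
    """
    keys_and_values = 2
    return (keys_and_values * n_layers * n_heads * head_dim
            * seq_len * batch * bytes_per_value)


# Assumed Llama-2-7B-style dimensions, 4096-token context, 16-bit cache.
one_seq = kv_cache_bytes_read(32, 32, 128, seq_len=4096, batch=1)
eight_seqs = kv_cache_bytes_read(32, 32, 128, seq_len=4096, batch=8)
print(one_seq / 2**30, "GiB per step for one sequence")      # 2.0 GiB ...
print(eight_seqs / 2**30, "GiB per step for a batch of 8")   # 16.0 GiB ...
```

Because this traffic grows linearly with both context length and batch size, and is repeated for every generated token, memory bandwidth rather than arithmetic often limits decoding speed.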

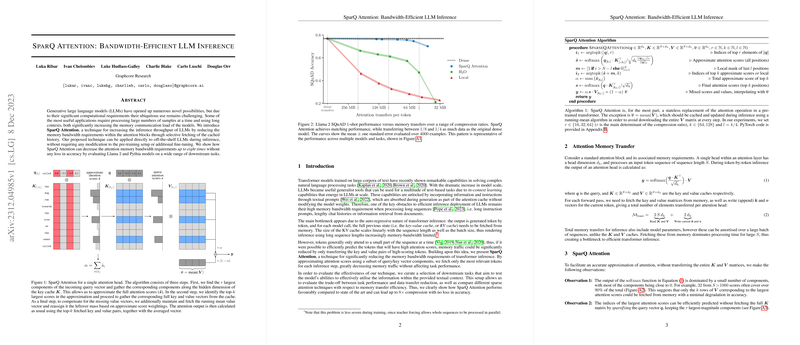

SparQ Attention Algorithm

SparQ Attention improves LLM inference efficiency by reducing the memory bandwidth consumed by the attention mechanism. It works in three sequential steps. First, it finds the largest-magnitude components of the incoming query vector and uses only those components, together with the matching components of the cached keys, to approximate the attention scores. Second, it fetches the full key and value vectors only for the top-scoring tokens and computes attention over them. Third, it interpolates the output of the second step with a running mean of the value vectors, weighted by the share of the approximate attention mass that the top-scoring tokens capture. This approach can reduce memory bandwidth demand by up to eight times without a substantial loss in accuracy, and it can be applied directly to existing LLMs without changing the pre-training setup or requiring additional fine-tuning.
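The three steps can be sketched with NumPy for a single attention head and a single query. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, no causal mask is applied, the mean of the value vectors is recomputed on the fly instead of being maintained as a running mean, and the softmax temperature in step 1 (rescaled by the fraction of the query's L1 mass carried by the kept components) is an assumed choice.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def top_indices(x: np.ndarray, n: int) -> np.ndarray:
    """Indices of the n largest entries of a 1-D array (in no particular order)."""
    return np.argpartition(-x, n - 1)[:n]


def dense_attention(q: np.ndarray, K: np.ndarray, V: np.ndarray) -> np.ndarray:
    """Standard single-head attention for one query; reads all of K and V."""
    d = q.shape[-1]
    return softmax(q @ K.T / np.sqrt(d)) @ V


def sparq_attention(q: np.ndarray, K: np.ndarray, V: np.ndarray,
                    r: int, k: int) -> np.ndarray:
    """Sketch of one SparQ-style decoding step for a single head.

    q: (d,) query; K, V: (S, d) cached keys and values;
    r: query components kept in step 1; k: tokens fetched in full in step 2.
    """
    d = q.shape[-1]
    # Step 1: keep the r largest-magnitude query components and read only
    # those columns of K to approximate the attention scores.
    i1 = top_indices(np.abs(q), r)
    # Assumed temperature: shrink the usual sqrt(d) scale in proportion to
    # the share of the query's L1 mass that the kept components carry.
    scale = np.sqrt(d * np.abs(q[i1]).sum() / np.abs(q).sum())
    s_hat = softmax(q[i1] @ K[:, i1].T / scale)
    # Step 2: fetch full key and value rows only for the k top-scoring tokens
    # and run ordinary attention over them.
    i2 = top_indices(s_hat, k)
    y_top = softmax(q @ K[i2].T / np.sqrt(d)) @ V[i2]
    # Step 3: blend with the mean value vector, weighted by the approximate
    # attention mass alpha that the selected tokens capture.
    alpha = s_hat[i2].sum()
    v_mean = V.mean(axis=0)  # stand-in for a running mean kept during decoding
    return alpha * y_top + (1.0 - alpha) * v_mean


rng = np.random.default_rng(0)
S, d = 512, 64
q = rng.standard_normal(d)
K = rng.standard_normal((S, d))
V = rng.standard_normal((S, d))
y = sparq_attention(q, K, V, r=16, k=64)
print(np.abs(y - dense_attention(q, K, V)).max())  # approximation error
```

When `r` equals the head dimension and `k` equals the sequence length, the approximation becomes exact and the sketch reduces to ordinary attention, which makes a convenient sanity check.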

Experiments and Results

The practical efficacy of SparQ Attention was tested on a variety of downstream tasks, including question answering, summarization, language modeling, and textual repetition. These tasks were chosen to assess model performance under reduced data transfers and to compare SparQ Attention with other sparse attention methods. Strong results were demonstrated with Llama 2 and Pythia models, including variants with several billion parameters, on tasks requiring long-context processing. The technique proved robust at bandwidth compression ratios from 2× to 8×, often with negligible degradation in task performance.
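A simplified transfer count shows how compression ratios like these arise. The sketch assumes that, per head and per decoding step, dense attention reads all S cached keys and values (2·S·d values), while a SparQ-style step reads r components of every cached key plus k full key and value rows (S·r + 2·k·d values). Small terms such as the query and the mean value vector are ignored. The function name and the example settings are hypothetical, not the configurations used in the experiments.

```python
def compression_ratio(seq_len: int, head_dim: int, r: int, k: int) -> float:
    """Approximate reduction in values read per head per decoding step.

    Simplified accounting (an assumption): dense attention reads every key
    and value; the SparQ-style step reads r components of each key, then k
    full key/value rows. Reads of q and the mean value vector are ignored.
    """
    dense = 2 * seq_len * head_dim
    sparq = seq_len * r + 2 * k * head_dim
    return dense / sparq


# Hypothetical settings: 4096-token context, head dimension 128.
print(compression_ratio(4096, 128, r=16, k=256))  # 8.0
print(compression_ratio(4096, 128, r=32, k=128))
```

Under this accounting the S·r term still grows with context length, so for very long contexts and a fixed k the ratio approaches 2d/r; the choice of r therefore caps the achievable saving.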

Discussion and Related Work

This paper fits within a broader line of research on making attention more efficient, including work on sparse attention and on reducing the memory footprint of LLMs. Many previous approaches require modifications during pre-training and may trade off task performance. In contrast, SparQ Attention can be applied at inference time to existing models without adjusting their pre-trained weights. Despite its substantial reduction in memory bandwidth, SparQ Attention has limitations: it reduces how much of the cached keys and values is read per step but not the memory needed to store them, and its interaction with other transformer model variants has not been fully explored. Future research may broaden its applicability or address these limitations, further strengthening its role in efficient LLM inference.