LLMs: Exploring Gated Convolutions and Associative Recall

The paper, titled "Zoology: Measuring and Improving Recall in Efficient Language Models," presents an in-depth analysis of attention-free language models, particularly those built from gating and convolutions. It aims to explain how they perform relative to traditional attention-based models, with a focus on associative recall (AR).

Overview

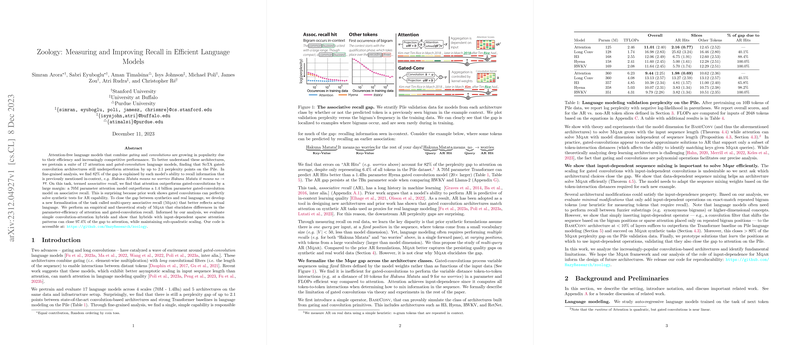

The paper pretrains 17 language models across various scales and architectures to evaluate their performance against attention-based models. Key findings show that state-of-the-art gated-convolution architectures underperform attention-based models by up to 2.1 perplexity points on the Pile dataset. Notably, 82% of this gap is explained by differences in each model's ability to perform associative recall.

Associative recall, pivotal in language modeling, involves recalling information previously mentioned within the context. The paper highlights that a 70M parameter attention model surpasses a 1.4 billion parameter gated-convolution model in AR capability.
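To make the idea concrete, here is a minimal sketch of associative recall as a plain lookup, not as anything a trained model does internally. The function name `recall_next` and the example sentence are illustrative assumptions and do not come from the paper.

```python
def recall_next(tokens):
    """Predict what follows the last token by finding its most recent
    earlier occurrence in the context (hypothetical helper)."""
    query = tokens[-1]
    # Scan backwards through the context, skipping the query position itself.
    for i in range(len(tokens) - 2, -1, -1):
        if tokens[i] == query:
            return tokens[i + 1]
    return None  # the query token never appeared earlier


context = "Hakuna Matata means no worries . Hakuna".split()
print(recall_next(context))  # Matata
```

A model with strong AR capability effectively learns this behavior: when a token reappears, it predicts the token that followed it earlier in the context.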

Associative Recall and Multi-Query Tasks

The paper introduces a novel task, multi-query associative recall (MQAR), designed to capture the difficulty gated convolutions face on real language. MQAR requires a model to perform multiple recalls at varying positions within a single sequence, which highlights the role of input-dependent processing in solving the task.
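The sketch below shows one way an MQAR-style example could be generated. It is an assumption-laden illustration, not the paper's data pipeline: the function `make_mqar_example`, the vocabulary split, the filler token, and the layout (all key-value pairs first, then queries separated by random gaps) are hypothetical choices made for clarity.

```python
import random


def make_mqar_example(num_pairs=4, num_queries=3, vocab_size=32, max_gap=2, seed=0):
    """Build one toy sequence with several recall queries (hypothetical layout).

    Returns (tokens, targets), where targets maps the position of each
    query token to the value the model should predict next.
    """
    rng = random.Random(seed)
    half = vocab_size // 2
    filler = vocab_size - 1  # assumed padding/filler token id
    # Keys come from the lower half of the vocabulary, values from the upper half.
    keys = rng.sample(range(0, half), num_pairs)
    values = [rng.randrange(half, vocab_size - 1) for _ in keys]
    mapping = dict(zip(keys, values))

    tokens = []
    for k, v in zip(keys, values):
        tokens += [k, v]

    targets = {}
    # Queries appear at varying positions: each is preceded by a random gap.
    for q in rng.sample(keys, num_queries):
        tokens += [filler] * rng.randint(0, max_gap)
        targets[len(tokens)] = mapping[q]
        tokens.append(q)
    return tokens, targets


tokens, targets = make_mqar_example()
print(tokens)
print(targets)
```

Because the queries land at different, input-dependent distances from their matching keys, a fixed shift or filter cannot resolve all of them at once, which is the property the task is meant to stress.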

Implications of the Study

The empirical and theoretical assessments indicate that gated-convolution models require model dimensions that scale with sequence length to solve MQAR effectively. Attention models, however, solve it with a dimension that stays constant as sequences grow, which reflects their superior parameter efficiency. To bridge this gap, the authors explore hybrid models that blend convolutional and attention mechanisms. These hybrids close 97.4% of the gap to attention models while maintaining sub-quadratic scaling.

Practical and Theoretical Contributions

From a practical standpoint, the research suggests architectural modifications to existing gated-convolution models. By incorporating input-dependent sparse attention patterns, such modifications can achieve near-parity with attention-based models, significantly improving AR performance while remaining computationally efficient.
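As a rough illustration of what an input-dependent sparse attention pattern might look like, the sketch below selects only positions whose token has already appeared earlier in the sequence, since those are the positions where recall could help. This selection rule and the name `select_attention_positions` are assumptions made for illustration and should not be read as the paper's exact method.

```python
def select_attention_positions(tokens):
    """Return indices whose token already occurred earlier in the sequence
    (a hypothetical, input-dependent selection rule)."""
    seen = set()
    selected = []
    for i, tok in enumerate(tokens):
        if tok in seen:
            selected.append(i)
        seen.add(tok)
    return selected


tokens = ["k1", "v1", "k2", "v2", "pad", "k2", "pad", "k1"]
positions = select_attention_positions(tokens)
print(positions)  # [5, 6, 7]

# Count causal attention scores: every position vs. only the selected ones.
n = len(tokens)
full_scores = n * (n + 1) // 2
sparse_scores = sum(i + 1 for i in positions)
print(full_scores, sparse_scores)  # 36 21
```

The pattern depends on the input itself: a different sequence yields a different set of positions, and sequences with few repeats trigger very little attention computation.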

Theoretically, the paper extends our understanding of LLM architectures, challenging the notion that attention alone is the superior approach. It presents a compelling argument for the integration of input-dependent computations to enhance associative recall capabilities in LLMs.

Future Directions

The exploration of gated-convolution models signals promising pathways for future research in AI. As the paper suggests, incorporating input-dependent sequence mixing could spur innovations in model architectures that balance efficiency and performance. Future work might extend to exploring other architecture classes and their interactions with associative recall tasks, potentially leading to groundbreaking advancements in efficient AI systems.

In conclusion, this paper makes significant strides in dissecting language modeling architectures, focusing on the crucial task of associative recall. Its insights offer practical implications for model design and theoretical contributions to our understanding of sequence processing in AI systems.