Introduction

In the field of vision-LLMs (VLMs), advancements have been significant, yet the complexity of certain visual tasks still presents a challenge. These tasks require not just object identification but also spatial understanding and the retrieval of contextual knowledge. Although LLMs have shown aptitude in generating executable code to tackle sophisticated tasks, the programs they produce are prone to errors and inefficiencies, often missing crucial steps or including unnecessary ones. To overcome these obstacles and minimize computational costs, a novel framework called Visual Program Distillation (VPD) has been proposed.

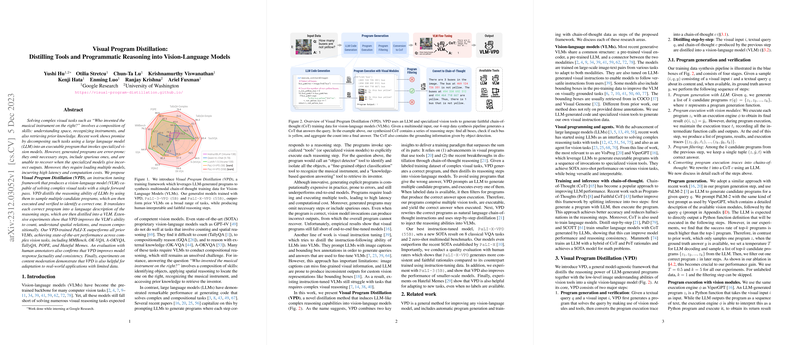

Program Generation and Verification

VPD begins by generating multiple candidate programs using an LLM to solve a given task. These programs are then executed using specialized vision modules. A verification process follows to identify the correct program. For tasks with available labeled data, programs are filtered based on their output's correctness. Execution traces of the programs are recorded, detailing the usage of various vision tools during the process.

Distilling Step-by-Step

After identifying the correct program for a task, the next phase involves translating the program's execution trace into a natural language description of the reasoning steps, often referred to as a chain-of-thought (CoT). This CoT is then distilled into the VLM, with the aim of imbuing it with the same programmatic reasoning capabilities. This distillation process is crucial for improving the VLM’s abilities to count, decipher spatial relationships, and perform compositional reasoning.

Empirical Evidence of Efficiency

The VPD-trained model, referred to as PaLI-X-VPD, demonstrates state-of-the-art performance across several complex vision tasks, surpassing previous VLMs. It achieves this while also providing human-readable reasoning steps. Human annotators confirm that VPD enhances the factuality and consistency of model responses. Separate experiments in content moderation indicate the versatility of VPD, showcasing its utility in real-world applications even with limited data availability. The framework's inherent ability to generate accurate executable programs and distill complex reasoning into VLMs reveals its potential as a transformative approach in the field of AI.