Introduction to DreamVideo

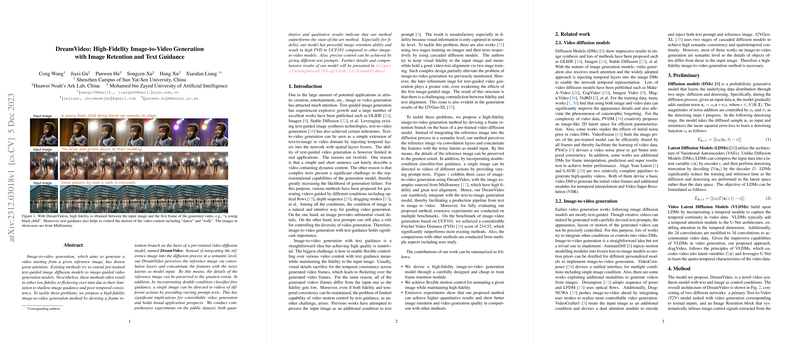

The innovation of technology in the field of generative models has made significant progress in the ability to create realistic videos from still images, also known as image-to-video generation. DreamVideo is an advanced model within this domain that aims to preserve the fidelity of a reference image when generating a video clip. Notably, this model offers not only high-fidelity transformations but also enables users to direct the action within the video using textual descriptions.

Underlying Technology

DreamVideo is built upon pre-existing video diffusion models, which are a type of probabilistic generative model. Diffusion models work by incrementally adding noise to an image and then learning to reverse this process—essentially 'denoising' to generate new data. DreamVideo uniquely maintains the details of a static input image through the generation process by utilizing a dedicated frame retention branch in its architecture. This branch takes the reference image, processes it through convolution layers, and combines the features with the nosy latents as model input, which assists in preserving the original image details during video generation.

Furthermore, DreamVideo incorporates what is known as classifier-free guidance. This technique enhances the model's control over the transformation process, allowing a single static image to evolve into videos of multiple actions simply by altering the text description provided.

Performance and Applications

Extensive experiments and comparisons with other state-of-the-art models demonstrate DreamVideo's superior capabilities. Measured using quantitative benchmarks such as the Fréchet Video Distance (FVD) and qualitative user studies, DreamVideo has showcased more significant image retention and control over the video content. Its unique contribution lies in its ability to allow different resulting videos from the same image using different text prompts and its robustness in maintaining image detail fidelity throughout the video generation process.

Conclusion

The DreamVideo model represents a remarkable step forward for image-to-video technology, offering flexibility in animation control without sacrificing image quality. Its high fidelity and precision in control through textual guidance broaden the possibilities for applications in areas like digital art, film, and entertainment, as well as practical applications that require detailed video demonstrations from static images. DreamVideo sets a new benchmark for performance in image-to-video generation models, signaling exciting future advancements in this creative technology field.