Compositional Chain-of-Thought Prompting for Large Multimodal Models

The paper "Compositional Chain-of-Thought Prompting for Large Multimodal Models" presents an approach for improving the compositional reasoning of Large Multimodal Models (LMMs) without model fine-tuning or costly annotated scene graph data. It targets a known weakness of LMMs: they struggle to capture the compositionality of visual scenes, particularly object attributes and inter-object relationships.

Overview of the Methodology

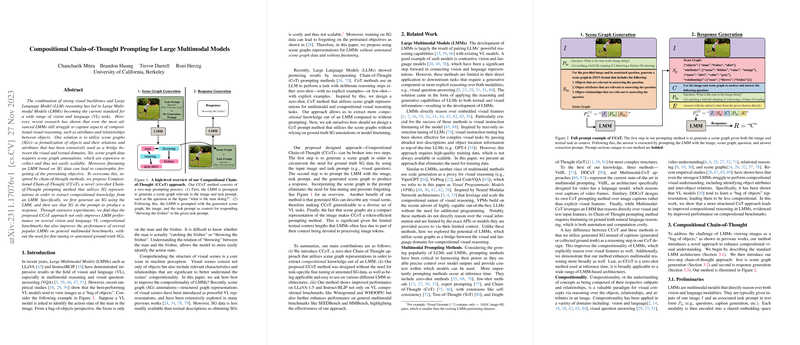

The proposed method, termed Compositional Chain-of-Thought (CCoT), employs a zero-shot chain-of-thought prompting strategy. This method consists of two critical stages:

- Scene Graph Generation: The LMM is first prompted to generate a scene graph (SG) representation of the image, which extracts compositional knowledge from the visual input. A scene graph is a structured description of the objects in a scene together with their attributes and relationships, and it reflects the complexity of visual data more closely than a plain image caption. The paper highlights that SG generation requires no ground-truth annotations; the LMM produces the graph itself, on the fly.

- Response Generation: The generated SG is then included in a second prompt as an intermediate reasoning step, giving the LMM a structured basis for its response to the multimodal task at hand. This builds on zero-shot Chain-of-Thought (CoT) reasoning: the LMM receives additional context without any further training. Because the SG carries detailed information about the image, it strengthens the LMM's ability to interpret and respond to complex visual scenes and tasks.
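The two stages above can be sketched as two successive calls to the same model. The Python below is a minimal illustration under stated assumptions, not the paper's implementation: `lmm` is a hypothetical callable that takes image bytes and a text prompt and returns text, the prompt wording is illustrative rather than quoted from the paper, and requesting JSON output is an assumed convenience.

```python
from typing import Callable, Tuple

# Hypothetical interface (an assumption): any callable mapping
# (image bytes, text prompt) -> generated text.
LMM = Callable[[bytes, str], str]

# Illustrative prompt wording; not the paper's exact prompts.
SG_PROMPT = (
    "For the provided image and question, generate a scene graph in JSON "
    "that lists the relevant objects, their attributes, and the "
    "relationships between them.\n"
    "Question: {question}"
)

ANSWER_PROMPT = (
    "Scene graph:\n{scene_graph}\n\n"
    "Use the image and the scene graph above as context to answer.\n"
    "Question: {question}"
)


def ccot_answer(lmm: LMM, image: bytes, question: str) -> Tuple[str, str]:
    """Run both stages and return (scene_graph_text, answer_text)."""
    # Stage 1: the model writes its own scene graph; no annotations are used.
    scene_graph = lmm(image, SG_PROMPT.format(question=question))
    # Stage 2: the scene graph is fed back as an intermediate reasoning step.
    answer = lmm(
        image,
        ANSWER_PROMPT.format(scene_graph=scene_graph, question=question),
    )
    return scene_graph, answer
```

Both calls go to the same frozen model, so the only added cost in this sketch is one extra generation pass per question.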

Results and Implications

Quantitative evaluation shows that CCoT significantly improves LMM performance on several benchmarks, including Winoground, WHOOPS!, SEEDBench, MMBench, and LLaVA-Bench. The gains appear both in general multimodal reasoning and in tasks that demand strong compositional understanding. Notably, the improvement comes without catastrophic forgetting, a common problem when models are fine-tuned.

Contributions and Theoretical Implications

- Enhanced Multimodal Understanding: CCoT systematically uses intermediate SGs to provide richer contextual representations, improving the LMM's compositional and multimodal reasoning. This contrasts with traditional end-to-end models, which may overlook finer semantic relationships or compositional nuances in visual scenes.

- Broad Applicability: Unlike methods that require fine-tuning on domain-specific datasets, CCoT is zero-shot, so it can be applied across different LMM architectures. This makes it both flexible and computationally efficient.

- Structured Representation Advantage: The paper argues for scene graphs over traditional image captions. The hierarchical, structured nature of SGs captures more of the syntactic and semantic complexity of real-world scenes, which helps with tasks involving intricate visual relationships.
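A small example can make the contrast between captions and scene graphs concrete. The Python below is an illustrative sketch, not taken from the paper: the `SceneGraph` and `Relation` classes, the two captions, and the bag-of-words comparison are all assumptions chosen to show how two scenes built from the same words remain distinguishable once relationships are stored explicitly.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Set


@dataclass(frozen=True)
class Relation:
    """A directed (subject, predicate, object) triple."""
    subject: str
    predicate: str
    obj: str


@dataclass
class SceneGraph:
    """Hypothetical container: object name -> attributes, plus relations."""
    objects: Dict[str, List[str]] = field(default_factory=dict)
    relations: Set[Relation] = field(default_factory=set)


def bag_of_words(caption: str) -> FrozenSet[str]:
    """Order-insensitive view of a caption, used only for comparison."""
    return frozenset(caption.lower().split())


# Two scenes described with exactly the same words.
caption_a = "a brown dog chases a white cat"
caption_b = "a white cat chases a brown dog"

objects = {"dog": ["brown"], "cat": ["white"]}
sg_a = SceneGraph(dict(objects), {Relation("dog", "chases", "cat")})
sg_b = SceneGraph(dict(objects), {Relation("cat", "chases", "dog")})

# The word sets are identical, but the relation triples differ in direction.
same_words = bag_of_words(caption_a) == bag_of_words(caption_b)
same_graph = sg_a == sg_b
```

Here `same_words` is true while `same_graph` is false: the objects and attributes match, and only the direction of the `chases` relation separates the two scenes.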

Future Directions

The promising results of Compositional Chain-of-Thought prompting suggest several avenues for future work. One direction is to extend the SG generation prompts to cover further dimensions of visual context, such as temporal changes or more abstract conceptual mappings, enriching the information available to LMMs. Another is to evaluate CCoT on larger and more diverse datasets, which could show how robust and adaptable the method is in more varied settings.

Overall, CCoT is a significant contribution to visual reasoning and multimodal machine learning. It offers an innovative, low-cost, and effective strategy for improving the compositional understanding of LMMs without the high computational and data annotation costs traditionally associated with such improvements.