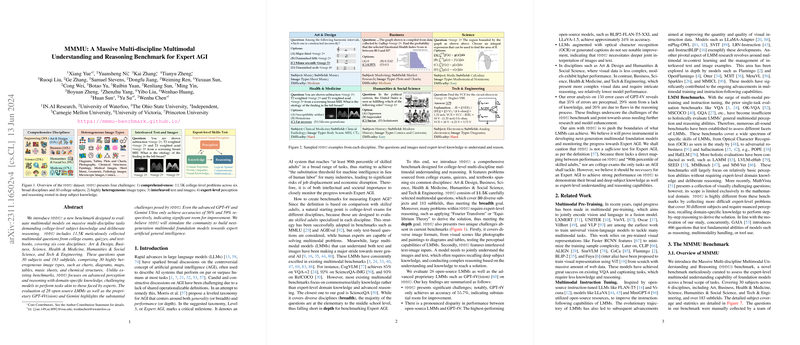

The Massive Multi-discipline Multimodal Understanding and Reasoning (MMMU) benchmark is a significant step forward in evaluating progress toward AGI, specifically in multimodal understanding and reasoning. MMMU challenges models with comprehensive, difficult tasks modeled on college-level examinations, covering six broad disciplines, 30 college subjects, and 183 subfields. The six disciplines are Art & Design, Business, Science, Health & Medicine, Humanities & Social Science, and Tech & Engineering.

What sets MMMU apart is its library of 11.5K questions, carefully collected from various academic materials. The questions include 30 highly diverse image types, ranging from engineering blueprints to histological slides, which reflects the benchmark's emphasis on deep multimodal engagement. The images are interleaved with text, so a model must combine visual and textual cues and apply domain expertise to reach an answer.

MMMU's goal is to test whether models can achieve expert-level perception and advanced reasoning. It has been used to evaluate state-of-the-art LLMs and Large Multimodal Models (LMMs), such as OpenFlamingo and GPT-4, among others. The results show that even the most advanced models struggle: GPT-4V(ision) reached only 56% accuracy. A closer examination of 150 of GPT-4V's mispredicted cases attributes 35% of the errors to perception, 29% to a lack of domain knowledge, and 26% to flaws in the reasoning process, which points to specific areas for further research and model improvement.
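The error breakdown above can be checked with a little arithmetic. The sketch below uses only the three percentages quoted in this text; the function names are illustrative, and the per-category case counts are approximations, since the quoted percentages are rounded.

```python
# Shares quoted in the text for the 150 sampled GPT-4V errors (in percent).
reported_shares = {
    "perception": 35,
    "domain knowledge": 29,
    "reasoning": 26,
}


def remaining_share(shares):
    """Percent of sampled errors not covered by the listed categories."""
    return 100 - sum(shares.values())


def approx_case_count(share_percent, total_cases=150):
    """Approximate number of cases behind a rounded percentage."""
    return share_percent * total_cases / 100


print(remaining_share(reported_shares))  # share left for other error types
for name, share in reported_shares.items():
    print(name, approx_case_count(share))
```

The three named categories account for 90% of the sampled errors, so roughly one error in ten falls outside them.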

Furthermore, performance varies across disciplines. In Art & Design and Humanities & Social Science, where visual complexity is relatively manageable, models score higher than in the more demanding domains of Business, Science, Health & Medicine, and Tech & Engineering. Open-source models also lag behind proprietary ones like GPT-4V, highlighting a gap in multimodal understanding capabilities.
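A per-discipline comparison like the one above comes down to grouping predictions by discipline and computing accuracy within each group. The following is a minimal sketch, assuming each record is a `(discipline, predicted, gold)` tuple; that record layout, the function name, and the toy data are hypothetical and do not reflect MMMU's actual data format or any reported results.

```python
from collections import defaultdict


def accuracy_by_discipline(records):
    """Return {discipline: fraction of records where predicted == gold}."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for discipline, predicted, gold in records:
        totals[discipline] += 1
        if predicted == gold:
            correct[discipline] += 1
    return {d: correct[d] / totals[d] for d in totals}


# Toy, made-up records purely for illustration (not benchmark data).
toy_records = [
    ("Art & Design", "A", "A"),
    ("Art & Design", "B", "B"),
    ("Science", "C", "A"),
    ("Science", "D", "D"),
]

scores = accuracy_by_discipline(toy_records)
for discipline, score in scores.items():
    print(f"{discipline}: {score:.0%}")
```

Reporting accuracy per discipline, rather than a single overall number, is what makes gaps between the less and more visually complex domains visible.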

MMMU is not a definitive test for Expert AGI, as it does not yet encompass the range of tasks an AGI should handle, and it does not directly measure performance against the 90th percentile of skilled adults. Nevertheless, it serves as a crucial component in evaluating a model’s proficiency in domain-specific knowledge and expert-level reasoning and understanding.

In conclusion, MMMU is a robust and challenging multimodal benchmark that pushes the boundaries of multimodal foundation models. By pairing diverse image types with text in domain-specific questions, it raises the bar for AGI evaluation and should help the AI community build models with stronger expert-level understanding and reasoning.