MedAgents: LLMs as Collaborators for Zero-shot Medical Reasoning

Applying LLMs to specialized domains such as medicine is challenging because these domains rely on specialized terminology and demand complex reasoning. The paper "MedAgents: LLMs as Collaborators for Zero-shot Medical Reasoning" introduces the Multi-disciplinary Collaboration (MC) framework, in which LLM-based agents role-play domain experts to strengthen their reasoning without any additional training. The framework mimics a collaborative, multi-round discussion among experts from different specialties, with the aim of improving LLM performance on medical tasks, particularly in zero-shot settings.

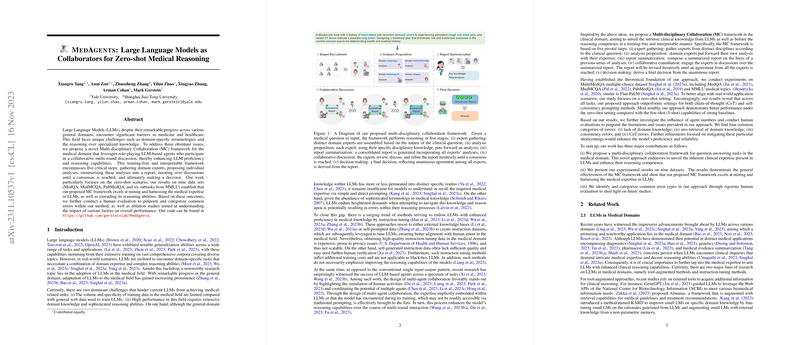

The MC framework proceeds through five stages:

- Expert Gathering: A set of domain experts relevant to the medical question is assembled, each role-played by an LLM agent, so that the question is examined from multiple perspectives with greater depth and breadth.

- Analysis Proposition: Expert agents propose individual analyses derived from their specialized knowledge, representing nuanced perspectives on the medical query.

- Report Summarization: The individual analyses are consolidated into a cohesive report, which serves as a basis for further discussion.

- Collaborative Consultation: Multiple rounds of discussion among agents refine the report until a consensus is reached, ensuring each expert's perspective is incorporated and vetted.

- Decision Making: The final, consensus-derived report forms the basis for answering the initial medical question.
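The five stages can be sketched as a simple pipeline. This is a minimal, simplified sketch under stated assumptions, not the authors' implementation: the `LLM` type stands for any function mapping a prompt string to a completion, and the prompt wording, the yes/no voting rule, the `max_rounds` cap, and `fake_llm` (a scripted stand-in so the example runs offline) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical interface: any function that maps a prompt to a completion.
LLM = Callable[[str], str]


@dataclass
class Consultation:
    experts: List[str]
    analyses: List[str]
    report: str
    rounds: int
    answer: str


def gather_experts(llm: LLM, question: str, n: int) -> List[str]:
    # Stage 1: ask the model which specialties are relevant to the question.
    reply = llm(f"List {n} medical specialties relevant to: {question}")
    return [s.strip() for s in reply.split(",") if s.strip()][:n]


def propose_analyses(llm: LLM, question: str, experts: List[str]) -> List[str]:
    # Stage 2: each agent role-plays one specialty and analyzes the question.
    return [llm(f"You are an expert in {e}. Analyze: {question}") for e in experts]


def summarize(llm: LLM, analyses: List[str]) -> str:
    # Stage 3: consolidate the individual analyses into a single report.
    return llm("Summarize these analyses into one report:\n" + "\n".join(analyses))


def consult(llm: LLM, report: str, experts: List[str], max_rounds: int) -> Tuple[str, int]:
    # Stage 4: every expert votes on the report; any objection triggers a revision.
    for round_no in range(1, max_rounds + 1):
        objections = []
        for e in experts:
            vote = llm(f"You are an expert in {e}. Do you agree with this report? {report}")
            if not vote.lower().startswith("yes"):
                objections.append(vote)
        if not objections:
            return report, round_no  # consensus reached
        report = llm("Revise the report given these comments:\n"
                     + "\n".join(objections) + "\nReport: " + report)
    return report, max_rounds  # no consensus within the cap; keep the latest report


def decide(llm: LLM, question: str, report: str) -> str:
    # Stage 5: answer the original question from the consensus report.
    return llm(f"Based on this report, answer the question.\nReport: {report}\nQuestion: {question}")


def run_mc(llm: LLM, question: str, n_experts: int = 3, max_rounds: int = 3) -> Consultation:
    experts = gather_experts(llm, question, n_experts)
    analyses = propose_analyses(llm, question, experts)
    report, rounds = consult(llm, summarize(llm, analyses), experts, max_rounds)
    return Consultation(experts, analyses, report, rounds, decide(llm, question, report))


def fake_llm(prompt: str) -> str:
    # Scripted stand-in for a real model so the sketch runs offline.
    if prompt.startswith("List"):
        return "cardiology, pharmacology, internal medicine"
    if "Do you agree" in prompt:
        return "yes" if "revised" in prompt else "no: the report omits drug interactions"
    if prompt.startswith("Revise"):
        return "revised report"
    if prompt.startswith("Summarize"):
        return "draft report"
    if prompt.startswith("Based on"):
        return "B"
    return "analysis"


result = run_mc(fake_llm, "Which drug is first-line for stable angina?")
print(result.rounds, result.answer)
```

With `fake_llm`, every expert objects to the draft in the first round, the report is revised once, and all experts accept the revision in the second round, so the run prints `2 B`. Swapping in a real model only requires a function with the same `str -> str` shape.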

Evaluated on nine datasets, including MedQA, MedMCQA, and PubMedQA, the MC framework outperforms existing zero-shot prompting methods such as chain-of-thought (CoT) and self-consistency (SC). Notably, in the zero-shot setting it even achieves higher accuracy than 5-shot baselines, suggesting that it is better at eliciting and applying the medical knowledge already embedded in LLMs.
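For reference, the SC baseline samples several chain-of-thought answers and keeps the most frequent one. A minimal sketch, assuming `sample_answer` (a hypothetical callable) wraps one temperature-sampled CoT completion and returns only its final option letter:

```python
from collections import Counter
from typing import Callable, List


def self_consistency(sample_answer: Callable[[], str], n: int = 5) -> str:
    # Draw n independent reasoning samples and keep only each one's final answer.
    answers: List[str] = [sample_answer() for _ in range(n)]
    # Majority vote; on a tie, Counter.most_common keeps first-seen order.
    return Counter(answers).most_common(1)[0][0]


# Canned answers standing in for five sampled CoT completions.
canned = iter(["A", "C", "A", "B", "A"])
print(self_consistency(lambda: next(canned)))
```

Unlike the MC consultation stage, the SC samples never see one another's reasoning; they are only aggregated by vote at the end.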

A key finding from examining the framework's configuration is that role-playing influences LLM performance. Assigning each agent a specialty prompts it to surface domain-specific knowledge and to debate different aspects of the medical question, a more sophisticated alternative to simple prompting. In this way, the MC framework addresses a significant weakness of LLMs: their frequent inadequacy on tasks that demand specialized knowledge and reasoning beyond their general training.
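To make the contrast with simple prompting concrete, the sketch below builds a plain prompt and a role-playing prompt for the same multiple-choice question. The wording and the function names `plain_prompt` and `role_prompt` are hypothetical illustrations, not the prompts used in the paper; by construction, the only difference between the two is the persona prefix.

```python
from typing import Dict


def plain_prompt(question: str, options: Dict[str, str]) -> str:
    # Question, then one "(A) text" line per option, then the instruction.
    lines = [question] + [f"({key}) {text}" for key, text in sorted(options.items())]
    lines.append("Think step by step, then give the letter of the best option.")
    return "\n".join(lines)


def role_prompt(specialty: str, question: str, options: Dict[str, str]) -> str:
    # Same task, prefixed with a persona so the model reasons as one specialist.
    persona = (f"You are a medical expert in {specialty}. "
               "Analyze the question from the perspective of your specialty.")
    return persona + "\n" + plain_prompt(question, options)


opts = {"A": "Aspirin", "B": "Warfarin"}
print(role_prompt("cardiology", "Which drug is used for secondary prevention after MI?", opts))
```

Keeping the task text identical isolates the persona as the only variable, which is the kind of comparison needed to attribute a performance change to role-playing.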

The paper also presents an error analysis that groups common failures into four categories: lack of domain knowledge, mis-retrieval of domain expertise, consistency issues, and chain-of-thought errors. It suggests that refinements targeting these error types could improve the reliability of future versions of the framework.
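When failure cases are labeled by hand, the four categories can be encoded as a small taxonomy and tallied. This is a hypothetical helper (`ErrorType`, `error_shares`), and the labels in the usage lines are made up for illustration; they are not results from the paper.

```python
from collections import Counter
from enum import Enum
from typing import Dict, Iterable


class ErrorType(Enum):
    LACK_OF_DOMAIN_KNOWLEDGE = "lack of domain knowledge"
    MIS_RETRIEVAL = "mis-retrieval of domain expertise"
    CONSISTENCY = "consistency issue"
    COT_ERROR = "chain-of-thought error"


def error_shares(labels: Iterable[ErrorType]) -> Dict[ErrorType, float]:
    counts = Counter(labels)
    total = sum(counts.values())
    # Report every category, including ones that never occurred, as a fraction of all errors.
    return {e: (counts[e] / total if total else 0.0) for e in ErrorType}


# Illustrative labels only, not data from the paper.
labels = [ErrorType.COT_ERROR, ErrorType.COT_ERROR, ErrorType.MIS_RETRIEVAL, ErrorType.CONSISTENCY]
for err, share in error_shares(labels).items():
    print(f"{err.value}: {share:.0%}")
```

Listing zero-count categories explicitly keeps the breakdown comparable across different runs or model variants.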

Overall, the paper argues that multidisciplinary collaboration among LLM-based agents can effectively uncover domain-specific knowledge, opening a path toward applying LLMs to real-world medical reasoning tasks without additional training. The framework is a significant step toward adapting LLMs for healthcare without extensive specialized training, and it points to future research directions, such as hybrid models or optimized prompting strategies, that could further improve the utility and precision of LLMs in specialized domains.