GLiNER: A Generalist Model for Named Entity Recognition Using Bidirectional Transformer

The paper "GLiNER: Generalist Model for Named Entity Recognition using Bidirectional Transformer" introduces an approach to Named Entity Recognition (NER) built on a bidirectional transformer encoder. GLiNER aims to overcome the limitations of both traditional NER models and large language models (LLMs) by providing a flexible, resource-efficient way to extract entities of any type from text.

Introduction and Motivation

Traditional NER systems are constrained by a predefined set of entity types, which limits their applicability across domains. LLMs like GPT-3 and ChatGPT have enabled more versatile entity extraction through natural language instructions, but they require substantial computational resources and can be costly, which makes them impractical for some applications. The authors address these issues by developing a compact NER model capable of identifying any entity type. GLiNER uses a bidirectional transformer encoder to extract entities in parallel, rather than generating them token by token as LLMs do.

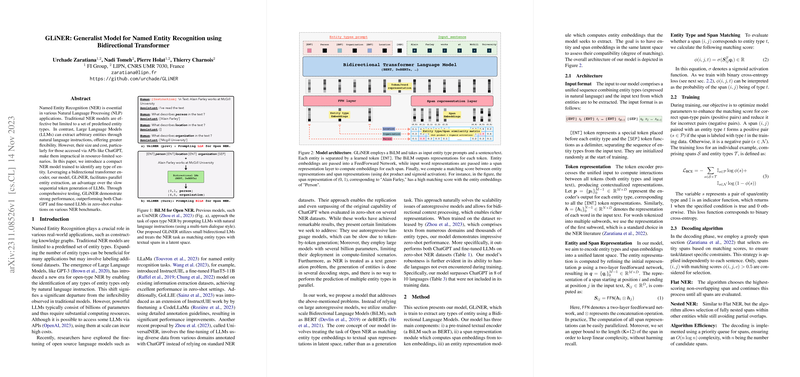

Model Architecture and Methodology

The proposed GLiNER model is built on a bidirectional language model (BiLM) and matches entity type embeddings against textual span representations in a shared latent space. The architecture consists of three main components:

- Textual Encoder: Uses a BiLM such as BERT or DeBERTa to process the input text together with the entity type prompts.

- Span Representation Module: Computes span embeddings from token embeddings.

- Entity Representation Module: Computes entity embeddings, allowing the model to assess the compatibility between spans and entity types.

The overall architecture enables GLiNER to process entity types and spans in parallel, enhancing the model's efficiency and scalability. By training on a diverse set of texts and entity types from the Pile-NER dataset, GLiNER demonstrates strong zero-shot performance across multiple NER benchmarks.
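The span-to-type matching described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The function names are hypothetical, and the vectors are hand-written stand-ins for encoder outputs. The span embedding is simply the start and end token vectors concatenated, with any learned projection layers omitted. Using the sigmoid of a dot product as the compatibility score is likewise an assumption of this sketch.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def enumerate_spans(num_tokens: int, max_width: int) -> list[tuple[int, int]]:
    """Return all (start, end) pairs, end inclusive, up to max_width tokens long."""
    return [
        (start, end)
        for start in range(num_tokens)
        for end in range(start, min(start + max_width, num_tokens))
    ]


def span_embedding(token_vecs: list[list[float]], start: int, end: int) -> list[float]:
    # Assumption: a span is represented by its start and end token vectors,
    # concatenated. A real model would pass this through learned layers.
    return token_vecs[start] + token_vecs[end]


def score_spans(
    token_vecs: list[list[float]],
    type_vecs: dict[str, list[float]],
    max_width: int = 3,
) -> dict[tuple[int, int, str], float]:
    """Score every (span, entity type) pair independently of the others."""
    scores = {}
    for start, end in enumerate_spans(len(token_vecs), max_width):
        span_vec = span_embedding(token_vecs, start, end)
        for type_name, type_vec in type_vecs.items():
            dot = sum(s * t for s, t in zip(span_vec, type_vec))
            scores[(start, end, type_name)] = sigmoid(dot)
    return scores
```

Because each (span, type) score depends only on that pair's two vectors, all pairs can be computed at once as a single matrix product. This independence is what makes parallel extraction possible, in contrast to generating entities one token at a time.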

Key Results

The authors evaluate GLiNER against several baselines, including ChatGPT, InstructUIE, and UniNER. The results show that GLiNER outperforms these models in various zero-shot settings, highlighting its robustness and adaptability. Specifically, the model shows significant improvements in precision, recall, and F1-score on out-of-domain (OOD) NER benchmarks and on 20 standard NER datasets. Notably, performance does not degrade significantly on languages that were not included in the training data: GLiNER outperforms ChatGPT in 8 of the 10 evaluated languages.

Analysis and Discussion

The paper also examines the effects of negative entity sampling and entity type dropping during training, showing that both matter for balancing precision and recall. The authors further compare different BiLM backbones and find that DeBERTa-v3 performs best among those tested.

Practical and Theoretical Implications

The GLiNER model presents several practical advantages:

- Efficiency: Smaller size and parallel processing make it more suitable for deployment in resource-constrained environments compared to LLMs.

- Flexibility: Ability to generalize across various domains and languages without requiring extensive fine-tuning.

- Cost-effectiveness: Reduces the computational costs associated with using large-scale models like GPT-3 or ChatGPT.

On the theoretical side, GLiNER shows how BiLMs can be used for open-type NER and highlights the benefits of matching entity types with textual spans in latent space. This approach opens new avenues for developing efficient, scalable NER models that perform well across diverse applications.

Future Directions

Future work could focus on further optimizing GLiNER's architecture for enhanced performance and exploring additional pretraining datasets to boost its capabilities in low-resource languages. The integration of domain-specific knowledge and adaptive learning mechanisms could also be investigated to improve the model's generalizability and accuracy.

Overall, the GLiNER model represents a significant step forward in the field of NER, offering a practical, efficient, and adaptable solution for extracting entities from text across various domains and applications.