The paper introduces Distributed Low-Communication (DiLoCo) training, a distributed optimization algorithm designed for training LLMs on multiple, poorly connected clusters of devices. DiLoCo is presented as a solution to challenges associated with traditional LLM training, which requires a large number of tightly interconnected accelerators and presents engineering and infrastructure difficulties. DiLoCo is a variant of federated averaging, where the number of inner steps is large, the inner optimizer is AdamW, and the outer optimizer is Nesterov momentum.

The authors address the difficulties of co-locating and tightly synchronizing a large number of accelerators by drawing inspiration from Federated Learning. The core idea is that $k$ workers each operate on their own "island" of devices, consuming a partition of the data and updating a model replica. These workers perform local computations and exchange gradients only every $H$ steps to synchronize their model replicas. The paper posits that DiLoCo addresses these shortcomings by:

- Reducing the number of co-located devices required for each worker.

- Minimizing communication frequency between workers.

- Accommodating heterogeneous devices across different islands.

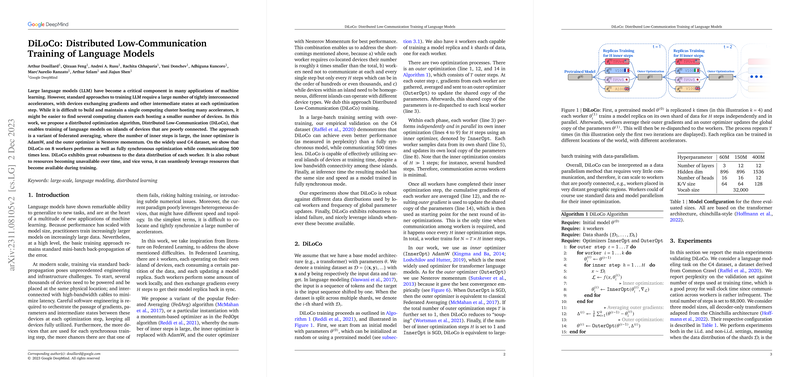

The paper details the DiLoCo algorithm, which nests an inner optimization inside an outer optimization. The outer optimization (lines 1, 12, and 14 in Algorithm 1) consists of $T$ outer steps; at each one, the outer gradients from all workers are gathered, averaged, and used by an outer optimizer to update a shared copy of the parameters. This shared copy is then re-dispatched to each local worker (line 3). Within each phase, each worker (line 3) performs its own inner optimization (lines 4 to 9) for $H$ steps using an inner optimizer. Each worker samples data from its own shard (line 5) and updates its own local copy of the parameters (line 8). The inner optimization consists of $H \gg 1$ steps, so communication across workers is minimal, occurring only once every $H$ inner optimization steps. In total, a worker trains for $N = T \times H$ inner steps.
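
The two nested loops described above can be sketched on a toy problem. This is a minimal illustration, not the paper's implementation: the function names (`adamw_step`, `nesterov_step`, `diloco`), the quadratic per-worker losses, the inner learning rate, the choice to keep each worker's AdamW state across outer steps, and the particular Nesterov formulation are all assumptions made for the example.

```python
import math

def adamw_step(theta, grad, state, lr=0.05, b1=0.9, b2=0.999, eps=1e-8, wd=0.0):
    """One AdamW update on a list of floats (weight decay disabled by default)."""
    state["t"] += 1
    t = state["t"]
    new_theta = []
    for j, (p, g) in enumerate(zip(theta, grad)):
        state["m"][j] = b1 * state["m"][j] + (1 - b1) * g
        state["v"][j] = b2 * state["v"][j] + (1 - b2) * g * g
        m_hat = state["m"][j] / (1 - b1 ** t)  # bias-corrected first moment
        v_hat = state["v"][j] / (1 - b2 ** t)  # bias-corrected second moment
        new_theta.append(p - lr * (m_hat / (math.sqrt(v_hat) + eps) + wd * p))
    return new_theta

def nesterov_step(theta, delta, buf, lr=0.7, mu=0.9):
    """Outer update: SGD with Nesterov momentum applied to the averaged outer gradient."""
    new_buf = [mu * b + d for b, d in zip(buf, delta)]
    new_theta = [p - lr * (d + mu * b) for p, d, b in zip(theta, delta, new_buf)]
    return new_theta, new_buf

def diloco(centers, theta0, T=20, H=100):
    """Toy DiLoCo: worker i minimizes 0.5 * ||theta - centers[i]||^2 on its own shard."""
    k, dim = len(centers), len(theta0)
    theta = list(theta0)   # shared copy of the parameters
    buf = [0.0] * dim      # outer momentum buffer
    # Assumption: each worker keeps its own inner optimizer state across outer steps.
    states = [{"t": 0, "m": [0.0] * dim, "v": [0.0] * dim} for _ in range(k)]
    for _ in range(T):                        # outer steps
        replicas = []
        for i in range(k):                    # workers run independently, no communication
            local = list(theta)               # re-dispatch the shared parameters
            for _ in range(H):                # inner steps
                grad = [p - c for p, c in zip(local, centers[i])]
                local = adamw_step(local, grad, states[i])
            replicas.append(local)
        # Outer gradient: average parameter displacement over the k workers.
        delta = [sum(theta[j] - r[j] for r in replicas) / k for j in range(dim)]
        theta, buf = nesterov_step(theta, delta, buf)
    return theta

if __name__ == "__main__":
    # Four workers with different optima stand in for non-i.i.d. shards.
    toy_centers = [[1.0, 0.0], [-1.0, 2.0], [3.0, 1.0], [1.0, 1.0]]
    print(diloco(toy_centers, [0.0, 0.0]))
```

In this toy setting the shared parameters should settle near the mean of the workers' optima, even though workers only exchange information once per outer step.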

Specifically, the inner optimizer ($\texttt{InnerOpt}$) is AdamW and the outer optimizer ($\texttt{OuterOpt}$) is Nesterov momentum. When $\texttt{OuterOpt}$ is SGD, DiLoCo is equivalent to classical Federated Averaging. If the total number of outer optimization steps $T$ is further set to 1, then DiLoCo reduces to "souping". Finally, if the number of inner optimization steps $H$ is set to 1 and $\texttt{InnerOpt}$ is SGD, DiLoCo is equivalent to large-batch training with data-parallelism.
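
The reduction to Federated Averaging can be made explicit with a short derivation. The notation here is an assumption chosen for illustration: $\theta^{(t-1)}$ is the shared parameter copy before outer step $t$, $\theta_i^{(t)}$ is worker $i$'s replica after its inner steps, $k$ is the number of workers, and $\eta$ is the outer learning rate. With plain SGD as the outer optimizer:

$$
\Delta^{(t)} = \frac{1}{k}\sum_{i=1}^{k}\left(\theta^{(t-1)} - \theta_i^{(t)}\right),
\qquad
\theta^{(t)} = \theta^{(t-1)} - \eta\,\Delta^{(t)}
\;\overset{\eta = 1}{=}\; \frac{1}{k}\sum_{i=1}^{k}\theta_i^{(t)}.
$$

With $\eta = 1$ the outer update is therefore exactly parameter averaging of the replicas. Replacing SGD with Nesterov momentum keeps the same outer gradient $\Delta^{(t)}$ but adds a momentum term to the update.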

The paper highlights that DiLoCo can be interpreted as a data parallelism method requiring very little communication, scaling to workers that are poorly connected, such as those in distant geographic regions.

The paper presents an empirical validation of DiLoCo on the C4 dataset. Three model sizes were considered, all decoder-only transformers adapted from the Chinchilla architecture. Experiments were conducted in both i.i.d. and non-i.i.d. settings. By default, training experiments start from a transformer LLM pretrained for $24{,}000$ steps on the same training set. A sequence length of $1{,}024$ tokens and a batch size of $512$ were used.

The performance of DiLoCo (with $k = 8$ replicas in the non-i.i.d. data setting) was evaluated with each worker performing $T = 128$ times $H = 500$ inner steps ($64{,}000$ steps in total), starting from a model pretrained for $24{,}000$ steps. This setup was compared against four baselines:

- A model trained from scratch for $88{,}000$ steps.

- A model pretrained for $24{,}000$ steps and finetuned for an additional $64{,}000$ steps.

- A model pretrained for $24{,}000$ steps and finetuned with an $8\times$ larger batch size.

- A model trained with the standard batch size for $8\times$ the number of updates.

The trade-offs between these baselines and DiLoCo were compared with respect to communication cost, training time, and the amount of compute used. The results indicated that DiLoCo does not increase training time, communicates less than the second baseline, and achieves better generalization performance.
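
The communication saving can be illustrated with simple arithmetic. The sketch below counts synchronization rounds only (not bytes transferred) and compares a worker that synchronizes every step against one that synchronizes once per block of inner steps. The function name `communication_rounds` is hypothetical, and the values $N = 64{,}000$ total inner steps and $H = 500$ inner steps per round are taken here as assumptions about the experimental setup.

```python
def communication_rounds(total_inner_steps: int, steps_between_syncs: int) -> int:
    """Number of synchronization rounds when syncing once every `steps_between_syncs` steps."""
    if steps_between_syncs <= 0:
        raise ValueError("steps_between_syncs must be positive")
    if total_inner_steps % steps_between_syncs != 0:
        raise ValueError("total steps must be a multiple of the sync interval")
    return total_inner_steps // steps_between_syncs

N = 64_000  # assumed total number of inner steps per worker
H = 500     # assumed number of inner steps between synchronizations

diloco_rounds = communication_rounds(N, H)         # one sync per outer step
data_parallel_rounds = communication_rounds(N, 1)  # one sync per training step
reduction = data_parallel_rounds // diloco_rounds

print(f"DiLoCo rounds: {diloco_rounds}")
print(f"Data-parallel rounds: {data_parallel_rounds}")
print(f"Reduction factor: {reduction}x")
```

Under these assumptions the reduction factor equals $H$, since every outer step replaces $H$ per-step synchronizations with a single exchange.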

Extensive ablations were performed to understand DiLoCo's capabilities and limitations.

- Number of Pretraining Steps: The impact of the number of pretraining steps on final generalization performance in a non-i.i.d. data regime was examined. The number of pretraining steps was varied, and it was observed that starting DiLoCo before 24k steps achieves a similar final perplexity, demonstrating the robustness of the approach. Performance was not degraded even when starting from a randomly initialized network.

- Communication Frequency: The communication frequency was varied for a 150M transformer in the non-i.i.d. data regime, from $H = 50$ steps to $H = 2000$ steps. More frequent communication generally improved generalization performance. Communicating more frequently than every $H = 500$ steps led to diminishing returns, and performance degraded only mildly up to $H = 1000$ steps. Based on these considerations, $H = 500$ was chosen as a trade-off between generalization performance and communication cost.

- i.i.d. vs non-i.i.d. data regimes: The effect of different data distributions on the convergence of DiLoCo was assessed. The non-i.i.d. setting was created by clustering the entire training set using $k$-Means on the pretrained model's last layer features. DiLoCo with $k = 8$ workers/shards was compared in non-i.i.d. and i.i.d. settings. Despite faster early convergence in the i.i.d. setting, the final generalization performance was comparable, demonstrating DiLoCo's robustness.

- Number of replicas: The impact of the number of replicas/clusters was investigated. Increasing the number of replicas improved generalization performance, but with diminishing returns beyond 8 workers. This applied to both i.i.d. and non-i.i.d. settings.

- Model size: Models with 60, 150, and 400 million parameters were trained in the non-i.i.d. data setting, with all workers starting from a model pretrained for $24{,}000$ steps. Performance improved monotonically as the model size increased.

- Outer Optimizers: Various outer optimizers were tested, including SGD, Adam, and Nesterov momentum. Nesterov momentum performed best. The setting with an outer learning rate of $0.7$ and an outer momentum of $0.9$ was found to be very robust.

- Adaptive compute pool: The performance of DiLoCo was explored when the amount of compute varied throughout training, simulating scenarios with preemptible machines or collaborative systems. The amount of compute was varied by changing the number of replicas used in an i.i.d. setting. The determining factor for the model's generalization ability was the total amount of compute given to DiLoCo, with robustness to how the budget was spread over time.

- Asynchronous Communication: The inability to communicate, simulating worker reboots or network issues, was modeled by randomly dropping outer gradients with varying probabilities. Higher drop probabilities resulted in more unstable learning with transient spikes in perplexity. However, even with a 50% drop probability in the non-i.i.d. setting, the degradation of perplexity was only 2.1%.

- Accelerating a single worker: DiLoCo applied to a single replica/cluster ($k = 1$ but $H \ll N$) improved both convergence speed and final generalization performance at zero communication cost. Every $H = 500$ inner steps, the single outer gradient was computed and the parameters were updated locally using the outer optimizer.
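
The random dropping of outer gradients in the asynchronous-communication ablation above can be simulated along the following lines. This is a sketch under stated assumptions, not the paper's procedure: the function name `average_outer_gradients` is hypothetical, each worker's outer gradient is dropped independently with the same probability, the average is taken over the surviving workers only, and the outer update is skipped (signalled by `None`) when no gradient arrives.

```python
import random

def average_outer_gradients(outer_grads, drop_prob, rng):
    """Average the outer gradients that survive independent random drops.

    outer_grads: list of per-worker outer gradients (lists of floats).
    drop_prob:   probability that a given worker's gradient is lost.
    rng:         a random.Random instance, passed in for reproducibility.
    Returns the element-wise mean over surviving workers, or None if all were dropped.
    """
    kept = [g for g in outer_grads if rng.random() >= drop_prob]
    if not kept:
        return None  # assumption: no outer update happens this round
    dim = len(kept[0])
    return [sum(g[j] for g in kept) / len(kept) for j in range(dim)]

if __name__ == "__main__":
    rng = random.Random(0)
    grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
    for prob in (0.0, 0.1, 0.5):
        print(prob, average_outer_gradients(grads, prob, rng))
```

Averaging over survivors keeps the scale of the outer gradient comparable across rounds; other choices, such as dividing by the full worker count, would shrink the update whenever gradients are lost.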

The paper also discusses related work in distributed learning, specifically local SGD and federated learning, and linear mode connectivity. It contrasts DiLoCo with existing approaches, highlighting its unique combination of techniques and its ability to scale to larger models and more diverse settings.

The paper concludes by outlining its limitations and potential avenues for future research. These include:

- Evaluating DiLoCo on other tasks and architectures.

- Scaling DiLoCo to models with billions of parameters.

- Extending DiLoCo to asynchronous settings with heterogeneous workers.

- Improving the algorithm to better leverage additional compute.

- Balancing wall-clock time efficiency with compute and data efficiency.