Summary of "In-context Learning Generalizes, But Not Always Robustly: The Case of Syntax"

The paper "In-context Learning Generalizes, But Not Always Robustly: The Case of Syntax" critically examines the robustness of in-context learning (ICL) in large language models (LLMs), focusing on their ability to handle syntactic transformations. In ICL, the model is given labeled examples in its input context and must perform the task without any weight updates. The paper asks whether LLMs guided this way infer the underlying structure of the task, or whether they rely on superficial heuristics that break down on out-of-distribution examples.
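
To make the contrast between following a task's structure and relying on a superficial heuristic concrete, here is a minimal sketch of question formation in its classic framing. This framing is assumed here, not taken from this summary: a model that has learned a linear heuristic fronts the first auxiliary in the string, while one that has learned the hierarchical rule fronts the main-clause auxiliary. The toy vocabulary, the `Declarative` type, and the hand-annotated `main_aux` index are all hypothetical; a real implementation would need a parser to locate the main clause.

```python
from __future__ import annotations

from dataclasses import dataclass

# Toy auxiliary inventory: an assumption for this sketch, not the paper's lexicon.
AUXILIARIES = {"can", "will"}


@dataclass(frozen=True)
class Declarative:
    tokens: tuple[str, ...]  # lowercase words, no final punctuation
    main_aux: int            # index of the main-clause auxiliary (hypothetical hand annotation)


def _front(tokens: tuple[str, ...], i: int) -> str:
    """Move the token at position i to the front and append a question mark."""
    rest = tokens[:i] + tokens[i + 1:]
    return " ".join((tokens[i],) + rest) + "?"


def move_first_aux(sent: Declarative) -> str:
    """Linear heuristic: front whichever auxiliary appears first in the string."""
    i = next(k for k, w in enumerate(sent.tokens) if w in AUXILIARIES)
    return _front(sent.tokens, i)


def move_main_aux(sent: Declarative) -> str:
    """Hierarchical rule: front the auxiliary of the main clause."""
    return _front(sent.tokens, sent.main_aux)


# The relative clause follows the main auxiliary, so both rules agree.
id_example = Declarative(
    ("the", "newt", "will", "see", "the", "yak", "that", "can", "swim"), main_aux=2
)
# A relative clause on the subject puts another auxiliary first, so the rules diverge.
ood_example = Declarative(
    ("the", "newt", "that", "can", "swim", "will", "sleep"), main_aux=5
)

print(move_first_aux(id_example))   # will the newt see the yak that can swim?
print(move_main_aux(id_example))    # will the newt see the yak that can swim?
print(move_first_aux(ood_example))  # can the newt that swim will sleep?
print(move_main_aux(ood_example))   # will the newt that can swim sleep?
```

In this sketch, examples shaped like `id_example` cannot tell the two rules apart; only examples shaped like `ood_example` reveal which rule a model has actually inferred.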

Key Contributions

- Investigation of Syntactic Generalization: The paper tests how far LLMs generalize according to syntactic structure, using syntactic transformation tasks. These tasks require recognizing and applying syntactic rules to reorder or reformulate sentences, rather than relying solely on positional cues such as word order.

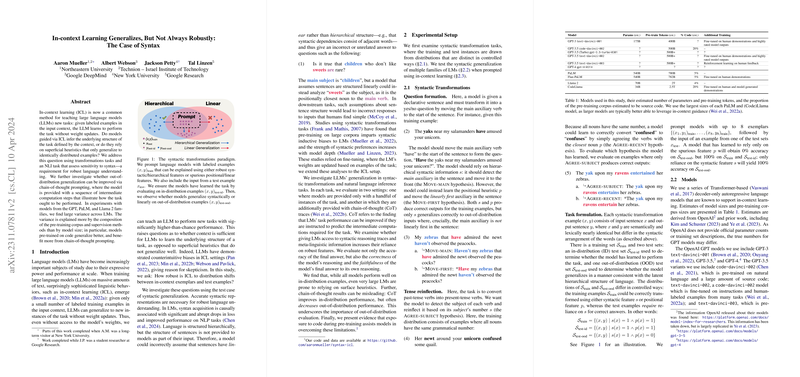

- Experimental Framework: The authors ran experiments on models from the GPT, PaLM, and Llama 2 families, focusing on two syntactic transformations: question formation and tense reinflection. The experiments assessed the models' ability to generalize both to in-distribution (ID) and to out-of-distribution (OOD) examples.

- Analysis of Model Variability: A striking finding is the large variance in performance across models on the syntactic tasks. The authors attribute this variance more to the composition of the pre-training data and the supervision method than to model size. Notably, models pre-trained on code generalize better in OOD settings.

- Chain-of-Thought Prompting: The paper examines whether chain-of-thought (CoT) prompting, in which the in-context examples include intermediate computation steps showing how the task should be performed, improves the generalization of LLMs. CoT can improve in-distribution performance, but its effect on OOD generalization varies widely across models.

- Impact of Code Pre-training: The results suggest that pre-training on code benefits syntactic understanding and generalization; the GPT-3.5 model code-davinci-002 stands out for its superior performance under ICL.

- Effects of RLHF: The paper observes that reinforcement learning from human feedback (RLHF) can sometimes impair OOD generalization, even where it helps on certain in-distribution tasks, which suggests that its effect on model robustness is nuanced.
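
The evaluation setup summarized in the list above (few-shot prompts, optional chain-of-thought steps, separate ID and OOD test sets) can be sketched as follows. The `Input:`/`Reasoning:`/`Output:` template, the exact-match metric, the toy data, and all function names are assumptions for illustration, not the authors' code; `first_aux_stub` is a hypothetical stand-in for a call to an LLM, hard-wired to apply the linear "front the first auxiliary" heuristic.

```python
from typing import Callable, Optional, Sequence, Tuple

Example = Tuple[str, str]      # (declarative input, gold question)
Model = Callable[[str], str]   # maps a prompt to a text completion

AUXILIARIES = {"can", "will"}  # toy inventory used only by the stub model


def build_prompt(exemplars: Sequence[Example], query: str,
                 cot_steps: Optional[Sequence[str]] = None) -> str:
    """Assemble a few-shot prompt (hypothetical template). With cot_steps,
    each exemplar shows intermediate reasoning before its answer."""
    if cot_steps is not None and len(cot_steps) != len(exemplars):
        raise ValueError("need one reasoning string per exemplar")
    blocks = []
    for i, (src, tgt) in enumerate(exemplars):
        reasoning = f"Reasoning: {cot_steps[i]}\n" if cot_steps is not None else ""
        blocks.append(f"Input: {src}\n{reasoning}Output: {tgt}\n")
    cue = "Reasoning:" if cot_steps is not None else "Output:"
    blocks.append(f"Input: {query}\n{cue}")
    return "\n".join(blocks)


def extract_answer(completion: str) -> str:
    """Take whatever follows the last 'Output:' marker in the completion."""
    return completion.rsplit("Output:", 1)[-1].strip()


def accuracy(model: Model, exemplars: Sequence[Example],
             test_set: Sequence[Example],
             cot_steps: Optional[Sequence[str]] = None) -> float:
    """Exact-match accuracy (an assumed metric) of the model on test_set."""
    correct = sum(
        extract_answer(model(build_prompt(exemplars, src, cot_steps))) == gold
        for src, gold in test_set
    )
    return correct / len(test_set)


def first_aux_stub(prompt: str) -> str:
    """Hypothetical stand-in for an LLM that learned only the linear heuristic:
    it fronts the first auxiliary of the final query and ignores any reasoning cue."""
    query = prompt.rsplit("Input: ", 1)[1].split("\n", 1)[0]
    words = query.split()
    i = next(k for k, w in enumerate(words) if w in AUXILIARIES)
    return "Output: " + " ".join([words[i]] + words[:i] + words[i + 1:]) + "?"


exemplars = [
    ("the newt will sleep", "will the newt sleep?"),
    ("my yak can see the newt that will swim", "can my yak see the newt that will swim?"),
]
steps = ["The main auxiliary is 'will'; move it to the front.",
         "The main auxiliary is 'can'; move it to the front."]
id_test = [("the yak will see the newt that can swim", "will the yak see the newt that can swim?")]
ood_test = [("the newt that can swim will sleep", "will the newt that can swim sleep?")]

print(accuracy(first_aux_stub, exemplars, id_test))          # 1.0
print(accuracy(first_aux_stub, exemplars, ood_test))         # 0.0
print(accuracy(first_aux_stub, exemplars, ood_test, steps))  # 0.0
```

Because the stub never looks beyond linear order, it scores perfectly on the ID example and fails the OOD one, with or without reasoning steps in the prompt. Replacing `first_aux_stub` with a real model call is what would turn this sketch into an actual measurement of the ID/OOD gap.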

Implications and Future Directions

The findings of this paper have important implications for the development and application of LLMs. Some models' reliance on superficial heuristics points to possible changes in model architecture or training regimens that would strengthen syntactic generalization. The benefits of code pre-training suggest that the composition of pre-training data, and not only model size, is a lever for improving robustness. Finally, the mixed effects of CoT and RLHF call for careful consideration before relying on these techniques to optimize LLMs.

Future work could expand on this research by exploring the causal mechanisms underlying the observed effects of code pre-training and RLHF on model performance. Moreover, extending the investigation to other syntactic phenomena and conducting ablation studies on training data could provide deeper insights into the inherent biases and limitations of LLMs when guided by ICL.

In summary, the paper shows that while LLMs display remarkable capabilities across a range of NLP tasks, their robustness on syntax-sensitive tasks remains uneven, which motivates further investigation into training paradigms and prompting strategies that improve generalization.