Black-Box Prompt Optimization: Aligning LLMs Without Training

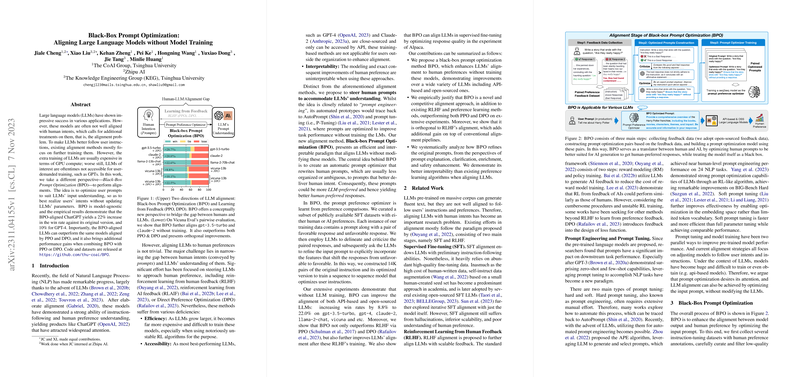

The paper presents Black-Box Prompt Optimization (BPO), an approach to aligning LLMs with human intent by optimizing the prompt rather than the model. It bypasses retraining, which is resource-intensive and often impossible for models that are only accessible through an API, and offers a pragmatic way to make LLMs follow user instructions better without altering their parameters.

Key Contributions

- Model-Agnostic Prompt Optimization: BPO capitalizes on the LLMs' existing capabilities by rewriting the input prompts themselves. Unlike traditional alignment techniques such as Reinforcement Learning from Human Feedback (RLHF), which require further training of the target model, BPO optimizes prompts so that they convey user intent to the model more clearly.

- Empirical Success: The paper reports that BPO significantly improves model outputs, with a 22% increase in win rate for ChatGPT and a 10% increase for GPT-4. This indicates that prompt optimization can improve response alignment without additional model training.

- Comparative Superiority: The paper reports that BPO surpasses established alignment methods such as Proximal Policy Optimization (PPO) and Direct Preference Optimization (DPO), and that it can be combined with these methods for additional gains, which highlights its versatility.

- Transparent and Efficient: The approach is more interpretable than existing methods because the change is visible in the prompt itself: the original and optimized prompts can be compared directly, along with the responses they produce. It also avoids the high costs and complexities associated with model retraining.
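The workflow described in the list above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: `bpo_generate`, `win_rate`, `toy_rewrite`, and `toy_llm` are hypothetical names, the rewriter is a trivial string function standing in for a real prompt optimizer, and the win-rate helper uses a simple pairwise definition that may differ from the paper's evaluation protocol.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical type aliases: any text-to-text callable will do.
Rewriter = Callable[[str], str]     # stands in for the prompt optimizer
BlackBoxLLM = Callable[[str], str]  # stands in for an API-only model


def bpo_generate(prompt: str, rewrite: Rewriter, llm: BlackBoxLLM) -> Tuple[str, str]:
    """Rewrite the prompt, then query the unchanged model with it.

    Returns both the optimized prompt and the response so the
    before/after prompts can be inspected side by side.
    """
    optimized = rewrite(prompt)
    return optimized, llm(optimized)


def win_rate(judgments: Sequence[str]) -> float:
    """Fraction of pairwise comparisons labelled 'win' (assumed definition)."""
    if not judgments:
        raise ValueError("no judgments to score")
    return sum(j == "win" for j in judgments) / len(judgments)


# Toy stand-ins so the sketch runs without any external service.
def toy_rewrite(prompt: str) -> str:
    return prompt.strip() + " Explain step by step and state any assumptions."


def toy_llm(prompt: str) -> str:
    return f"[response to: {prompt}]"


if __name__ == "__main__":
    optimized, response = bpo_generate("  Sort a list. ", toy_rewrite, toy_llm)
    print(optimized)
    print(response)
    print(win_rate(["win", "loss", "win", "tie"]))
```

Because the model is passed in as a plain callable, the same wrapper applies to any LLM, including one that has already been aligned with PPO or DPO; only the prompt changes, never the model's parameters.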

Practical Implications

BPO provides an effective alternative for developers and researchers working with black-box LLMs. It enables alignment with user preferences even when a model such as GPT-4 is proprietary, which extends alignment work beyond the large organizations that control model weights. Moreover, its low cost in compute and time makes it a viable option for rapid deployment in production environments.

Theoretical Implications and Future Directions

From a theoretical standpoint, BPO challenges the current paradigm of model-centric alignment strategies by shifting the focus to input optimization. This opens avenues for research on optimizing prompts in various contexts, such as multilingual and domain-specific applications. Future work may investigate integrating BPO with other emerging prompt engineering techniques, including iterative prompt optimization that preserves coherence and adherence to user intent.

Conclusion

The paper advances LLM alignment with a practical, resource-efficient method for bridging the gap between user intent and model response. BPO offers a way to improve model usability and performance without the burden of retraining, and gives AI practitioners and researchers a tool they can apply to models they cannot modify.