Evaluating Theory of Mind in LLMs with FANToM

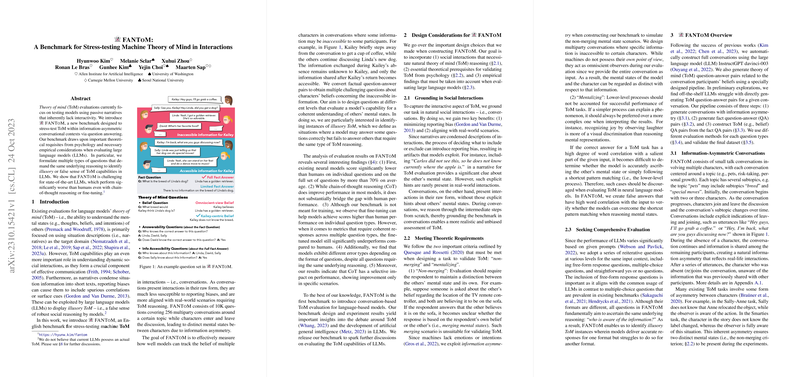

The paper "FANToM: A Benchmark for Stress-testing Machine Theory of Mind in Interactions" introduces a benchmark for evaluating Theory of Mind (ToM) capabilities in LLMs in interactive conversational contexts. In contrast to existing ToM evaluation methods, which predominantly use passive narratives, FANToM is designed to assess ToM reasoning in real-time multiparty conversations characterized by information asymmetry.

ToM in this context refers to the ability to understand and infer the mental states of others, which is a critical component for effective communication. The benchmark evaluates models' proficiency in tracking distinct mental states of characters engaged in dynamic discussions and aims to identify any illusory ToM, i.e., a false sense of robust social reasoning exhibited by these models.

Design and Implementation of FANToM

FANToM is constructed to mitigate the shortcomings of narrative-based ToM evaluations, which often include unintentional biases and spurious correlations that LLMs can exploit. The benchmark comprises 256 conversations with 10,000 questions, each designed to probe the models' understanding of characters' beliefs and mental states. Characters enter and leave these conversations, so participants have access to different information and therefore hold distinct mental states.
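
The information asymmetry described above can be illustrated with a small sketch. This is not the benchmark's own code: the `Event` class, the `track_knowledge` function, the character names, and the fact labels are all hypothetical, and the sketch assumes that a character learns a fact only if they are present when it is said.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Event:
    """One step in a conversation: a character joins, leaves, or speaks."""
    kind: str                   # "join", "leave", or "say"
    character: str
    fact: Optional[str] = None  # hypothetical label for what a "say" event reveals


def track_knowledge(events):
    """Map each character to the set of facts they were present to hear."""
    present = set()
    knowledge = {}
    for event in events:
        knowledge.setdefault(event.character, set())
        if event.kind == "join":
            present.add(event.character)
        elif event.kind == "leave":
            present.discard(event.character)
        elif event.kind == "say" and event.fact is not None:
            # The speaker and everyone currently present learn the fact;
            # absent characters do not, which creates the asymmetry.
            for listener in present | {event.character}:
                knowledge[listener].add(event.fact)
    return knowledge


# Hypothetical conversation: Carol steps out and misses one piece of information.
events = [
    Event("join", "Alice"),
    Event("join", "Bob"),
    Event("join", "Carol"),
    Event("leave", "Carol"),
    Event("say", "Alice", fact="dog_breed"),
    Event("join", "Carol"),
    Event("say", "Carol", fact="weekend_plan"),
]
knowledge = track_knowledge(events)
```

In this toy conversation, Alice and Bob end up knowing both facts while Carol knows only the one shared after she returned. That divergence is the kind of mental-state difference the questions probe.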

The benchmark includes six types of questions. They are derived from factual question-answer pairs, which are transformed into belief question-answer pairs, and they come in both free-form and multiple-choice formats to assess models' reasoning capabilities comprehensively. The evaluation focuses on:

- Belief Questions: Require models to infer what a specific character believes about a piece of information.

- Answerability and InfoAccess Questions: Test models' ability to determine which characters possess knowledge of certain information.
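
As a rough illustration of the two awareness-oriented question types, the sketch below derives gold answers from a map of who knows what. The function names, the `knowledge` dictionary, and the exact-set scoring rule are assumptions made for this example, not the benchmark's published implementation.

```python
def answerability_list(knowledge, fact):
    """List-style question: which characters could answer a question about `fact`?"""
    return sorted(name for name, facts in knowledge.items() if fact in facts)


def info_access(knowledge, character, fact):
    """Yes/no question: does `character` have access to `fact`?"""
    return fact in knowledge.get(character, set())


def score_list_answer(predicted, gold):
    """Assumed scoring rule: correct only if the prediction names exactly
    the aware characters, with none missing and none extra."""
    return set(predicted) == set(gold)


# Hypothetical state: Carol was absent when the dog's breed was mentioned.
knowledge = {
    "Alice": {"dog_breed"},
    "Bob": {"dog_breed"},
    "Carol": set(),
}
gold = answerability_list(knowledge, "dog_breed")
carol_knows = info_access(knowledge, "Carol", "dog_breed")
exact_match = score_list_answer(["Bob", "Alice"], gold)
partial_match = score_list_answer(["Alice"], gold)
```

Under this assumed rule, naming only some of the aware characters counts as wrong, so a model has to track every participant rather than guess the most salient one.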

Experimental Findings

The paper's results reveal that current state-of-the-art LLMs significantly underperform compared to humans in ToM tasks within FANToM. The key findings include:

- Performance Gap: Models fall well short of human performance on ToM reasoning tasks, scoring more than 70% lower than human participants on average.

- Chain-of-Thought Reasoning: Chain-of-thought (CoT) reasoning provides some performance improvements, but it does not close the gap with human performance. Specific error patterns suggest that CoT selectively improves reasoning about characters who are unaware of certain information but does not substantially help in identifying those who are aware.

- Fine-tuning: Although fine-tuning models on FANToM-related tasks improves scores on individual question types, models still do not achieve consistency across multiple ToM question types, indicating that coherent ToM reasoning remains a challenge.

- Order of ToM Beliefs: Models generally perform better on questions involving second-order beliefs than on first-order ones. Among second-order belief questions, cyclic ones are easier for models than acyclic ones involving multiple characters.

- Information Overload: Models provided with the full conversation context perform worse than those with access to the short conversation context, highlighting the additional difficulty in identifying relevant information from extended texts.
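
The fine-tuning finding above distinguishes accuracy on individual question types from consistency across types. One way to make that distinction concrete is sketched below, under the assumption that results are grouped by the piece of information being asked about and that a group counts only if every question type is answered correctly. The record layout and function names are hypothetical, and the paper's exact aggregate metric may differ.

```python
from collections import defaultdict


def per_type_accuracy(results):
    """Accuracy computed separately for each question type."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for _fact_id, question_type, is_correct in results:
        totals[question_type] += 1
        correct[question_type] += int(is_correct)
    return {qtype: correct[qtype] / totals[qtype] for qtype in totals}


def all_correct_rate(results):
    """Fraction of facts for which every associated question was answered correctly."""
    by_fact = defaultdict(list)
    for fact_id, _question_type, is_correct in results:
        by_fact[fact_id].append(is_correct)
    if not by_fact:
        return 0.0
    return sum(all(answers) for answers in by_fact.values()) / len(by_fact)


# Hypothetical results: (fact id, question type, answered correctly?)
results = [
    ("f1", "belief", True),
    ("f1", "answerability", True),
    ("f1", "info_access", True),
    ("f2", "belief", True),
    ("f2", "answerability", False),
    ("f2", "info_access", True),
]
by_type = per_type_accuracy(results)
strict = all_correct_rate(results)
```

In this toy data the model is right on five of six questions, yet only one of the two facts is handled consistently. A strict aggregate of this kind can stay low even when per-type scores improve.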

Implications and Future Directions

The introduction of FANToM has significant implications for the study and development of artificial general intelligence (AGI) in LLMs. The results indicate that current LLMs do not possess coherent and robust ToM capabilities, contrary to some claims in public and academic discourse. This benchmark highlights the need for more sophisticated models capable of nuanced social reasoning, which is crucial for applications involving human-AI interaction.

Future research could explore integrating pragmatic reasoning, multimodal information, and belief graphs into LLMs to enhance ToM capabilities. Another viable direction is to address the reporting biases that LLMs exhibit by leveraging interactive learning and grounding reasoning in real-world scenarios. FANToM sets a high bar for what is required to robustly assess and improve ToM in LLMs, contributing to the long-term goal of achieving AGI.

Conclusion

FANToM represents a significant advancement in evaluating LLMs' understanding of human mental states in conversational interactions. By addressing the limitations of previous narrative-based evaluations and introducing a comprehensive set of challenging ToM tasks, FANToM provides a more realistic assessment of models' social reasoning capabilities. The insights from this benchmark highlight the current gaps and pave the way for future developments in AI equipped with true ToM reasoning. The dataset and benchmark are publicly available to encourage further research and development in this critical area.