Cross-Lingual Evaluation of LLMs for Healthcare: A Focused Analysis

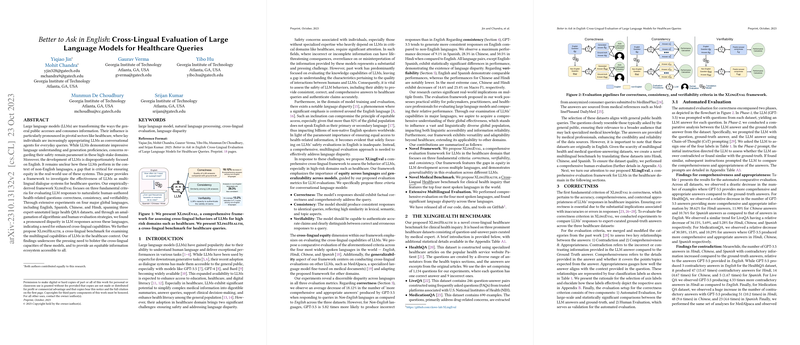

The paper "Better to Ask in English: Cross-Lingual Evaluation of Large Language Models for Healthcare Queries" investigates how well LLMs perform across languages when answering healthcare questions, a domain where misinformation can have serious consequences. The authors develop a framework named XLingEval to systematically evaluate LLMs as multilingual dialogue systems in the healthcare context, and they introduce XLingHealth as a cross-lingual benchmark. The analysis spans four widely spoken languages: English, Spanish, Chinese, and Hindi. Its aim is to expose language disparities and identify areas for improvement.

Key Contributions

- Framework and Metrics: The paper proposes the XLingEval framework, which assesses responses on three criteria critical for the safe deployment of LLMs in healthcare: correctness, consistency, and verifiability. This structured approach highlights where current LLMs fall short on health-related queries, particularly in non-English languages.

- Cross-Lingual Benchmark: XLingHealth is a new multilingual benchmark built from three healthcare question-answering datasets: HealthQA, LiveQA, and MedicationQA. Because its construction combines algorithmic and human evaluation, it is a useful resource for assessing the cross-lingual capabilities of LLMs in healthcare.

- Evaluation Results: Experimental results show a significant disparity in LLM response quality across languages. English generally yielded more comprehensive and consistent responses than the other languages. Notably, Hindi and Chinese exhibited the largest performance deficits on both correctness and consistency, suggesting underlying language biases in the models.

- Language Disparity Insights: The study sheds light on language disparity, namely that English responses are of disproportionately higher quality than responses in non-English languages. This gap underscores the need to train and evaluate LLMs on substantial, balanced multilingual data.
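To make the consistency criterion and the idea of a cross-language gap more concrete, here is a minimal Python sketch. It is not the metric used in XLingEval. It assumes that consistency can be approximated by the mean pairwise similarity between several answers an LLM gives to the same question, and it swaps in character n-gram Jaccard similarity as a crude, tokenizer-free proxy (splitting on whitespace would break down for Chinese, which does not put spaces between words). The function names `char_ngrams`, `jaccard`, `consistency`, and `relative_gap`, as well as the toy responses, are hypothetical.

```python
from itertools import combinations
from statistics import mean


def char_ngrams(text: str, n: int = 3) -> set[str]:
    """Character n-grams of lowercased, whitespace-normalized text.

    Hypothetical proxy: character n-grams need no word tokenizer, so the same
    code can be applied to English, Spanish, Chinese, and Hindi text.
    """
    norm = " ".join(text.lower().split())
    if len(norm) < n:
        return {norm} if norm else set()
    return {norm[i:i + n] for i in range(len(norm) - n + 1)}


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity of two sets; two empty sets count as identical."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def consistency(responses: list[str], n: int = 3) -> float:
    """Hypothetical consistency score: mean pairwise similarity of repeated answers."""
    grams = [char_ngrams(r, n) for r in responses]
    pairs = list(combinations(grams, 2))
    if not pairs:
        return 1.0  # a single response is trivially self-consistent
    return mean(jaccard(a, b) for a, b in pairs)


def relative_gap(scores: dict[str, float], reference: str = "en") -> dict[str, float]:
    """Each language's shortfall as a fraction of the reference language's score."""
    ref = scores[reference]
    if ref == 0:
        raise ValueError("reference score must be non-zero")
    return {lang: (ref - s) / ref for lang, s in scores.items() if lang != reference}


if __name__ == "__main__":
    # Made-up responses: three samples per language for one hypothetical question.
    samples = {
        "en": ["Rest and drink plenty of fluids.",
               "Rest and drink plenty of fluids.",
               "Drink plenty of fluids and rest."],
        "es": ["Descanse y beba muchos líquidos.",
               "Beba agua.",
               "Consulte a un médico."],
    }
    scores = {lang: consistency(resps) for lang, resps in samples.items()}
    print(scores)
    print(relative_gap(scores))
```

A real evaluation would likely replace the n-gram overlap with a semantic similarity measure and average over many questions per dataset. The relative gap then expresses each language's shortfall as a fraction of the English score, which is one simple way to quantify the kind of disparity described above.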

Implications of Research Findings

This research has substantial implications, both theoretical and practical, for AI deployment in healthcare. Theoretically, the paper exposes gaps in the current understanding of LLM behavior across languages, which may stimulate further research into equitable LLM development. Practically, it points to ways of improving LLM deployment protocols so that non-English-speaking users receive reliable healthcare information, emphasizing the need for more inclusive training data and comprehensive multilingual evaluation.

Speculations on Future Developments in AI

Given the pressing need this paper highlights for equitable access to healthcare information, future developments in AI may increasingly focus on reducing language disparities. We might see more sophisticated cross-lingual training methods or the integration of real-time translation features, potentially bridging current gaps. Additionally, frameworks such as XLingEval could inspire analogous evaluation frameworks in other high-stakes domains, such as finance or law, that face similar challenges.

Through its systematic evaluation framework, multilingual benchmark, and empirical findings, this paper gives researchers foundational insights and tools for advancing the multilingual capabilities of LLMs, paving the way for more inclusive and equitable AI technologies.