H2O Open Ecosystem for State-of-the-Art LLMs

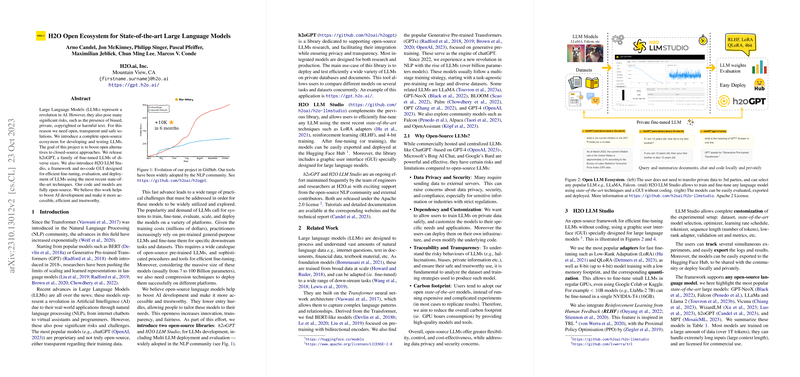

This paper presents an open-source ecosystem for developing, fine-tuning, evaluating, and deploying large language models (LLMs). It introduces h2oGPT, a family of fine-tuned LLMs, and H2O LLM Studio, a GUI-based framework for efficient LLM fine-tuning and deployment. The ecosystem aims to offer an open, accessible alternative to proprietary LLM systems, emphasizing transparency, privacy, and flexibility for practitioners who use these tools.

Overview

LLMs have advanced rapidly in recent years, building on foundational models such as BERT and GPT that reshaped natural language processing (NLP). As LLMs have moved into real-world products such as chatbots and virtual personal assistants, they have drawn scrutiny for their often proprietary nature and for risks such as privacy breaches and inherent biases.

The paper argues that open-source solutions are needed to address these limitations, since open models foster accessibility, customization, and transparency. In this context, the authors introduce two projects backed by H2O.ai: the h2oGPT library and H2O LLM Studio.

h2oGPT Library

The h2oGPT library supports a variety of pre-trained LLMs and is designed for straightforward integration, with an emphasis on privacy and transparency. It lets users efficiently deploy and test multiple models on private datasets and compare them across diverse NLP tasks. It therefore serves both research and production use cases, providing a platform for measuring performance and adapting models to domain-specific requirements.
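To make the model-comparison workflow concrete, here is a minimal Python sketch of side-by-side evaluation: every model answers the same prompts from a private dataset, and each is scored by exact match. This is not h2oGPT's API. The `compare_models` function, the model names, and the lambda stand-ins are hypothetical placeholders for models you would load locally, and exact match is just one simple metric chosen for illustration.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical model interface: a callable that maps a prompt to a completion.
# In practice each callable would wrap a locally hosted model.
ModelFn = Callable[[str], str]


def compare_models(
    models: Dict[str, ModelFn],
    dataset: List[Tuple[str, str]],
) -> Dict[str, float]:
    """Run every model on the same (prompt, reference) pairs and return
    the fraction of case-insensitive exact matches per model."""
    scores: Dict[str, float] = {}
    for name, generate in models.items():
        hits = sum(
            generate(prompt).strip().lower() == reference.strip().lower()
            for prompt, reference in dataset
        )
        scores[name] = hits / len(dataset) if dataset else 0.0
    return scores


if __name__ == "__main__":
    # Toy "private" dataset of (prompt, expected answer) pairs.
    data = [("Capital of France?", "Paris"), ("2 + 2 =", "4")]
    # Hypothetical stand-in models.
    models = {
        "model-a": lambda p: "Paris" if "France" in p else "4",
        "model-b": lambda p: "Paris",
    }
    for name, acc in sorted(compare_models(models, data).items()):
        print(f"{name}: {acc:.2f}")  # model-a: 1.00, then model-b: 0.50
```

Replacing the exact-match check with a task-specific metric is the natural next step; because both the data and the models stay local, the prompts never leave your machine.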

H2O LLM Studio

H2O LLM Studio is a no-code platform for fine-tuning LLMs with state-of-the-art techniques such as Low-Rank Adaptation (LoRA) adapters and reinforcement learning from human feedback (RLHF). It supports 8-bit and 4-bit model quantization, which helps fine-tuning fit within the resource constraints of consumer-grade hardware. Its GUI improves usability, which matters for researchers and developers who may lack extensive coding expertise.
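The core LoRA idea can be sketched in plain Python: the pretrained weight matrix W stays frozen, and only two small matrices, A (r × d_in) and B (d_out × r), are trained, so the layer computes W x + (alpha / r) · B A x. This illustrates the general technique only and is not H2O LLM Studio's implementation; `LoRALinear`, `matvec`, `lora_params`, and the 4096 × 4096 layer size are hypothetical choices made for the example.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters in A (rank x d_in) plus B (d_out x rank)."""
    return rank * d_in + d_out * rank


class LoRALinear:
    """Hypothetical LoRA layer: frozen W plus a scaled low-rank update B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # frozen: never updated during fine-tuning
        # A gets small random values; B starts at zero, so B @ A == 0 initially.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.weight, x)               # W x
        update = matvec(self.B, matvec(self.A, x))  # B (A x), never forms B @ A
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self) -> int:
        return lora_params(len(self.B), len(self.A[0]), len(self.A))


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], rank=1, alpha=2.0)
    print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]: B is zero, so output equals W x
    # Illustrative 4096 x 4096 projection with rank-8 adapters:
    print(lora_params(4096, 4096, 8), 4096 * 4096)  # 65536 16777216
```

Because B starts at zero, fine-tuning begins from exactly the base model's behavior, and the number of trainable parameters grows with r · (d_in + d_out) rather than d_in · d_out.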

The platform supports a range of recent LLMs, including LLaMA, Mistral, and Falcon, keeping pace with current research and community-driven models. This flexibility suggests it could have a considerable practical impact on AI deployment and experimentation in both industry and academia.

Implications and Future Directions

The paper highlights the importance of open-source LLMs for innovation and fairness and urges the AI community to use these models responsibly and ethically. h2oGPT and H2O LLM Studio lower the barriers to using LLMs and offer robust alternatives to proprietary counterparts by prioritizing user control, security, and environmental sustainability, including attention to carbon footprint.

Future enhancements proposed by the authors include advances in model quantization, long-context processing, and multilingual and multimodal capabilities, which would make the ecosystem more practical and adaptable for a growing range of applications.
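To illustrate what 8-bit and 4-bit quantization does, and why it matters on consumer-grade hardware, here is a sketch of symmetric absmax quantization plus a back-of-the-envelope estimate of weight memory. Absmax is one of the simplest schemes; 4-bit fine-tuning setups often use more elaborate formats (for example NF4, introduced with QLoRA), and nothing here reflects the specific method H2O LLM Studio uses. The function names and the 7-billion-parameter model size are hypothetical.

```python
from typing import List, Tuple


def quantize_absmax(weights: List[float], bits: int) -> Tuple[List[int], float]:
    """Symmetric absmax quantization of floats to signed `bits`-bit integers."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit
    absmax = max(abs(w) for w in weights)
    scale = absmax / qmax if absmax > 0 else 1.0
    # Round to the nearest integer level and clamp to [-qmax, qmax].
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map integer levels back to approximate float weights."""
    return [v * scale for v in q]


def weight_memory_gb(n_params: float, bits: int) -> float:
    """Memory for the weights alone (no activations, optimizer state, or cache)."""
    return n_params * bits / 8 / 1e9


if __name__ == "__main__":
    w = [0.5, -1.0, 0.25, 0.8]
    for bits in (8, 4):
        q, s = quantize_absmax(w, bits)
        err = max(abs(a - b) for a, b in zip(w, dequantize(q, s)))
        print(bits, q, f"max error {err:.4f}")
    # Hypothetical 7B-parameter model at different precisions.
    for bits in (16, 8, 4):
        print(f"7B params @ {bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
```

Fewer bits mean coarser integer levels and a larger rounding error, which is the trade-off that improved quantization methods try to reduce. The memory estimate covers weights only; activations and the attention key-value cache add more, and the cache grows with context length.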

In conclusion, this work addresses critical transparency and accessibility issues in the LLM field and marks meaningful progress toward open, collaborative AI development. These initiatives appear poised to influence both research and practical deployments, driving further innovation in how AI models are developed, deployed, and used ethically.