ReMax: Enhancing Reinforcement Learning from Human Feedback with Efficiency and Simplification

Introduction

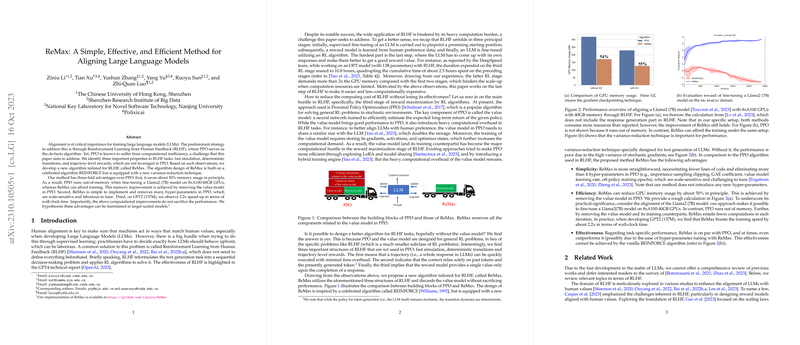

In NLP, aligning LLMs with human values and preferences is essential for many practical applications. Most current methods rely on Reinforcement Learning from Human Feedback (RLHF), with Proximal Policy Optimization (PPO) as the dominant optimizer. However, PPO's computational cost poses significant challenges, especially when fine-tuning LLMs. To address this, the paper proposes ReMax, an algorithm built on REINFORCE and augmented with a new variance-reduction technique. ReMax aims to simplify implementation, reduce memory consumption, and speed up training without sacrificing task performance.

Drawbacks of PPO in RLHF

The authors begin by identifying the limitations of PPO in the RLHF setting: computational inefficiency, complex hyper-parameter tuning, and excessive memory consumption. They then examine three distinctive properties of RLHF tasks (fast simulation, deterministic transitions, and trajectory-level rewards) and argue that PPO does not exploit them.
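The three properties can be made concrete with a toy rollout. The following is a minimal sketch, not the paper's code: the names `transition`, `rollout`, `toy_policy`, and `toy_reward_model` are hypothetical, and the toy policy is deterministic only to keep the example reproducible (a real LLM policy samples tokens).

```python
from typing import Callable, List, Tuple

State = Tuple[int, ...]  # prompt tokens followed by the tokens generated so far


def transition(state: State, token: int) -> State:
    """Deterministic transition: the next state is the old state plus the token."""
    return state + (token,)


def rollout(
    prompt: State,
    policy: Callable[[State], int],
    reward_model: Callable[[State], float],
    eos_token: int,
    max_new_tokens: int,
) -> Tuple[State, List[float]]:
    """Generate one response and return the final state and per-step rewards."""
    if max_new_tokens < 1:
        raise ValueError("max_new_tokens must be at least 1")
    state = prompt
    step_rewards: List[float] = []
    for _ in range(max_new_tokens):
        token = policy(state)
        state = transition(state, token)
        step_rewards.append(0.0)  # no intermediate reward during generation
        if token == eos_token:
            break
    # Trajectory-level reward: the whole response is scored once, at the end.
    step_rewards[-1] = reward_model(state)
    return state, step_rewards


# Hypothetical stand-ins for an LLM policy and a learned reward model.
def toy_policy(state: State) -> int:
    return len(state) % 4


def toy_reward_model(state: State) -> float:
    return float(len(state))


final_state, step_rewards = rollout(
    (7, 8), toy_policy, toy_reward_model, eos_token=3, max_new_tokens=5
)
print(final_state, step_rewards)
```

Simulation is fast in the sense that a rollout only requires calling the policy, with no external environment. Every reward except the last is zero, so a learned per-step value estimate has little intermediate signal to work with.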

The ReMax Algorithm

ReMax is introduced as a solution that exploits these properties of RLHF tasks. It keeps the REINFORCE framework and adds a variance-reduction technique tailored to LLMs: the reward of the greedy (argmax) response is subtracted from the reward of the sampled response as a baseline. This notably reduces the variance of the stochastic gradients, the critical factor that previously kept simple methods like REINFORCE from performing well in RLHF tasks.
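The effect of a greedy-response baseline can be illustrated on a one-step softmax policy, where the mean and variance of the REINFORCE estimator can be computed exactly by enumerating actions. This is a minimal sketch under stated assumptions, not the authors' implementation: the logits and rewards are made-up toy values, and all function names are hypothetical. Each action stands in for a full response, and the gradient weight is the sampled response's reward minus the greedy response's reward.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def grad_log_prob(probs: List[float], action: int) -> List[float]:
    """Gradient of log softmax(logits)[action] w.r.t. the logits: onehot - probs."""
    return [(1.0 if i == action else 0.0) - p for i, p in enumerate(probs)]


def estimator_stats(
    logits: List[float], rewards: List[float], baseline: float
) -> Tuple[List[float], float]:
    """Exact mean and total variance of (reward - baseline) * grad log pi(action)."""
    probs = softmax(logits)
    k = len(logits)
    grads = [
        [(rewards[a] - baseline) * g for g in grad_log_prob(probs, a)]
        for a in range(k)
    ]
    mean = [sum(probs[a] * grads[a][i] for a in range(k)) for i in range(k)]
    variance = sum(
        probs[a] * sum((grads[a][i] - mean[i]) ** 2 for i in range(k))
        for a in range(k)
    )
    return mean, variance


# Toy values (assumptions): rewards share a large offset, as reward-model scores often do.
logits = [2.0, 0.5, -1.0]
rewards = [10.0, 9.0, 11.0]

# Greedy baseline: the reward of the highest-probability action.
greedy_action = max(range(len(logits)), key=lambda i: logits[i])
greedy_baseline = rewards[greedy_action]

mean_plain, var_plain = estimator_stats(logits, rewards, baseline=0.0)
mean_greedy, var_greedy = estimator_stats(logits, rewards, baseline=greedy_baseline)

print("same expected gradient:",
      all(abs(a - b) < 1e-9 for a, b in zip(mean_plain, mean_greedy)))
print("variance without baseline:", var_plain)
print("variance with greedy baseline:", var_greedy)
```

The baseline does not depend on the sampled action, so the expected gradient is unchanged while the variance drops in this example. The price is one extra greedy generation per prompt to obtain the baseline reward.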

Theoretical and Practical Advantages of ReMax

ReMax exhibits several theoretical and empirical advantages over PPO:

- Simplicity and Efficiency: ReMax is significantly simpler to implement and more efficient. It reduces GPU memory usage by approximately 50%, which allows training larger models or using larger batch sizes for higher throughput.

- Reduced Parameter Tuning: The simplification extends to configuration. ReMax eliminates the need to tune several of the hyper-parameters that PPO requires.

- Speed: Because it does not train a value model, as PPO does, ReMax considerably reduces wall-clock time per iteration.

- Task Performance: On task-specific performance metrics, ReMax matches or surpasses PPO, a result attributed partly to the simpler tuning process.
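A back-of-the-envelope estimate shows why dropping the value model can cut memory substantially. The sketch below rests on assumptions that are not from the paper: PPO keeps four equally sized models (trainable policy and value model, frozen reward and reference models), training uses mixed-precision Adam at a rule-of-thumb 16 bytes per trainable parameter, and activations, buffers, and offloading are ignored. The parameter count is hypothetical.

```python
# Assumed bytes per parameter (rule of thumb for mixed-precision Adam; not from the paper).
TRAINABLE_BYTES_PER_PARAM = 16  # fp16 weights (2) + fp16 grads (2) + fp32 master weights and Adam moments (12)
FROZEN_BYTES_PER_PARAM = 2      # fp16 weights only, used for inference


def model_state_bytes(num_params: int, trainable_models: int, frozen_models: int) -> int:
    """Memory for weights, gradients, and optimizer states; activations are ignored."""
    per_param = (
        trainable_models * TRAINABLE_BYTES_PER_PARAM
        + frozen_models * FROZEN_BYTES_PER_PARAM
    )
    return num_params * per_param


num_params = 7_000_000_000  # hypothetical model size, assumed equal for all models

# PPO (assumed setup): policy and value model are trained; reward and reference models are frozen.
ppo_bytes = model_state_bytes(num_params, trainable_models=2, frozen_models=2)
# ReMax (assumed setup): no value model, so only the policy is trained.
remax_bytes = model_state_bytes(num_params, trainable_models=1, frozen_models=2)

saving = 1 - remax_bytes / ppo_bytes
print(f"PPO:   {ppo_bytes / 1e9:.0f} GB of model states")
print(f"ReMax: {remax_bytes / 1e9:.0f} GB of model states")
print(f"Estimated saving: {saving:.0%}")
```

This is an estimate of model-state memory only, not a reproduction of the paper's measurement. Real savings depend on model sizes, parallelism strategy, and activation memory.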

Experimental Validation

Experimental results provide concrete evidence supporting ReMax’s superiority in aligning LLMs with human preferences. Using the DeepSpeed-Chat framework and the full-hh-rlhf dataset, ReMax demonstrated not only stability in training dynamics but also a significant speed-up compared to PPO. Additionally, response quality analyses across various metrics further validated ReMax's effectiveness. Notably, comparisons conducted on the AlpacaEval dataset, as judged by GPT-4, underscored ReMax's superior performance over existing baselines, including PPO and SFT.

Future Perspectives and Limitations

While ReMax is a step forward in the efficient alignment of LLMs through RLHF, it leaves room for further refinement. The method requires generating an additional response for gradient estimation, which is a potential area for optimization. Future research could explore improving the variance-reduction technique or extending ReMax to traditional NLP tasks beyond RLHF.

Conclusion

Through analysis and empirical validation, the paper presents ReMax as an efficient, simple, and effective method for aligning LLMs with human values through RLHF. Its design addresses the computational cost and complexity associated with PPO, which makes ReMax a promising candidate for future work on LLM alignment.