An In-Depth Analysis of InstructTODS for Task-Oriented Dialogue Systems

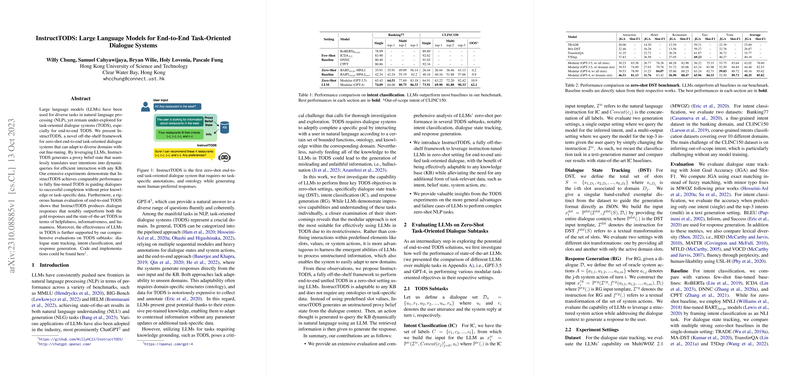

Task-oriented dialogue systems (TODS) have predominantly been built either as modular pipelines or as end-to-end models. Both approaches are hard to adapt to new domains because they rely on domain-specific annotated data and ontologies. Large language models (LLMs) such as GPT-3.5 and GPT-4 have shown broad capabilities across NLP tasks. Building on this, InstructTODS offers a novel zero-shot framework for using LLMs as end-to-end TODS, with no task-specific fine-tuning and no structured ontology.

Overview and Methodology

InstructTODS uses LLMs to generate dialogue responses that fulfill the user's task. Its central concept is the "proxy belief state," a free-form representation of what the user wants. The proxy belief state is translated into dynamic queries, which lets the system interact with an arbitrary knowledge base (KB). Because InstructTODS does not rely on domain-specific annotations or ontologies, it avoids the domain constraints of traditional TODS. Instead, it draws on the LLM's ability to interpret unstructured data and generate responses from it.
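The sketch below contrasts a conventional slot-based belief state with a free-text proxy belief state. It is illustrative only. The `ONTOLOGY` contents, the slot names and the `update_slot_state` helper are hypothetical. The proxy belief state is a hand-written string that stands in for what an LLM would generate from the dialogue.

```python
from typing import Dict, Set

# Hypothetical ontology for a conventional slot-based tracker: every slot and
# every allowed value must be declared in advance, per domain.
ONTOLOGY: Dict[str, Set[str]] = {
    "restaurant-food": {"indian", "italian", "chinese"},
    "restaurant-area": {"centre", "north", "south", "east", "west"},
}


def update_slot_state(state: Dict[str, str], slot: str, value: str) -> Dict[str, str]:
    """Return a new slot-value state, rejecting anything outside the ontology."""
    if slot not in ONTOLOGY:
        raise KeyError(f"slot {slot!r} is not defined in the ontology")
    if value not in ONTOLOGY[slot]:
        raise ValueError(f"value {value!r} is not allowed for {slot!r}")
    return {**state, slot: value}


state: Dict[str, str] = {}
state = update_slot_state(state, "restaurant-food", "indian")
state = update_slot_state(state, "restaurant-area", "centre")

# A request from a domain the ontology never covered cannot be tracked.
try:
    update_slot_state(state, "attraction-open-days", "monday")
    unsupported = False
except KeyError:
    unsupported = True

# A proxy belief state is free text (hand-written here, standing in for an
# LLM's summary of the dialogue), so the same request needs no schema change.
proxy_belief_state = (
    "The user wants an Indian restaurant in the centre and also asks "
    "whether a museum nearby is open on Mondays."
)

print(state)        # {'restaurant-food': 'indian', 'restaurant-area': 'centre'}
print(unsupported)  # True
```

The slot-based tracker cannot record the museum request until it is extended with new slots, new values and typically new annotated data. The free-text state can describe that request immediately. The trade-off is that a free-text state is harder to validate or query programmatically.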

The architecture of InstructTODS incorporates the following key components:

- Proxy Belief State: Captures the user's intent from the dialogue context without predefined slots or ontologies.

- Action Thought and KB Interaction: Turns the proxy belief state into dynamic, natural-language queries against a KB. This grounds the response in retrieved knowledge rather than in the LLM's parametric memory, which reduces hallucinations.

- End-to-End Response Generation: Using the outputs of the KB interaction, the LLM produces a response aligned with the user's goal and conversational intent.
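The three components above can be chained into a single dialogue turn. The following is a minimal sketch under stated assumptions, not the authors' implementation:

- `ScriptedLLM` is a hypothetical stand-in that replays canned replies instead of calling GPT-3.5 or GPT-4.
- The prompt wording is invented.
- `query_kb` is a simple word-overlap retriever. It stands in for the framework's KB interaction, whose exact mechanism this summary does not specify.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

Record = Dict[str, str]

# Hypothetical knowledge base: plain records with no shared schema or ontology.
KB: List[Record] = [
    {"name": "Golden Curry", "type": "restaurant", "food": "indian", "area": "centre"},
    {"name": "Pizza Hut City Centre", "type": "restaurant", "food": "italian", "area": "centre"},
    {"name": "Acorn Guest House", "type": "hotel", "area": "north", "parking": "yes"},
]


@dataclass
class ScriptedLLM:
    """Hypothetical LLM stand-in: returns canned replies in order and logs prompts."""
    replies: List[str]
    prompts: List[str] = field(default_factory=list)

    def __call__(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return self.replies[len(self.prompts) - 1]


def tokens(text: str) -> Set[str]:
    """Lowercase alphabetic words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def query_kb(query: str, kb: List[Record], top_k: int = 1) -> List[Record]:
    """Stand-in KB interaction: rank records by word overlap with the query."""
    q = tokens(query)
    scored = [(len(q & tokens(" ".join(r.values()))), r) for r in kb]
    scored = [(s, r) for s, r in scored if s > 0]  # drop records with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [r for _, r in scored[:top_k]]


def run_turn(llm: Callable[[str], str], history: List[str], kb: List[Record]) -> str:
    dialogue = "\n".join(history)
    # 1. Proxy belief state: a free-text summary of the user's goal, no slots.
    belief = llm(f"Dialogue:\n{dialogue}\nSummarise what the user wants.")
    # 2. Action thought: turn that goal into a natural-language KB query.
    query = llm(f"User goal: {belief}\nWrite a short query for the knowledge base.")
    # 3. KB interaction: retrieve records to ground the reply.
    results = query_kb(query, kb)
    # 4. Response generation conditioned on the dialogue, goal and KB results.
    return llm(
        f"Dialogue:\n{dialogue}\nUser goal: {belief}\nKB results: {results}\n"
        "Reply to the user using only the KB results."
    )


llm = ScriptedLLM(replies=[
    "The user wants an Indian restaurant in the centre of town.",
    "indian restaurant in the centre",
    "Golden Curry serves Indian food in the centre. Would you like a table?",
])
reply = run_turn(llm, ["User: Any Indian places in the centre?"], KB)
print(reply)
```

Replacing `ScriptedLLM` with a real model client, or `query_kb` with a stronger retriever, leaves the four-step structure of the turn unchanged.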

Empirical Validation and Results

InstructTODS was evaluated on the MultiWOZ 2.1 benchmark. There it achieves task completion rates comparable to those of fully fine-tuned systems, despite using no task-specific training. In human evaluation, its responses are rated higher than both the gold-standard responses and those of fine-tuned systems on informativeness, helpfulness and humanness. These findings suggest that LLMs can generate more human-like and informative responses than either gold-standard or fine-tuned system responses.

Implications and Future Developments

InstructTODS has substantial practical implications. It does not depend on costly, labor-intensive data annotation or on domain-specific ontologies. This lowers the barrier to building and deploying TODS in new, previously unsupported domains. Its zero-shot design also allows the system to be adapted and extended to more domains without retraining or updating model parameters.

Conceptually, the framework challenges existing paradigms in dialogue system design. It suggests that LLM capabilities can extend beyond traditional understanding and generation tasks to interactive problem-solving and decision-making.

Limitations and Challenges

Despite these advances, InstructTODS struggles in multi-domain settings, where overlapping information from different domains can confuse the LLM. Ensuring accurate information retrieval and minimizing hallucinations remain active research problems. Extending the framework to other languages and generalizing it to other kinds of dialogue systems also warrant further study.

Conclusion

InstructTODS is a significant step toward building task-oriented dialogue systems with LLMs. It calls traditional TODS design into question by showing that conversation management and response generation can be achieved, in a modular or fully end-to-end fashion, without extensive domain-specific configuration. The long-term outlook for LLM-based dialogue systems is promising, and InstructTODS points the way toward more sophisticated, adaptable and domain-agnostic dialogue solutions.