Enhancing LLM Inference Efficiency through Context Compression

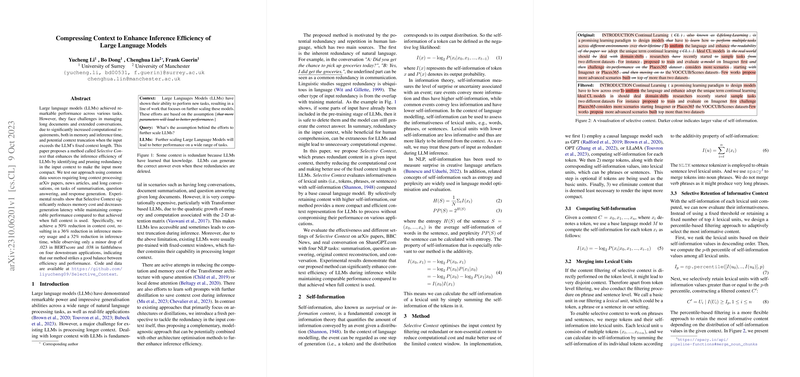

The paper "Compressing Context to Enhance Inference Efficiency of LLMs" presents Selective Context, a method for improving the efficiency of large language models (LLMs) when they process long documents and extended conversations. The authors target a practical problem: long inputs strain memory and compute, and when an input exceeds a model's fixed context window it must be truncated, discarding whatever falls outside the window.

Core Contributions

Selective Context is a new approach that prunes redundancy from the input context so that an LLM's fixed context window is used more efficiently. The main elements of the work are:

- Redundancy Identification and Pruning: The method scores the informativeness of each lexical unit in the input using self-information computed by a smaller base causal language model, then removes the least informative units to make the input more compact.

- Experimental Evaluation: The method was tested on diverse data sources requiring long contexts, such as arXiv papers and lengthy news articles. Tasks such as summarization, question answering, and conversation were employed to gauge its effectiveness.
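To make the self-information scoring concrete, here is a minimal sketch under stated assumptions. It uses a toy add-one-smoothed bigram model as a stand-in for the smaller base causal language model, and the names `train_bigram`, `token_self_information`, and `unit_self_information` are hypothetical. Each token is scored as -log2 P(token | previous token), and a lexical unit's score is the sum of its tokens' scores, because self-information adds up over a sequence under the chain rule. Unit boundaries are supplied by hand here, which is also an assumption.

```python
import math
from collections import Counter


def train_bigram(corpus_tokens: list[str]) -> tuple[Counter, Counter, Counter]:
    """Count unigrams, bigram contexts, and bigrams (toy stand-in for a causal LM)."""
    unigrams = Counter(corpus_tokens)
    contexts = Counter(corpus_tokens[:-1])  # tokens that have a successor
    bigrams = Counter(zip(corpus_tokens, corpus_tokens[1:]))
    return unigrams, contexts, bigrams


def token_self_information(tokens: list[str], model: tuple[Counter, Counter, Counter],
                           vocab_size: int) -> list[float]:
    """Return -log2 P(x_t | x_{t-1}) per token, using add-one smoothing.

    The first token has no predecessor, so it is scored with a smoothed unigram probability.
    """
    unigrams, contexts, bigrams = model
    total = sum(unigrams.values())
    scores = []
    for i, tok in enumerate(tokens):
        if i == 0:
            p = (unigrams[tok] + 1) / (total + vocab_size)
        else:
            prev = tokens[i - 1]
            p = (bigrams[(prev, tok)] + 1) / (contexts[prev] + vocab_size)
        scores.append(-math.log2(p))
    return scores


def unit_self_information(token_scores: list[float], unit_lengths: list[int]) -> list[float]:
    """Sum token scores over consecutive lexical units (self-information is additive)."""
    if sum(unit_lengths) != len(token_scores):
        raise ValueError("unit lengths must cover every token exactly once")
    unit_scores, start = [], 0
    for n in unit_lengths:
        unit_scores.append(sum(token_scores[start:start + n]))
        start += n
    return unit_scores


corpus = "the cat sat on the mat the cat ate".split()
tokens = "the cat sat on the rug".split()
model = train_bigram(corpus)
vocab = len(set(corpus) | set(tokens))
scores = token_self_information(tokens, model, vocab)
# Hypothetical unit boundaries: "the cat", "sat on", "the rug".
units = unit_self_information(scores, [2, 2, 2])
```

Here "rug" never follows "the" in the toy corpus, so it receives a higher score than "cat" and the unit "the rug" ends up more informative than "the cat". With a real model, the per-token probabilities would come from the LM's next-token distribution instead of bigram counts; the log base only rescales the scores and does not change their ranking.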

Results and Performance

The experiments show that Selective Context substantially reduces memory use and latency while keeping performance close to that obtained with the full context:

- A 50% reduction in context cost resulted in a 36% decrease in memory usage and a 32% reduction in inference time.

- Performance dropped only slightly: by 0.023 in BERTScore and 0.038 in faithfulness across four downstream tasks.

This trade-off suggests the method is practical to deploy, giving large efficiency gains for only a small loss in output quality.

Theoretical and Practical Implications

This paper offers a model-agnostic perspective on LLM efficiency that complements existing work. Architectural optimizations such as sparse or local attention change how the model processes its input; Selective Context instead shrinks the input itself, so it can be combined with those methods to reduce inference cost further.

Methodological Insights:

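- Self-Information Utilization: Self-information, -log P(x | preceding context), measures how surprising a unit is to the base model. Units the model predicts easily add little new information and are candidates for removal, which lets the method retain the most informative parts of the context.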

- Adaptive Filtering: Instead of a fixed score cutoff, the method removes units whose self-information falls below a chosen percentile of the scores in the current input, so the threshold adapts to each context's own distribution of self-information values.
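The percentile-based filter can be sketched as follows. This is an illustration under assumptions, not the authors' code: `percentile` and `selective_filter` are hypothetical names, the percentile uses linear interpolation between sorted values (the same convention as NumPy's default for `numpy.percentile`), and units scoring exactly at the threshold are kept.

```python
import math


def percentile(values: list[float], p: float) -> float:
    """p-th percentile (0-100) with linear interpolation between sorted values."""
    if not values:
        raise ValueError("values must be non-empty")
    if not 0 <= p <= 100:
        raise ValueError("p must be in [0, 100]")
    xs = sorted(values)
    k = (len(xs) - 1) * p / 100  # fractional rank into the sorted list
    lo, hi = math.floor(k), math.ceil(k)
    return xs[lo] + (xs[hi] - xs[lo]) * (k - lo)


def selective_filter(units: list[str], scores: list[float], p: float) -> list[str]:
    """Keep units whose self-information is at or above the p-th percentile.

    The threshold is recomputed for every input, so it tracks that input's own
    score distribution; the original order of the kept units is preserved.
    """
    if len(units) != len(scores):
        raise ValueError("need one score per unit")
    threshold = percentile(scores, p)
    return [u for u, s in zip(units, scores) if s >= threshold]


# Hypothetical units and scores, for illustration only.
units = ["Selective Context", "is a method", "that", "prunes redundancy"]
scores = [9.1, 3.2, 0.8, 7.5]
print(selective_filter(units, scores, p=50))  # ['Selective Context', 'prunes redundancy']
```

Because `p` is applied to units rather than tokens, p = 50 removes roughly half of the units; the share of tokens removed depends on how long the pruned units are.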

Future Directions

The work points to finer-grained refinement of lexical units as a way to improve the method's accuracy, and to combining context compression with other efficiency techniques for further gains.

Overall, the research shows that managing context efficiently can cut the computational cost of LLM inference in real-world applications, making LLMs more practical for workloads that involve long, complex inputs. The authors have released their code and data, which supports replication and further investigation.