- The paper presents the Embedding LLM (ELM) framework, which uses LLMs to translate dense vector embeddings into natural language descriptions.

- The model uses adapter layers and a two-stage training process to map domain-specific embeddings into the LLM's token-embedding space.

- Experiments on the MovieLens 25M dataset show high semantic and behavioral consistency, suggesting practical applications for the framework.

Demystifying Embedding Spaces using LLMs

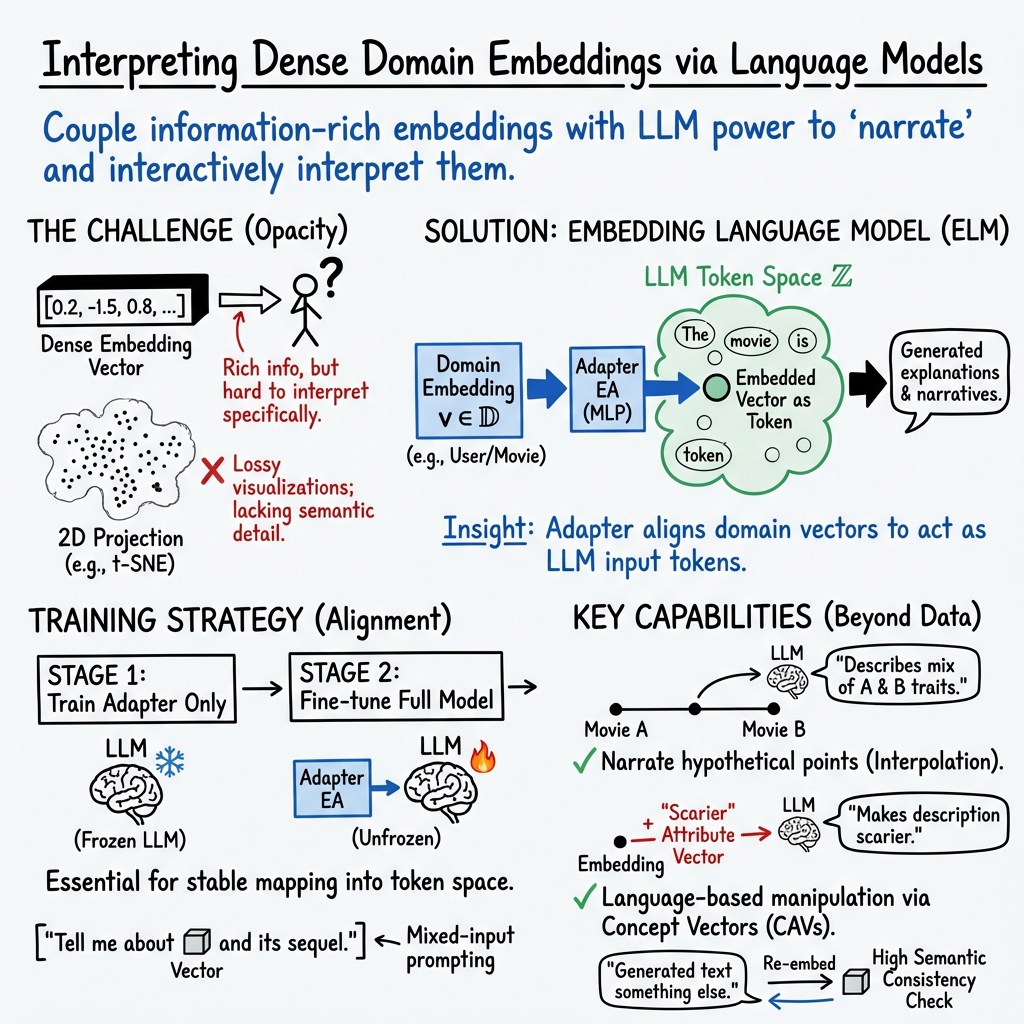

The paper "Demystifying Embedding Spaces using LLMs" proposes using LLMs to make embedding vectors interpretable. It targets a significant challenge in machine learning: dense vector representations, or embeddings, are widely used to capture complex information about entities, concepts, and relationships across diverse domains, yet they are difficult for humans to interpret directly.

Methodology

The paper introduces the Embedding LLM (ELM) framework, which allows LLMs to interact directly with domain-specific embeddings. This interaction is facilitated by training adapter layers that map domain embedding vectors into the token-embedding space of an LLM. By doing so, the ELM framework enables the LLM to treat these vectors as if they were text tokens and thus provide natural language descriptions and interpretations of these embeddings.
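To make the adapter idea concrete, here is a minimal sketch in plain Python. It assumes the adapter is a small two-layer MLP and that each domain embedding occupies a single placeholder position in the prompt. The class name `EmbeddingAdapter`, the `<E>` placeholder, the dimensions, and the random weights are all illustrative assumptions rather than details from the paper; a real system would use a tensor library and the LLM's own token-embedding table.

```python
import math
import random


def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def relu(x):
    """Element-wise ReLU non-linearity."""
    return [max(0.0, v) for v in x]


class EmbeddingAdapter:
    """Hypothetical two-layer MLP mapping a domain embedding of size d_domain
    to a vector of size d_model in the LLM's token-embedding space."""

    def __init__(self, d_domain, d_hidden, d_model, seed=0):
        rng = random.Random(seed)
        # Random initialisation; in training, these are the weights the adapter learns.
        self.W1 = [[rng.gauss(0.0, 1.0 / math.sqrt(d_domain)) for _ in range(d_domain)]
                   for _ in range(d_hidden)]
        self.W2 = [[rng.gauss(0.0, 1.0 / math.sqrt(d_hidden)) for _ in range(d_hidden)]
                   for _ in range(d_model)]

    def __call__(self, e):
        if len(e) != len(self.W1[0]):
            raise ValueError("embedding has the wrong dimension")
        return matvec(self.W2, relu(matvec(self.W1, e)))


def splice(token_embs, placeholder_idx, adapted):
    """Replace the placeholder position in a sequence of token embeddings with
    the adapter output, so the LLM receives the domain vector as one more token."""
    out = list(token_embs)
    out[placeholder_idx] = adapted
    return out


# Usage with made-up sizes and values.
d_domain, d_model = 4, 8
adapter = EmbeddingAdapter(d_domain, d_hidden=16, d_model=d_model)
movie_embedding = [0.2, -0.1, 0.7, 0.05]  # stand-in domain embedding
prompt_tokens = ["Describe", "the", "movie", "<E>", "."]
rng = random.Random(1)
# Stand-in for the LLM's own token-embedding lookup.
token_embs = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in prompt_tokens]
adapted = adapter(movie_embedding)
llm_inputs = splice(token_embs, prompt_tokens.index("<E>"), adapted)
```

A single placeholder position is the simplest choice; an adapter could instead emit several vectors per embedding, which this sketch does not attempt.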

The authors propose a two-stage training procedure. First, only the adapter layers are trained on tasks involving the embeddings, while the LLM's weights stay frozen. Second, the full ELM is fine-tuned end to end, so that it produces coherent, interpretable descriptions of the embeddings while drawing on the linguistic knowledge already stored in the LLM.
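The freezing schedule can be illustrated with a toy sketch. It assumes parameters are named with a group prefix (`adapter.` or `llm.`) and updated by plain SGD; the function names, the scalar "weights", the learning rate, and the optimizer are illustrative choices, not the paper's actual training setup.

```python
def trainable_groups(stage):
    """Parameter groups updated in each stage: stage 1 trains only the adapter,
    stage 2 fine-tunes the adapter and the LLM together."""
    if stage == 1:
        return {"adapter"}
    if stage == 2:
        return {"adapter", "llm"}
    raise ValueError(f"unknown stage: {stage}")


def sgd_step(params, grads, lr, stage):
    """One plain SGD update; parameters outside the stage's trainable groups
    are copied through unchanged (i.e. frozen)."""
    active = trainable_groups(stage)
    updated = {}
    for name, value in params.items():
        group = name.split(".", 1)[0]  # "adapter.w" -> "adapter"
        updated[name] = value - lr * grads[name] if group in active else value
    return updated


# Toy scalar "weights" standing in for real tensors.
params = {"adapter.w": 1.0, "llm.w": 2.0}
grads = {"adapter.w": 0.5, "llm.w": 0.5}
after_stage1 = sgd_step(params, grads, lr=0.1, stage=1)        # only adapter.w moves
after_stage2 = sgd_step(after_stage1, grads, lr=0.1, stage=2)  # both move
```

Training the adapter alone first gives the LLM sensible inputs before its own weights are allowed to change, which is one plausible motivation for splitting training this way.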

Experimental Evaluation

The framework is evaluated empirically on the MovieLens 25M dataset. The study considers two embedding spaces: behavioral embeddings learned from user ratings and semantic embeddings derived from movie descriptions. The authors design a range of tasks, including generating movie summaries, reviews, and user profiles, and describing hypothetical entities in the embedding space.

The authors introduce two consistency metrics to measure how well ELM works: semantic consistency (SC) and behavioral consistency (BC). SC measures how well the model's generated text aligns with the original semantic embedding, and BC measures how well behavior can be predicted from the generated descriptions. These metrics matter because they make it possible to assess quantitatively how well ELM interprets abstract embedding vectors.
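The exact metric definitions are not given in this summary, so the following is only a sketch under stated assumptions. SC is modeled as the cosine similarity between the original semantic embedding and the semantic embedding of the generated text (the text encoder that would produce the latter is not shown). BC is approximated, as an assumed formulation, by pairwise ranking agreement between scores predicted from the generated text and observed user ratings. All function names are hypothetical.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def semantic_consistency(original_embedding, generated_text_embedding):
    """SC-style score: how close the re-encoded generated text lands to the
    embedding it was generated from."""
    return cosine_similarity(original_embedding, generated_text_embedding)


def pairwise_agreement(predicted, observed):
    """BC-style score (assumed formulation): fraction of item pairs ordered the
    same way by text-derived predictions and by observed ratings. Pairs tied in
    the observed ratings are skipped; ties in the predictions count as misses."""
    agree = total = 0
    for i, j in combinations(range(len(observed)), 2):
        if observed[i] == observed[j]:
            continue
        total += 1
        if (predicted[i] - predicted[j]) * (observed[i] - observed[j]) > 0:
            agree += 1
    return agree / total if total else float("nan")


# Made-up vectors and scores for illustration.
sc = semantic_consistency([3.0, 4.0], [4.0, 3.0])             # about 0.96
bc = pairwise_agreement([0.9, 0.2, 0.7, 0.4], [5, 3, 4, 1])  # 5 of 6 pairs agree
```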

Results

Empirically, ELM generalizes well across a series of complex tasks. It achieves high semantic and behavioral consistency and produces convincing descriptions for both existing and interpolated embeddings. Human evaluators consistently rated ELM's outputs on tasks such as movie summaries and user profile descriptions as comprehensive and coherent.

Importantly, the model can also describe embeddings of hypothetical entities that do not exist in the data, obtained by interpolating between known data points. This contrasts with conventional LLMs, which cannot interpret arbitrary, non-linguistic vectors without detailed prompt engineering.
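A hypothetical entity of this kind can be produced by interpolating between the embeddings of two known movies. The sketch below assumes simple linear interpolation, since the summary does not specify the scheme; the function name and the embedding values are illustrative. The resulting vector would then be passed through the adapter like any other embedding.

```python
def interpolate(e_a, e_b, alpha):
    """Linear interpolation between two embeddings: alpha=0 returns e_a,
    alpha=1 returns e_b, and values in between give a hypothetical entity."""
    if len(e_a) != len(e_b):
        raise ValueError("embeddings must have the same dimension")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(e_a, e_b)]


# Made-up embeddings for two known movies.
movie_a = [1.0, 0.0, 2.0]
movie_b = [0.0, 1.0, 0.0]
midpoint = interpolate(movie_a, movie_b, 0.5)                    # [0.5, 0.5, 1.0]
path = [interpolate(movie_a, movie_b, t / 4) for t in range(5)]  # 5 points from movie_a to movie_b
```

One caveat: a straight line between two embeddings is not guaranteed to stay in regions the adapter saw during training, so descriptions of interpolants far from any real movie deserve extra scrutiny.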

Implications and Future Work

Such a framework has broad potential applications. By bridging the gap between complex data representations and human-interpretable insights, ELM could benefit natural language processing, recommender systems, and other domains that rely on embedding representations. Because it connects to existing LLM architectures through adapter layers, the approach is also flexible.

Future research could extend the ELM framework to other LLM architectures or incorporate more sophisticated adapter and embedding techniques. Reinforcement learning could also be applied to further improve ELM's interpretive accuracy. Additionally, allowing ELMs to adjust or be fine-tuned in real time based on user interaction and feedback could enable dynamic, personalized experiences across applications.

In summary, the paper contributes to the understanding and use of embedding spaces by proposing a method that translates dense vectors into natural language, making them accessible to human users. Its combination of LLMs with adapters that let them operate directly on embeddings opens new pathways for embedding interpretability, with both theoretical and practical implications for machine learning.