This paper introduces SPELL (Semantic Prompt Evolution based on a LLM), a black-box algorithm for automatically optimizing text-based prompts for natural language processing tasks using LLMs. The core idea is to leverage the text generation capabilities of an LLM within an evolutionary framework to create better-performing and coherent prompts for a fixed target model.

Methodology: SPELL Framework

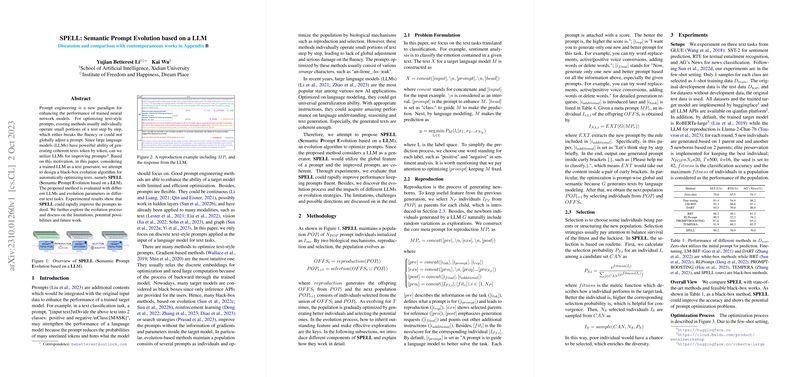

SPELL operates as an evolutionary algorithm maintaining a population of prompt candidates. It iteratively improves this population through two main steps: reproduction and selection.

- Reproduction:

- This step generates new prompt candidates (offspring) based on existing prompts (parents) from the current population.

- Parents are selected from the population (using the selection mechanism described below).

- A "meta-prompt" is constructed to guide an LLM in generating a new, improved prompt. This meta-prompt includes:

  - Task description ($i_{tsk}$).

  - Definition of a prompt and its goal ($i_{prompt}$).

  - Instructions for reproduction, suggesting modification strategies like word replacement, voice conversion, adding/deleting words ($i_{rep}$).

  - Examples of parent prompts ($pro_i$) along with their fitness scores ($fit_i$).

  - Final generation request ($i_{final}$).

  - Additional instructions, including a specific format for extracting the generated prompt (e.g., enclosed in curly braces `{}`) ($i_{additional}$).

- The LLM processes the meta-prompt and generates a response.

- An extraction function (`EXT`) isolates the newly generated prompt from the LLM's response based on the specified format (e.g., text within `{}`).

- This process relies on the LLM's ability to understand the context (task, parent prompts, scores) and generate a semantically coherent and potentially better prompt in a global, prompt-wise manner.
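The reproduction step can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: the function names `build_meta_prompt` and `extract_prompt`, the ordering and joining of the components, and the "Prompt:/Score:" layout of the parent examples are all assumptions made for this sketch. Only the overall structure (six components, extraction of text inside `{}`) comes from the description above.

```python
import re


def build_meta_prompt(task_desc, prompt_def, rep_instr, parents,
                      final_request, additional):
    """Assemble a meta-prompt from the six components described above.

    `parents` is a list of (prompt_text, fitness) pairs. The text layout
    used here is an assumption, not the paper's exact template.
    """
    examples = "\n".join(
        f"Prompt: {text}\nScore: {fitness:.3f}" for text, fitness in parents
    )
    parts = [task_desc, prompt_def, rep_instr, examples,
             final_request, additional]
    return "\n\n".join(parts)


def extract_prompt(response):
    """Hypothetical stand-in for EXT.

    Returns the text inside the first {...} pair in the LLM response,
    or None if the response does not follow the requested format.
    """
    match = re.search(r"\{([^{}]+)\}", response)
    if match is None:
        return None
    candidate = match.group(1).strip()
    return candidate or None
```

Returning `None` for a malformed response lets the caller simply discard that offspring, which is one plausible way to handle an LLM that ignores the formatting instruction.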

- Selection:

- This step chooses which individuals (prompts) survive to form the next generation's population and which are selected as parents for reproduction.

- It uses a roulette wheel selection mechanism based on fitness scores.

- The fitness of a prompt is typically its performance (e.g., accuracy) on a validation set, in the paper's few-shot setting, when used with the target model.

- The selection probability for an individual prompt is computed from its fitness score relative to the other candidates, where the candidate set is the current population plus the offspring.

- Individuals are sampled based on these probabilities. This allows fitter individuals a higher chance of selection while still permitting less fit individuals a small chance, promoting diversity.
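The selection step can be illustrated with a textbook fitness-proportional roulette wheel. This sketch makes several assumptions that the summary above does not confirm: the probability takes the standard form $p_i = fit_i / \sum_j fit_j$, fitness values are non-negative, sampling is done with replacement, and the helper names and the `n_parents` / `n_offspring` parameters are hypothetical. The `fitness_fn` and `reproduce_fn` callables stand in for evaluation on the target model and for the LLM-based reproduction step.

```python
import random


def selection_probabilities(fitnesses):
    """Standard roulette wheel probabilities (assumed form, see lead-in)."""
    total = sum(fitnesses)
    return [f / total for f in fitnesses]


def roulette_select(candidates, fitnesses, k, rng=random):
    """Sample k candidates with probability proportional to fitness.

    Assumes non-negative fitness (e.g. accuracy) and samples with
    replacement.
    """
    if sum(fitnesses) <= 0:
        # Degenerate case: every candidate scored zero, so sample uniformly.
        return [rng.choice(candidates) for _ in range(k)]
    return rng.choices(candidates, weights=fitnesses, k=k)


def evolve_one_round(population, fitness_fn, reproduce_fn,
                     n_parents, n_offspring, rng=random):
    """One reproduction + selection round over a list of prompt strings."""
    scores = [fitness_fn(p) for p in population]
    offspring = []
    for _ in range(n_offspring):
        parents = roulette_select(population, scores, n_parents, rng)
        child = reproduce_fn(parents)
        if child:  # skip failed generations (e.g. unparseable LLM output)
            offspring.append(child)
    # Survivors are drawn from the current population plus the offspring.
    pool = population + offspring
    pool_scores = scores + [fitness_fn(c) for c in offspring]
    return roulette_select(pool, pool_scores, len(population), rng)
```

Because weaker candidates keep a non-zero weight, they can still be drawn occasionally, which is the diversity effect described above.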

Experiments

- Setup: Experiments were conducted on SST-2, RTE, and AG's News tasks in a 16-shot setting. The target model was RoBERTa-large, and the default LLM for prompt generation was Llama2-Chat-7B. The population size was 20, evolved over 500 rounds.

- Results:

- SPELL improved prompt performance over zero-shot baselines but reached lower test accuracy than several state-of-the-art white-box (e.g., Fine-tuning, LM-BFF, DART) and black-box (e.g., BBT, RLPrompt, TEMPERA) methods on the reported tasks (see the paper's method comparison table).

- The optimization process converged but fluctuated significantly (instability) across different runs (see the paper's optimization process figure).

- The generated prompts were observed to be coherent and semantically meaningful, unlike some character-level optimization methods. For example, "Classify the following sentence." evolved into "Can you determine the sentiment of this sentence for me?".

- The choice of LLM for generation significantly impacted performance. ERNIE-Bot and Llama2-Chat-13B performed better than Llama2-Chat-7B, while BLOOMZ failed to follow instructions (see the paper's LLM comparison table).

- Ablation Studies:

- Using accuracy as the fitness metric worked better than using cross-entropy loss.

- Population size affects the exploration/exploitation balance.

- Larger training sets generally led to better performance, likely due to more reliable fitness estimation.
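To make the two fitness choices in the ablation concrete, the sketch below computes both from per-example class probabilities. The function names and the input layout (a list of probability vectors plus integer labels) are assumptions for illustration. The cross-entropy variant is negated so that higher is better; how the paper turns a loss into a fitness score is not covered in this summary.

```python
import math


def accuracy_fitness(probs, labels):
    """Fraction of examples whose most probable class matches the label."""
    correct = 0
    for p, y in zip(probs, labels):
        predicted = max(range(len(p)), key=p.__getitem__)
        if predicted == y:
            correct += 1
    return correct / len(labels)


def neg_cross_entropy_fitness(probs, labels, eps=1e-12):
    """Negative mean cross-entropy, so that a higher value is better.

    `eps` guards against log(0) when the true class gets zero probability.
    """
    losses = [-math.log(max(p[y], eps)) for p, y in zip(probs, labels)]
    return -sum(losses) / len(losses)
```

Note that the loss-based score is never positive, so it would need some transformation before being used as a weight in a fitness-proportional sampler such as roulette wheel selection.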

Conclusion and Discussion

SPELL demonstrates a novel approach to prompt optimization by integrating an LLM's generative power into an evolutionary algorithm. It enables global, semantic-level prompt modifications, resulting in coherent prompts and relatively rapid optimization compared to some methods requiring thousands of rounds. However, the method shows instability, and its effectiveness is highly dependent on the capability of the LLM used for generation. The paper suggests future work could focus on adapting LLMs specifically for this task, stabilizing the optimization process, and extending the approach beyond text. The appendix notes that contemporaneous works like OPRO and EVOPROMPT achieved better results, potentially because they targeted LLMs as the end task model, which might be more amenable to prompts generated by other LLMs compared to smaller models like RoBERTa.