Data Filtering Networks: A Comprehensive Overview

The paper "Data Filtering Networks" introduces an approach to dataset curation for large-scale machine learning, applied in particular to the image-text datasets used to train models such as CLIP (Contrastive Language-Image Pre-Training). The authors propose Data Filtering Networks (DFNs): neural networks designed specifically to filter a large uncurated pool of data into a high-quality training set. A central finding is that a network's quality as a filter is not predicted by standard metrics such as its ImageNet accuracy; what matters most is the quality of the data the DFN itself is trained on.

Key Insights and Methodology

The paper makes several key observations. First, the authors show that a network's performance as a data filter is distinct from its performance on downstream tasks. A model that scores well on ImageNet is not necessarily effective at filtering a pool into a high-quality training set; conversely, a model trained on a smaller but higher-quality dataset can induce a better training set despite its lower ImageNet accuracy. This challenges the common assumption that models that perform better on standard benchmarks are inherently better data filters.
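The distinction becomes concrete once "filtering performance" is written down as a procedure. The sketch below is a minimal illustration under stated assumptions, not the authors' code: a DFN is modeled as any function that scores a pair, the induced dataset is the top-scoring fraction of the pool, and filtering performance is the evaluation score of a fresh model trained on that induced set. The names `induce_dataset`, `filtering_performance`, `toy_train`, and `toy_eval` are hypothetical, and the toy stand-ins replace real CLIP training and ImageNet evaluation.

```python
from typing import Callable, Sequence, TypeVar

Pair = TypeVar("Pair")  # one image-text pair; its concrete type is left abstract


def induce_dataset(pool: Sequence[Pair], score_fn: Callable[[Pair], float],
                   keep_fraction: float) -> list:
    """Hypothetical helper: keep the highest-scoring `keep_fraction` of the pool."""
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    n_keep = max(1, round(len(pool) * keep_fraction))
    return sorted(pool, key=score_fn, reverse=True)[:n_keep]


def filtering_performance(pool, score_fn, keep_fraction, train_fn, eval_fn) -> float:
    """Hypothetical metric: score of a *fresh* model trained on the induced set.

    The DFN enters only through `score_fn`; its own benchmark accuracy
    (e.g. on ImageNet) is never consulted.
    """
    induced = induce_dataset(pool, score_fn, keep_fraction)
    model = train_fn(induced)
    return eval_fn(model)


# Toy stand-ins: each "pair" is an integer quality level, and the "model"
# trained on a dataset is simply the mean quality of that dataset.
def toy_train(data):
    return sum(data) / len(data)


def toy_eval(model):
    return model


pool = list(range(10))
print(filtering_performance(pool, lambda p: p, 0.3, toy_train, toy_eval))   # 8.0
print(filtering_performance(pool, lambda p: -p, 0.3, toy_train, toy_eval))  # 1.0
```

In this toy setting, the two filters differ only in how they rank pairs, so the gap in filtering performance comes entirely from which data they keep, independent of how either filter would score on a benchmark.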

To build effective DFNs, the authors advocate training them on high-quality data, and they show that mixing even a small proportion of low-quality data into a DFN's training set can significantly degrade its filtering performance. They also trained other architectures, including ResNet-34 and M3AE, and compared them with CLIP models as DFN candidates; CLIP models consistently performed best as filters.
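Since the best DFNs are CLIP models, it helps to recall the objective a CLIP model is trained with. The NumPy sketch below shows the standard symmetric contrastive (InfoNCE) CLIP loss on precomputed embeddings, assuming a CLIP-style DFN is trained with this usual objective on its high-quality pairs; it is not the authors' training code. The encoders are omitted, and the fixed `temperature=0.07` is an illustrative assumption (CLIP learns this value during training).

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along `axis`."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_loss(image_emb: np.ndarray, text_emb: np.ndarray,
              temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss over a batch of N matched image-text pairs.

    Row i of `image_emb` and row i of `text_emb` form a positive pair;
    every other pairing in the batch acts as a negative.
    """
    logits = l2_normalize(image_emb) @ l2_normalize(text_emb).T / temperature
    idx = np.arange(logits.shape[0])
    loss_img_to_txt = -log_softmax(logits, axis=1)[idx, idx].mean()  # each image picks its caption
    loss_txt_to_img = -log_softmax(logits, axis=0)[idx, idx].mean()  # each caption picks its image
    return float((loss_img_to_txt + loss_txt_to_img) / 2)


rng = np.random.default_rng(0)
images = rng.normal(size=(8, 16))
matched_texts = images + 0.01 * rng.normal(size=(8, 16))  # captions that fit their images
random_texts = rng.normal(size=(8, 16))                   # captions unrelated to the images
print(clip_loss(images, matched_texts))  # small: positives dominate each row and column
print(clip_loss(images, random_texts))   # larger: no consistent positive signal
```

A model trained this way on well-aligned pairs learns embeddings in which matching images and captions lie close together, which is what makes its similarity scores usable as a filtering signal.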

Experimental Evaluation

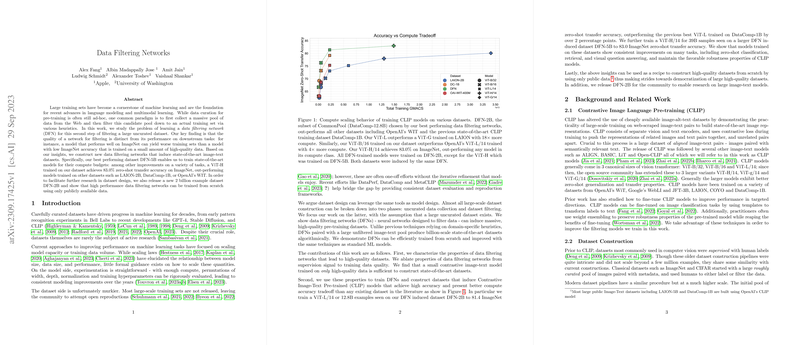

The authors conducted a thorough evaluation using the DataComp benchmark, which provides a structured framework for assessing dataset quality by training models on a candidate dataset with a fixed recipe and measuring their downstream performance. The evaluation spans three scales: medium (128M samples), large (1.28B samples), and xlarge (12.8B samples), using ViT-B/32, ViT-B/16, and ViT-L/14 models respectively.

The experiments show that DFNs trained on high-quality datasets, such as the High-Quality Image-Text Pairs dataset (HQITP-350M), can induce datasets that yield state-of-the-art models. For instance, a ViT-L/14 model trained on the DFN-2B dataset achieved 81.4% zero-shot transfer accuracy on ImageNet, outperforming models trained on earlier datasets such as LAION-2B and OpenAI's WIT-400M.
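The step of "inducing" a dataset can be sketched for a CLIP-style DFN: score each pair by the cosine similarity between its image embedding and its text embedding, then keep the highest-scoring pairs. This is a minimal sketch that assumes the DFN's encoders have already produced the embeddings and that filtering keeps a fixed top fraction of the pool; `clip_scores` and `induce_indices` are hypothetical names, and the tiny arrays are toy data, not values from the paper.

```python
import numpy as np


def clip_scores(image_emb: np.ndarray, text_emb: np.ndarray) -> np.ndarray:
    """Cosine similarity between each image and its own caption (row-wise)."""
    img = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    txt = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    return np.sum(img * txt, axis=1)


def induce_indices(scores: np.ndarray, keep_fraction: float) -> np.ndarray:
    """Indices of the top `keep_fraction` of pairs by score, best first."""
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    k = max(1, int(round(len(scores) * keep_fraction)))
    # A stable sort keeps ties in their original pool order.
    order = np.argsort(-scores, kind="stable")
    return order[:k]


# Toy pool of four pairs with 2-D embeddings.
images = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]])
texts = np.array([[1.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0]])
scores = clip_scores(images, texts)   # pairs 0 and 3 match exactly, pair 1 not at all
kept = induce_indices(scores, keep_fraction=0.5)
print(kept)  # [0 3]
```

Sorting the whole pool is fine for a sketch; at pool sizes in the billions one would more likely score in shards and keep pairs above a global score threshold, which is an engineering assumption rather than a detail given here.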

Moreover, the authors applied their DFN to a larger pool of 42B images to induce the DFN-5B dataset, on which a ViT-H model achieved 84.4% zero-shot transfer accuracy on ImageNet. This is a significant improvement over other state-of-the-art models, including those trained on LAION-2B and OpenAI's data, and shows that the DFN approach continues to deliver gains as the pool grows.

Implications and Future Directions

The research has significant implications for both the practice and the theory of machine learning. In practice, DFNs can democratize the creation of high-quality datasets, enabling researchers and practitioners to build strong models without access to proprietary data. The authors' finding that DFNs trained on Conceptual Captions and Shutterstock data can also induce high-quality datasets underscores the potential for widespread application.

Theoretically, this work opens new avenues for research in dataset design and optimization. The finding that a model's filtering performance is distinct from its downstream task performance challenges existing paradigms and suggests that future research could focus on developing new proxies for dataset quality and on testing whether these proxies generalize to other modalities, such as text, speech, and video.

Conclusion

"Data Filtering Networks" presents a compelling framework for enhancing dataset curation through specialized neural networks. By demonstrating that high-quality data is paramount in training effective DFNs, the authors provide a robust methodology for improving the performance and efficiency of large-scale machine learning models. This work not only advances the state of the art in image-text models but also provides a scalable, reproducible approach to dataset creation with broad implications for future research and practical applications in AI.