Jointly Training Large Autoregressive Multimodal Models: An Expert Overview

The paper, "Jointly Training Large Autoregressive Multimodal Models," introduces the Joint Autoregressive Mixture (JAM) framework. It addresses a gap in integrating text and image modalities within a single, robust model capable of interleaved multimodal generation. Combining these modalities is difficult because of the architectural and functional disparities between large language models (LLMs) and autoregressive text-to-image models.

Core Contributions and Methodologies

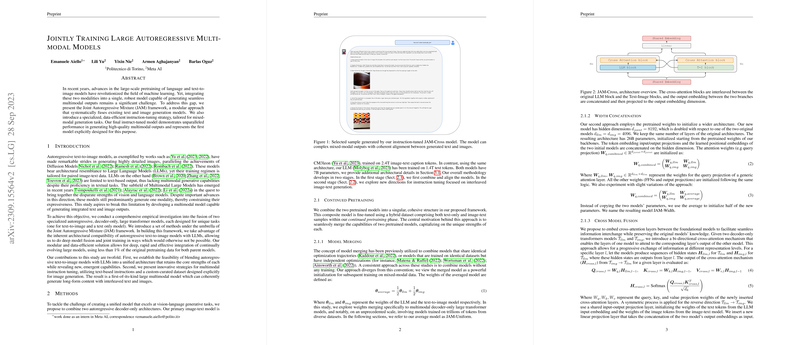

The authors introduce a framework that systematically fuses existing autoregressive models, leveraging the architectural compatibility between LLMs and text-to-image models. The JAM framework covers three fusion strategies: weight averaging, width concatenation, and cross-model fusion. Each explores a different mechanism for integrating the knowledge and capabilities of the two source models.

- Weight Averaging (JAM-Uniform): This strategy averages the parameters of the two models, aiming to retain both models' capabilities without increasing the parameter count.

- Width Concatenation (JAM-Width): Here, the hidden dimension is doubled by concatenating the two models' parameters into a single, wider architecture.

- Cross-Model Fusion (JAM-Cross): By introducing bidirectional cross-attention layers, this method lets the two models exchange knowledge progressively, layer by layer, while keeping each model intact.
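
The two parameter-space strategies above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `average_weights` and `concat_width` are hypothetical, the weights are random stand-ins, and the zero initialization of the off-diagonal blocks in the widened matrix is an assumption made here for simplicity (the paper's exact initialization may differ).

```python
import numpy as np


def average_weights(params_a, params_b):
    """Uniformly average two parameter dicts with identical names and shapes."""
    if params_a.keys() != params_b.keys():
        raise ValueError("both models must expose the same parameter names")
    return {name: 0.5 * (params_a[name] + params_b[name]) for name in params_a}


def concat_width(w_a, w_b):
    """Merge two (d_in, d_out) matrices into one block-diagonal matrix.

    Assumption: off-diagonal blocks start at zero, so each half of the wider
    hidden state initially behaves like its source model.
    """
    d_in_a, d_out_a = w_a.shape
    d_in_b, d_out_b = w_b.shape
    merged = np.zeros(
        (d_in_a + d_in_b, d_out_a + d_out_b), dtype=np.result_type(w_a, w_b)
    )
    merged[:d_in_a, :d_out_a] = w_a  # top-left block: first model
    merged[d_in_a:, d_out_a:] = w_b  # bottom-right block: second model
    return merged


# Toy usage with random stand-in weights (not real model parameters).
rng = np.random.default_rng(0)
llm = {"proj": rng.normal(size=(4, 4))}
img = {"proj": rng.normal(size=(4, 4))}

uniform = average_weights(llm, img)            # same shape as each parent
wide = concat_width(llm["proj"], img["proj"])  # doubled width: shape (8, 8)
```

Averaging keeps the parameter count of a single parent, while the widened matrix has four times as many entries per layer. With zero off-diagonal blocks, the two halves of the hidden state do not interact until further training changes those blocks.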

Empirical Outcomes

The empirical investigation shows that JAM-Cross performs best among the proposed strategies, particularly on the image-text modality, as measured by perplexity on MS-COCO. It outperforms both of its parent models and comparable models from the literature on interleaved image-text generation tasks. This result underlines the effectiveness of cross-attention as a bridge between the capabilities of the two models.
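
To make the cross-attention exchange concrete, the following is a minimal single-head NumPy sketch of one bidirectional fusion step between two hidden-state streams. It is an illustration under stated assumptions rather than the paper's architecture: the names `cross_attention`, `bidirectional_fusion`, and `init_params` are hypothetical, both streams are assumed to cover the same token positions, a causal mask is assumed to preserve autoregressive decoding, and the zero-initialized output projection (which makes the new layer a no-op at the start) is a common practice assumed here, not a detail taken from the paper.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(h_query, h_context, w_q, w_k, w_v, w_o):
    """Single-head causal cross-attention: h_query attends to h_context."""
    q, k, v = h_query @ w_q, h_context @ w_k, h_context @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    # Assumed causal mask: position i may only see context positions <= i.
    future = np.triu(np.ones(scores.shape, dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    return softmax(scores) @ v @ w_o


def bidirectional_fusion(h_text, h_image, params_text, params_image):
    """Each stream adds a residual update computed from the other stream."""
    new_text = h_text + cross_attention(h_text, h_image, *params_text)
    new_image = h_image + cross_attention(h_image, h_text, *params_image)
    return new_text, new_image


def init_params(rng, d, zero_output=True):
    """Random q/k/v projections; output projection optionally zero."""
    w_q, w_k, w_v = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))
    w_o = np.zeros((d, d)) if zero_output else rng.normal(size=(d, d)) / np.sqrt(d)
    return w_q, w_k, w_v, w_o


# Toy usage: 5 positions, hidden size 8, random stand-in activations.
rng = np.random.default_rng(0)
h_text = rng.normal(size=(5, 8))
h_image = rng.normal(size=(5, 8))
new_text, new_image = bidirectional_fusion(
    h_text, h_image, init_params(rng, 8), init_params(rng, 8)
)
```

Because the update is residual and the output projection starts at zero in this sketch, each stream initially passes through unchanged, so fusion begins from the behavior of the two source models and departs from it only as the new layers are trained.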

The paper also highlights the efficiency of the continued pretraining phase, which integrates the models using less than 1% of the original pretraining data. This approach not only retains the original models' performance but also unlocks emergent capabilities that neither parent model exhibits on its own.

Instruction Tuning for Multimodal Generation

A further contribution is a specialized, data-efficient instruction-tuning strategy for mixed-modal generation tasks. It uses a custom-curated dataset to teach the model to generate text and images together, demonstrating that a small, curated dataset can be enough to instruct a large multimodal model.

Implications and Future Directions

The findings have implications for both practical applications and theoretical work in AI. The proposed framework paves the way for more sophisticated systems capable of multimodal interaction, particularly in conversational AI. In practice, this could lead to applications in education, content creation, and interactive media, where seamless integration of text and imagery improves the user experience.

Looking forward, the authors suggest scaling this approach to larger models and to model pairs with architectural asymmetries. There is also potential to extend the context window and to apply these methods in multi-turn conversational settings, broadening the practical applicability of the models.

Conclusion

The Joint Autoregressive Mixture framework is a meaningful advance toward robust multimodal models. By combining efficient model-fusion methods with data-efficient instruction tuning, this research takes a step toward AI systems capable of complex interactions across modalities. It opens avenues for further work on scaling, extending capabilities, and building richer user interactions across diverse applications.