The Role of LLMs in Enhancing Fake News Detection: An Examination of LLMs as Advisors

Large language models (LLMs) have attracted significant attention for their proficiency across a wide range of tasks. Whether that proficiency carries over to domains requiring nuanced understanding, such as fake news detection, is still an open question. This essay reviews a paper that critically evaluates the role of LLMs in fake news detection and proposes a framework that combines the strengths of LLMs and small language models (SLMs).

Main Findings and Methodology

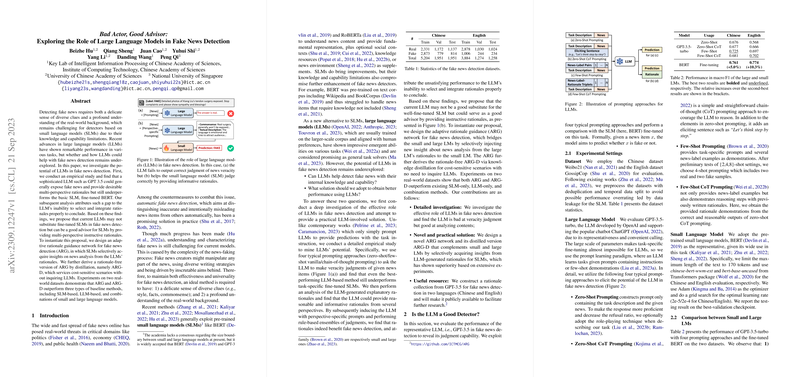

The paper starts by empirically examining whether LLMs such as GPT-3.5 can effectively detect fake news. It compares the LLM's performance under several prompting strategies against a fine-tuned SLM, specifically BERT. The investigation concludes that, despite their strength in rationale generation and multi-perspective analysis, LLMs do not outperform a fine-tuned SLM on the task-specific problem of fake news detection.

The analysis attributes this underperformance to the LLM's difficulty in selecting and integrating its own rationales to reach a final judgment. The rationales themselves are still informative, however. LLMs therefore may not surpass SLMs as detectors, but they can serve as valuable advisors whose rationales improve the decisions made by SLMs.

Based on these insights, the authors propose an Adaptive Rationale Guidance Network (ARG), which mediates the interaction between SLMs and LLMs. ARG uses rationales generated by the LLM as additional input that supports the SLM's decision in fake news detection. The authors also introduce a distilled version, ARG-D, which retains the benefit of the rationales in a model that does not need to query the LLM for each prediction, reducing operational cost in scenarios where resource efficiency is crucial.
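To make the idea of rationale guidance concrete, the following is a deliberately simplified sketch, not the authors' architecture. It assumes the news text and the LLM rationale have already been encoded as fixed-length feature vectors, and it uses a single hypothetical "usefulness" gate to decide how much of the rationale to pass on to the classifier. The names `fuse`, `classify`, `gate_weights`, and `clf_weights` are illustrative assumptions and do not come from the paper.

```python
import math


def sigmoid(x):
    """Logistic function mapping a real number to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    """Dot product of two equal-length lists of floats."""
    return sum(x * y for x, y in zip(a, b))


def fuse(news_vec, rationale_vec, gate_weights, gate_bias=0.0):
    """Scale the rationale features by a usefulness gate, then concatenate.

    Hypothetical gate: one scalar in (0, 1) computed from both vectors.
    A value near 0 means the rationale is mostly ignored.
    """
    usefulness = sigmoid(dot(gate_weights, news_vec + rationale_vec) + gate_bias)
    gated_rationale = [usefulness * r for r in rationale_vec]
    return news_vec + gated_rationale, usefulness


def classify(features, clf_weights, clf_bias=0.0):
    """Linear head returning the estimated probability that the item is fake."""
    return sigmoid(dot(clf_weights, features) + clf_bias)


if __name__ == "__main__":
    news = [0.2, -0.1]        # stand-in for an SLM (e.g. BERT) news encoding
    rationale = [2.0, -4.0]   # stand-in for an encoded LLM rationale
    fused, usefulness = fuse(news, rationale, gate_weights=[0.0] * 4)
    print(usefulness, fused)
    print(classify(fused, clf_weights=[0.5, 0.5, 0.25, 0.25]))
```

In a trained system the gate and classifier parameters would be learned jointly, so that rationales the model finds unhelpful are down-weighted rather than trusted blindly. The final judgment stays with the small model in this sketch, which mirrors the advisor role described above.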

Empirical Evaluation

The proposed models, ARG and ARG-D, improve on both standalone SLMs and conventional techniques that combine LLMs with SLMs. Evaluations on real-world Chinese and English datasets support this result, with ARG achieving higher macro F1 scores than the baselines. This indicates that rationale guidance from LLMs can be exploited without relying on the LLM for the final judgment.
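For readers unfamiliar with the metric, macro F1 is the unweighted mean of the per-class F1 scores, so the real and fake classes count equally even when one is much rarer than the other. The sketch below is a plain-Python illustration of that definition; the function name `macro_f1` and the label encoding (1 for fake, 0 for real) are assumptions made for the example and are not taken from the paper's evaluation code.

```python
def macro_f1(y_true, y_pred, labels=(0, 1)):
    """Return the unweighted mean of per-class F1 scores.

    Per-class F1 is computed as 2*TP / (2*TP + FP + FN); a class with
    no true or predicted instances contributes 0.0.
    """
    per_class = []
    for label in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        denom = 2 * tp + fp + fn
        per_class.append(2 * tp / denom if denom else 0.0)
    return sum(per_class) / len(per_class)


if __name__ == "__main__":
    # Toy labels (assumed encoding: 1 = fake, 0 = real).
    y_true = [1, 1, 1, 0, 0, 0, 0, 0]
    y_pred = [1, 0, 0, 0, 0, 0, 0, 1]
    print(round(macro_f1(y_true, y_pred), 4))
```

On this toy input the fake class has an F1 of 0.4 and the real class 8/11, giving a macro F1 of about 0.56, noticeably lower than the plain accuracy of 0.625. That gap is why macro F1 is a common choice when the classes are imbalanced.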

Implications and Future Directions

This research has several implications for the development of AI in tasks that depend on extensive real-world knowledge and nuanced reasoning. Treating LLMs as advisors rather than sole decision-makers points toward a paradigm in which complex tasks are handled by collaborating models instead of a single monolithic one.

For future research, better methods for selecting and integrating multi-perspective rationales from LLMs may offer further improvements. Extending the study to other types of data and to additional languages would also show how broadly the approach applies.

Conclusion

The paper presents a compelling argument for using LLMs not as standalone replacements for domain-specific models but as collaborators that enhance the effectiveness of small, task-optimized models such as BERT. Its hybrid approach opens a path to more robust and resource-efficient deployment in fake news detection, and potentially in other domains that require deep contextual understanding.

In sum, while LLMs have yet to supersede fine-tuned SLMs on task-specific problems, their role as advisors that supply rationales and improve model interpretability marks an important development in how large and small language models can be combined.