Enhancing Robotic Manipulation: Leveraging Physically Grounded Vision-Language Models

Introduction to Physical Concepts in Robotics

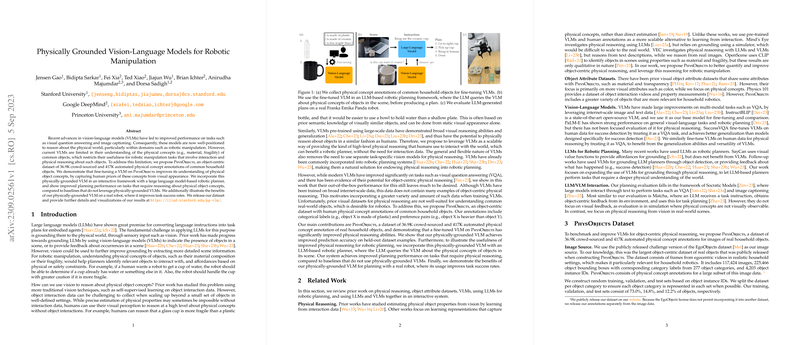

Integrating large language models (LLMs) with robotic systems can make task execution both more efficient and more reliable. Much recent research has focused on linking LLM capabilities to an understanding of the physical world, primarily through vision-language models (VLMs). This paper discusses advances in that area, centered on the development and use of PhysObjects, a dataset designed to fine-tune VLMs so that they better understand and generalize physical object concepts. The work addresses a gap in existing models: they reason poorly about physical attributes, which is a prerequisite for nuanced robotic manipulation in real-world settings.

Bridging the Gap with PhysObjects

For a robot to understand and interact with the physical world, it must attribute the correct physical characteristics to objects, such as weight or material composition, based on visual cues. The proposed solution, PhysObjects, is a large collection of physical concept annotations for common household items, intended to narrow the gap between human-level understanding and robotic reasoning. The dataset includes:

- Crowd-sourced Annotations: To construct a diverse and realistic dataset, the researchers used crowd-sourcing to gather 39.6K annotations across various physical concepts for household objects.

- Automated Annotations: To supplement the crowd-sourced data, the team generated a further 417K automated annotations, focusing on physical attributes that are easy to deduce from visual analysis.
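
To make the two annotation sources concrete, here is a minimal sketch of how a single annotation might be represented and tallied by source. The schema is an assumption made for illustration: the class `PhysicalAnnotation`, its field names, the helper `count_by_source`, and the example objects and labels are all hypothetical and are not taken from the PhysObjects release.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Literal


@dataclass(frozen=True)
class PhysicalAnnotation:
    """One physical-concept label for one object (hypothetical schema)."""

    object_id: str                          # illustrative identifier, not a real dataset key
    concept: str                            # e.g. "weight" or "material"
    label: str                              # e.g. "heavy" or "plastic"
    source: Literal["crowd", "automated"]   # how the label was produced


def count_by_source(annotations: list[PhysicalAnnotation]) -> Counter:
    """Tally annotations by whether they were crowd-sourced or automated."""
    return Counter(a.source for a in annotations)


# Made-up records, used only to exercise the sketch.
annotations = [
    PhysicalAnnotation("mug_01", "material", "ceramic", "automated"),
    PhysicalAnnotation("mug_01", "weight", "light", "crowd"),
    PhysicalAnnotation("bottle_07", "material", "plastic", "automated"),
]

counts = count_by_source(annotations)
print(counts["crowd"], counts["automated"])
```

Keeping the source on every record makes it straightforward to train or evaluate on the crowd-sourced and automated subsets separately.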

Key Contributions and Findings

- Dataset Creation: The formation of the PhysObjects dataset marks a significant stride toward enriching the physical reasoning capabilities of VLMs. By incorporating both manually annotated and automated physical concept labels, the dataset aims to cover a broad spectrum of everyday objects and their characteristics.

- VLM Performance Enhancement: Fine-tuning VLMs on the PhysObjects dataset improved their physical reasoning, as shown by higher test accuracy, including on held-out concepts that the model was not directly trained on. This suggests that the fine-tuned model can generalize from learned physical attributes to novel scenarios.

- Application in Robotic Planning: Integrating the physically grounded VLM into a robotic planning framework improved both planning accuracy and task success rates. Experiments on a real robot also showed improved task performance, which underscores the practical applicability of the research.
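
One way to picture the planning integration described above is as a two-stage pipeline: a VLM is queried about physical concepts for each object in the scene, and the answers are written into the text prompt given to a language-model planner. The sketch below illustrates that flow under stated assumptions and is not the authors' implementation. The function names (`describe_scene`, `build_planner_prompt`), the `ConceptQuery` signature, the prompt wording, and the stub that stands in for a fine-tuned VLM are all hypothetical.

```python
from typing import Callable

# Hypothetical interface: (image_path, object_name, concept) -> short text answer.
ConceptQuery = Callable[[str, str, str], str]


def describe_scene(
    image_path: str,
    objects: list[str],
    concepts: list[str],
    query_vlm: ConceptQuery,
) -> str:
    """Ask the VLM about every (object, concept) pair and format the answers as text."""
    lines = []
    for obj in objects:
        facts = [f"{c}: {query_vlm(image_path, obj, c)}" for c in concepts]
        lines.append(f"- {obj} ({'; '.join(facts)})")
    return "\n".join(lines)


def build_planner_prompt(task: str, scene_description: str) -> str:
    """Combine the task and the grounded scene description into a planner prompt."""
    return (
        f"Task: {task}\n"
        f"Objects in the scene:\n{scene_description}\n"
        "Write a step-by-step plan for the robot."
    )


def stub_vlm(image_path: str, obj: str, concept: str) -> str:
    """Stand-in for a fine-tuned VLM; returns canned answers for illustration only."""
    answers = {
        ("mug", "material"): "ceramic",
        ("bottle", "material"): "plastic",
        ("bottle", "weight"): "light",
    }
    return answers.get((obj, concept), "unknown")


scene = describe_scene("scene.png", ["mug", "bottle"], ["material", "weight"], stub_vlm)
prompt = build_planner_prompt("Move the lightest object to the shelf.", scene)
print(prompt)
```

Passing the VLM in as a callable keeps the sketch independent of any particular model API; in a real system the stub would be replaced by a call to the physically grounded VLM.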

Future Directions and Applications

These results leave several directions open. Potential extensions include:

- Exploring Additional Physical Concepts: Future work might cover a broader range of physical concepts beyond the attributes considered so far, enriching both the dataset and the model's understanding.

- Integrating Geometric and Social Reasoning: Combining geometric and social reasoning with physical understanding could be a valuable direction, with the aim of building more holistic and context-aware robotic systems.

Concluding Remarks

In summary, the development of PhysObjects and its use in fine-tuning VLMs for robotic manipulation tasks are important steps forward for robotics and AI. By improving the physical reasoning of robots, this work supports more nuanced and effective interaction with the physical world and moves robotic systems closer to human-like understanding and flexibility.