An Overview of "Tiny LVLM-eHub: Early Multimodal Experiments with Bard"

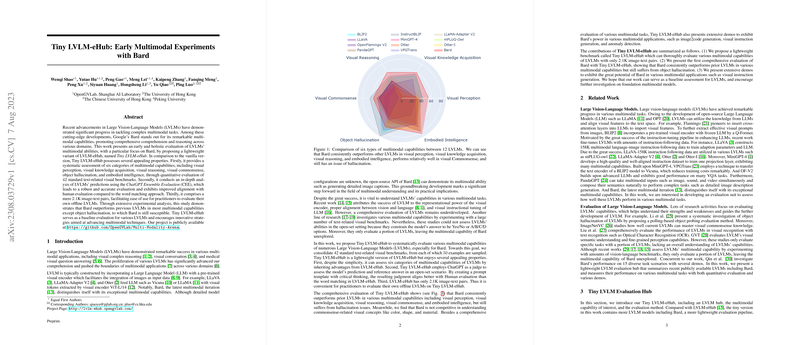

The paper presents a thorough evaluation framework, Tiny LVLM-eHub, designed to assess the capabilities of Large Vision-Language Models (LVLMs), with a focus on Google's Bard. This work systematically evaluates multimodal abilities across six categories: visual perception, visual knowledge acquisition, visual reasoning, visual commonsense, object hallucination, and embodied intelligence. The authors provide a quantitative analysis using 42 standard text-related visual benchmarks.

Key Contributions and Methodology

- Evaluation Framework: Tiny LVLM-eHub is a streamlined variant of LVLM-eHub that supports a detailed assessment of several multimodal capabilities. Unlike its predecessor, it adopts a more refined evaluation metric, ChatGPT Ensemble Evaluation (CEE), which aligns better with human evaluation because it handles open-set scenarios, where a correct answer need not repeat the reference wording.

- Multimodal Capabilities: The framework categorizes the multimodal evaluation into:

  - Visual Perception: Tasks such as image classification and object counting.

  - Visual Knowledge Acquisition: Optical character recognition (OCR) and Key Information Extraction (KIE).

  - Visual Reasoning: Visual Question Answering (VQA) and Knowledge-Grounded Image Description (KGID).

  - Visual Commonsense: Understanding of generic visual concepts.

  - Object Hallucination: Whether a model describes objects that are not present in the image, a common failure in LVLMs.

  - Embodied Intelligence: Practical implications in virtual home environments.

- Quantitative Analysis: Bard outperforms the other evaluated LVLMs on most capabilities, with object hallucination as the exception. It demonstrates superior visual perception, knowledge acquisition, and reasoning. The paper also highlights Bard’s weakness in visual commonsense related to color and shape, echoing findings in similar assessments.
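Object hallucination, the one capability above where Bard does not lead, is commonly measured by asking a model yes/no questions about whether an object is present and scoring the answers. The summary above does not say which protocol the paper uses, so the following is only a minimal sketch of that general approach. The function name `score_yes_no`, the `HallucinationScores` container, and the rule that an answer counts as "yes" when it starts with that word are illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class HallucinationScores:
    accuracy: float
    precision: float
    recall: float
    yes_ratio: float  # share of "yes" answers; a high value hints at over-affirmation


def score_yes_no(predictions: list[str], labels: list[str]) -> HallucinationScores:
    """Score answers to questions such as 'Is there a dog in the image?'.

    Assumption: a free-form answer counts as "yes" if it starts with "yes".
    """
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and of equal length")

    pred_yes = [p.strip().lower().startswith("yes") for p in predictions]
    gold_yes = [g.strip().lower() == "yes" for g in labels]

    tp = sum(p and g for p, g in zip(pred_yes, gold_yes))
    fp = sum(p and not g for p, g in zip(pred_yes, gold_yes))
    fn = sum(not p and g for p, g in zip(pred_yes, gold_yes))
    tn = sum(not p and not g for p, g in zip(pred_yes, gold_yes))

    n = len(labels)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return HallucinationScores((tp + tn) / n, precision, recall, sum(pred_yes) / n)


# Made-up answers, for illustration only.
scores = score_yes_no(
    ["Yes, there is a dog.", "yes", "No.", "Yes"],
    ["yes", "no", "no", "yes"],
)
print(scores)
```

In this toy run the second answer affirms an object that is absent, which lowers precision while recall stays at 1.0. A model that hallucinates objects tends to show that pattern together with a high yes ratio.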

Results and Implications

- Numerical Findings: Bard excels in visual perception and reasoning tasks, with higher accuracy than the other models, but it remains susceptible to object hallucination. It also falls short on some nuanced visual commonsense tasks, notably those involving color and shape, which marks an area for future improvement.

- Robustness of CEE Metric: CEE aligns more reliably with human judgment than traditional word-matching approaches, underscoring the importance of sophisticated evaluation techniques in assessing LVLM outputs.
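The difference between word matching and an ensemble-style judgment can be illustrated with a small sketch. It is not the paper's implementation: it assumes that an ensemble evaluation poses the same judgment under several differently worded prompts and takes a majority vote. The prompt texts, the `toy_judge` stand-in (which replaces a real chat-model call with a synonym table), and all function names are hypothetical.

```python
from typing import Callable, Sequence

# (prompt_template, question, reference, prediction) -> judged correct?
Judge = Callable[[str, str, str, str], bool]


def word_match(reference: str, prediction: str) -> bool:
    """Baseline: correct only if the reference string appears in the prediction."""
    return reference.strip().lower() in prediction.strip().lower()


def ensemble_vote(judge: Judge, templates: Sequence[str],
                  question: str, reference: str, prediction: str) -> bool:
    """Ask the judge once per prompt template and return the majority verdict."""
    votes = [judge(t, question, reference, prediction) for t in templates]
    return sum(votes) * 2 > len(votes)


# Hypothetical prompt wordings; a real setup would send these to a chat model.
TEMPLATES = [
    "Does the prediction mean the same as the reference? Answer yes or no.",
    "Judge whether the prediction is semantically correct given the reference.",
    "Use strict matching: is the reference word present in the prediction?",
]

# Toy knowledge standing in for the semantic judgment of a language model.
SYNONYMS = {"sofa": {"sofa", "couch"}}


def toy_judge(template: str, question: str, reference: str, prediction: str) -> bool:
    """Deterministic stand-in for an LLM judge (assumption, for illustration only)."""
    ref = reference.strip().lower()
    accepted = {ref} if "strict" in template else SYNONYMS.get(ref, {ref})
    words = set(prediction.lower().replace(".", " ").split())
    return bool(accepted & words)


question, reference, prediction = "What furniture is shown?", "sofa", "A couch."
print(word_match(reference, prediction))  # False: "sofa" is not a substring
print(ensemble_vote(toy_judge, TEMPLATES, question, reference, prediction))  # True: 2 of 3 votes
```

The open-set answer "A couch." is rejected by word matching but accepted by the vote, which is the kind of case where an ensemble judgment would be expected to track human evaluation more closely.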

Practical and Theoretical Implications

From a theoretical standpoint, the paper provides a comprehensive methodology for evaluating LVLMs, highlighting the need for nuanced metrics that can handle the complexity of multimodal tasks. Practically, the insights gained from Bard's evaluation can guide future developments, emphasizing the importance of balancing advanced perception with commonsense knowledge.

Future Directions

Future research should continue to refine LVLM capabilities by addressing their limitations in commonsense understanding and hallucination. Moreover, exploring additional dimensions such as political bias, content safety, and fairness remains crucial. Bard’s performance opens avenues for leveraging its strengths in real-world applications such as automated data processing and interaction within embodied systems.

By introducing the Tiny LVLM-eHub, the authors contribute a significant tool for benchmarking and advancing multimodal models, facilitating a deeper understanding of their strengths and limitations in complex tasks.