Essay on "Do Multilingual LLMs Think Better in English?"

The paper "Do Multilingual LLMs Think Better in English?" critically examines translate-test, the common practice of translating non-English input into English with an external machine translation system before passing it to a multilingual LLM. The authors propose an alternative called self-translate, which uses the model's own few-shot translation ability and so removes the dependency on an external translation system.

Methodology

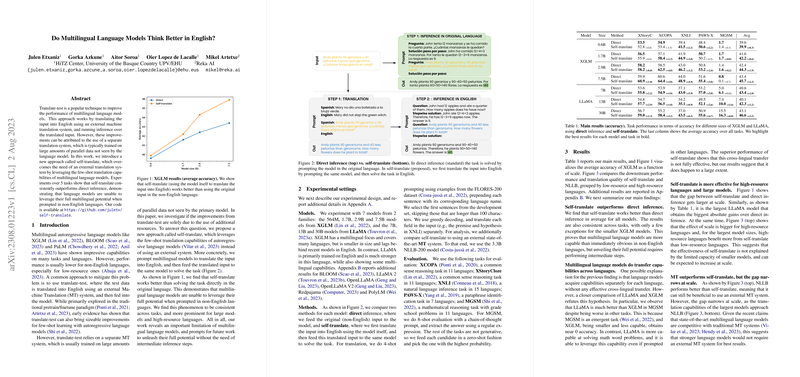

Self-translate builds on the few-shot translation capabilities of multilingual autoregressive LLMs. It is presented as an alternative to translate-test, which traditionally requires an external machine translation system. The model is first prompted to translate the input into English, and the same model is then run on the translated input to perform the task. The authors evaluate the approach on five tasks and compare it against direct inference on the original non-English input.
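The two-step procedure described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `generate` callable stands in for any text-completion model, and the prompt layout, the function names, and the `{input}` placeholder in the task template are all assumptions made for this example.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: any function that maps a prompt to a completion.
Generate = Callable[[str], str]


def build_translation_prompt(
    examples: List[Tuple[str, str]], source_lang: str, text: str
) -> str:
    """Build a few-shot prompt that asks the model to translate `text` into English.

    `examples` holds (source sentence, English translation) demonstration pairs.
    The "<language>: ... / English: ..." layout is an assumed format.
    """
    blocks = [f"{source_lang}: {src}\nEnglish: {tgt}" for src, tgt in examples]
    blocks.append(f"{source_lang}: {text}\nEnglish:")
    return "\n\n".join(blocks)


def self_translate(
    generate: Generate, examples: List[Tuple[str, str]], source_lang: str, text: str
) -> str:
    """Step 1: have the model translate its own input into English."""
    completion = generate(build_translation_prompt(examples, source_lang, text))
    # Keep only the first line, in case the model continues the few-shot pattern.
    return completion.strip().split("\n")[0].strip()


def self_translate_then_solve(
    generate: Generate,
    examples: List[Tuple[str, str]],
    source_lang: str,
    text: str,
    task_template: str,
) -> str:
    """Step 2: run the same model on the English translation to solve the task.

    `task_template` is an assumed task prompt containing an `{input}` placeholder.
    """
    english = self_translate(generate, examples, source_lang, text)
    return generate(task_template.format(input=english))
```

The point of the design is that both steps call the same `generate` function, so no external translation system is involved. Swapping `self_translate` for a call to a separate translation model would turn the sketch into translate-test.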

Experimental Setup

The experiments cover several multilingual model families, including XGLM, BLOOM, and LLaMA, and five tasks: XCOPA, XStoryCloze, XNLI, PAWS-X, and MGSM. Three methods are compared: direct inference on the original input, self-translate, and translate-test with an external machine translation system (NLLB). Across these settings, self-translate consistently outperforms direct inference.
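A comparison of this kind can be organized as in the sketch below, which evaluates one multiple-choice dataset under several input-preparation strategies. It is an assumed harness written for illustration, not the evaluation code used in the paper: the `score` callable (higher means the model prefers that choice), the example dictionary keys, and the function names are all hypothetical.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical interfaces, assumed for this sketch.
Translator = Callable[[str], str]     # maps input text to the text fed to the model
Scorer = Callable[[str, str], float]  # model preference for a choice given a premise


def predict(score: Scorer, premise: str, choices: Sequence[str]) -> int:
    """Return the index of the highest-scoring choice (first one wins ties)."""
    scores = [score(premise, choice) for choice in choices]
    return max(range(len(choices)), key=scores.__getitem__)


def evaluate(score: Scorer, examples: List[dict], translate: Translator) -> float:
    """Accuracy of the model when every input is first passed through `translate`."""
    if not examples:
        raise ValueError("examples must not be empty")
    correct = 0
    for ex in examples:
        premise = translate(ex["premise"])
        choices = [translate(choice) for choice in ex["choices"]]
        if predict(score, premise, choices) == ex["label"]:
            correct += 1
    return correct / len(examples)


def compare(
    score: Scorer, examples: List[dict], translators: Dict[str, Translator]
) -> Dict[str, float]:
    """Evaluate the same model and data under each input-preparation method."""
    return {name: evaluate(score, examples, t) for name, t in translators.items()}
```

In this framing, direct inference corresponds to the identity function, self-translate to a translator backed by the evaluated model itself, and translate-test to a translator backed by an external system such as NLLB. Only the `translate` argument changes between methods, so any accuracy difference is attributable to how the input was prepared.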

Results

The reported results show that self-translate improves average accuracy over direct inference. Larger models show larger gains, which suggests that model capacity affects how well internal translation works. The authors conclude that models fail to use their full multilingual potential when prompted directly in non-English languages: if translating to English first helps, the model had the knowledge needed to solve the task but could not apply it to the original input.

Implications

The findings have both theoretical and practical implications. Theoretically, the paper exposes a limitation of current multilingual models: they perform below their own capability when operating in non-English languages. Practically, this points toward improving how models handle non-English input directly, so that no translation step, internal or external, is needed. Such improvements could make cross-lingual applications more efficient and more accessible to speakers of other languages.

Conclusion

In summary, self-translate shows that multilingual models have capabilities that direct inference in non-English languages leaves untapped. By narrowing the performance gap observed in direct non-English inference, it offers a way to improve existing pipelines without an external translation system, and it motivates future work on models that process multilingual input well without any translation step.