A Unified Model for Diverse Multimodal Tasks

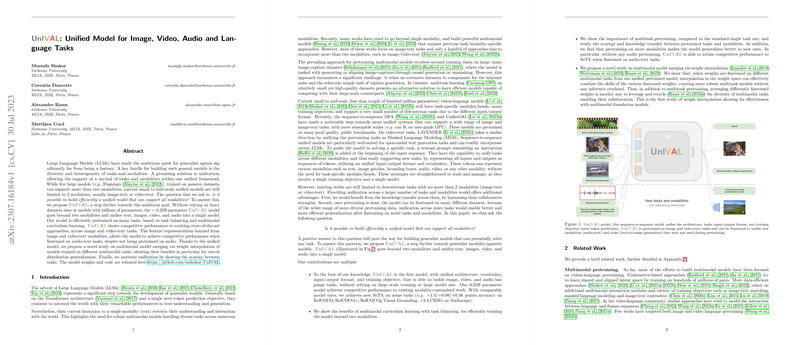

This paper proposes a unified model designed to handle tasks across multiple modalities, including image, video, audio, and text, within a single network. The goal is to replace the diverse and heterogeneous collection of task-specific models that multimodal tasks typically require. The work contributes to the growing field of unified models, with a particular focus on scalability and efficiency.

Model Architecture and Methodology

The model uses a sequence-to-sequence architecture in which inputs from every modality are converted into a unified, token-based format. It is relatively small, at approximately 0.25 billion parameters, far fewer than the multi-billion-parameter models common in the field. According to the paper, this compact size does not prevent the model from handling multiple modalities, which is an important consideration for resource-constrained environments.
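To make the "unified token-based format" more concrete, the following minimal sketch shows how heterogeneous tasks could all be expressed as (source text, target text) pairs for one sequence-to-sequence model. The `Example` type, the task names, and the instruction templates are hypothetical illustrations, not the paper's actual prompts; the non-text input itself is assumed to be encoded separately and supplied to the model as extra tokens alongside the source text.

```python
from dataclasses import dataclass


@dataclass
class Example:
    task: str       # hypothetical task name, e.g. "caption" or "vqa"
    modality: str   # "image", "video", "audio" or "text"
    fields: dict    # task-specific text fields


# Hypothetical instruction templates; the paper's actual prompts may differ.
TEMPLATES = {
    "caption": ("what does the {modality} describe?", "{caption}"),
    "vqa": ("{question}", "{answer}"),
}


def to_seq2seq(ex: Example) -> tuple:
    """Render any supported task as a (source text, target text) pair.

    Assumption: the raw image/video/audio is handled by a separate encoder
    and its tokens are fed to the model together with this source text.
    """
    src_tpl, tgt_tpl = TEMPLATES[ex.task]
    values = {"modality": ex.modality, **ex.fields}
    # str.format ignores unused keyword arguments, so one dict serves both templates.
    return src_tpl.format(**values), tgt_tpl.format(**values)


if __name__ == "__main__":
    print(to_seq2seq(Example("vqa", "image", {"question": "what color is the bus?", "answer": "red"})))
    print(to_seq2seq(Example("caption", "audio", {"caption": "a dog barks"})))
```

Because every task reduces to the same pair of strings, a single model and a single training loss can serve all of them; only the templates change per task.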

A key architectural component is a linear connection layer that projects non-textual data, such as images and audio, into the shared input (embedding) space of a pretrained LLM, which forms the core of the system's processing capability. The model is trained with a next-token prediction objective, so the same network can both interpret multimodal inputs and generate coherent text outputs across diverse tasks.
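A minimal sketch of the two ingredients named above, written in plain Python for readability: a linear projection y = Wx + b that turns modality features into "soft tokens" in the LLM's embedding space, and the standard cross-entropy loss used for next-token prediction. The dimensions, the toy weights, and the idea that features come from a separate modality encoder are assumptions for illustration; a real implementation would use a tensor library and learned weights.

```python
import math


def linear_project(features, weight, bias):
    """Hypothetical linear connection layer: y = W x + b for each feature vector.

    features: list of d_in-dim vectors (e.g. features from a modality encoder)
    weight:   d_model rows, each of length d_in
    bias:     d_model values
    Returns one d_model-dim "soft token" per input feature vector.
    """
    return [
        [sum(w * x_j for w, x_j in zip(row, x)) + b for row, b in zip(weight, bias)]
        for x in features
    ]


def next_token_loss(logits, targets):
    """Mean cross-entropy for next-token prediction.

    logits[t] scores the vocabulary at position t; targets[t] is the id of the
    token that actually follows position t (teacher forcing).
    """
    total = 0.0
    for row, y in zip(logits, targets):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_z - row[y]  # equals -log softmax(row)[y]
    return total / len(targets)


if __name__ == "__main__":
    # Two 2-dim modality features projected into a toy 3-dim embedding space.
    W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    b = [0.0, 0.0, 1.0]
    soft_tokens = linear_project([[2.0, 3.0], [1.0, 1.0]], W, b)
    text_embeddings = [[0.5, 0.5, 0.5]]        # stand-in for an embedded text prompt
    llm_input = soft_tokens + text_embeddings  # one combined sequence for the LLM
    print(len(llm_input), soft_tokens[0])      # 3 [2.0, 3.0, 6.0]
    # Uniform logits over a 4-token vocabulary give a loss of log(4).
    print(next_token_loss([[0.0] * 4], [2]))
```

Keeping the connection layer linear means only a small number of parameters sit between the modality features and the LLM, which is consistent with the efficiency focus described above.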

Performance Evaluation

The paper reports competitive performance across a suite of standard benchmarks. On Visual Grounding, the model achieves state-of-the-art results on the RefCOCO, RefCOCO+, and RefCOCOg datasets. On other vision-language benchmarks, such as VQAv2 (Visual Question Answering) and image captioning on MSCOCO, it performs strongly, rivaling and often outperforming existing approaches that rely on larger training datasets.
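Grounding results on RefCOCO-style benchmarks are conventionally reported as accuracy: a prediction counts as correct when its box overlaps the ground-truth box with an intersection-over-union (IoU) of at least 0.5. The sketch below implements that generic metric; the (x1, y1, x2, y2) box format is an assumption, and this is not the paper's own evaluation code.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def grounding_accuracy(pred_boxes, gt_boxes, threshold=0.5):
    """Fraction of referring expressions whose predicted box has IoU >= threshold."""
    hits = sum(iou(p, g) >= threshold for p, g in zip(pred_boxes, gt_boxes))
    return hits / len(gt_boxes)


if __name__ == "__main__":
    preds = [(0, 0, 2, 2), (0, 0, 1, 1)]
    gts = [(0, 0, 2, 2), (5, 5, 6, 6)]
    print(grounding_accuracy(preds, gts))  # 0.5: first box matches, second misses
```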

Innovative Contributions

Among the paper's novel contributions is multimodal curriculum learning, a systematic way to incorporate multiple modalities into training efficiently. Additional modalities are introduced incrementally, so the model never has to be trained on all of the data at once, which limits the demand on computational resources. According to the reported results, this approach not only reduces computational cost but can also improve generalization to new or less familiar modalities.
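The staged schedule can be sketched as follows. This is a minimal illustration under stated assumptions: the stage order in `STAGES` is hypothetical, each stage is assumed to keep the data of earlier stages (a cumulative mixture), and each stage is assumed to start from the weights produced by the previous one. `train_stage` is a placeholder for whatever training routine is used.

```python
from typing import Callable, Dict, List

# Hypothetical curriculum: stage k adds these modalities to those of earlier stages.
STAGES: List[List[str]] = [["image", "text"], ["video"], ["audio"]]


def active_modalities(stage: int, stages: List[List[str]] = STAGES) -> List[str]:
    """All modalities introduced up to and including `stage` (cumulative)."""
    return [m for s in stages[: stage + 1] for m in s]


def stage_mixture(stage: int, datasets: Dict[str, list], stages=STAGES) -> list:
    """Training examples whose modality is active at `stage`."""
    allowed = set(active_modalities(stage, stages))
    return [ex for mod, examples in datasets.items() if mod in allowed for ex in examples]


def run_curriculum(state, datasets: Dict[str, list], train_stage: Callable, stages=STAGES):
    """Train stage by stage; each stage starts from the previous stage's state."""
    for k in range(len(stages)):
        state = train_stage(state, stage_mixture(k, datasets, stages))
    return state


if __name__ == "__main__":
    data = {"image": ["i1", "i2"], "text": ["t1"], "video": ["v1", "v2"], "audio": ["a1"]}
    # Dummy "training" that just records how many examples each stage saw.
    sizes = run_curriculum([], data, lambda state, batch: state + [len(batch)])
    print(sizes)  # [3, 5, 6]
```

Early stages see only a fraction of the data, which is where the computational savings come from; whether earlier modalities are retained in later stages is a design choice of this sketch.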

The paper also explores downstream task adaptation through weight interpolation, merging the weights of models fine-tuned on different tasks so that a single model combines their expertise. This highlights the model's versatility and its capacity for task transfer, an important property for building adaptable AI systems.
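Weight interpolation between two fine-tuned models can be written as theta = (1 - lam) * theta_A + lam * theta_B, applied parameter by parameter. The sketch below assumes both models share the same architecture and were fine-tuned from the same pretrained weights, so that their parameters line up; parameters are represented as flat Python lists, and the model and parameter names are hypothetical.

```python
from typing import Dict, List

Params = Dict[str, List[float]]  # parameter name -> flattened values


def interpolate_weights(theta_a: Params, theta_b: Params, lam: float) -> Params:
    """Linear interpolation (1 - lam) * theta_a + lam * theta_b, per parameter.

    lam = 0 returns model A and lam = 1 returns model B. Both models are assumed
    to be fine-tuned from the same pretrained weights, so their parameters align.
    """
    if theta_a.keys() != theta_b.keys():
        raise ValueError("models must have identical parameter names")
    merged: Params = {}
    for name, a_vals in theta_a.items():
        b_vals = theta_b[name]
        if len(a_vals) != len(b_vals):
            raise ValueError(f"shape mismatch for {name!r}")
        merged[name] = [(1 - lam) * a + lam * b for a, b in zip(a_vals, b_vals)]
    return merged


if __name__ == "__main__":
    vqa_model = {"layer.w": [0.0, 2.0], "layer.b": [1.0]}      # hypothetical weights
    caption_model = {"layer.w": [2.0, 4.0], "layer.b": [3.0]}  # hypothetical weights
    print(interpolate_weights(vqa_model, caption_model, 0.5))
    # {'layer.w': [1.0, 3.0], 'layer.b': [2.0]}
```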

Implications and Future Directions

The implications of this research are both practical and theoretical. Practically, it informs the development of generalist agents capable of performing diverse multimodal tasks, an encouraging step toward more versatile AI applications. Theoretically, it draws attention to the trade-offs among model size, efficiency, and performance, and suggests that compact models can still execute tasks robustly.

Future research directions suggested by the paper include scaling up the model while extending the unification strategy to more complex data interactions and tasks. Reducing hallucinations and improving the handling of complex instructions also remain important challenges. Exploring further training techniques and curriculum strategies may strengthen generalization, especially to new or previously unseen modalities.

In sum, this paper advances the pursuit of a unified multimodal model, offering insights into efficient training, the integration of diverse data types, and adaptation to new tasks. It provides a useful reference for both academic research and real-world application development in AI systems.