Counterfactual Simulatability: Evaluating Explanations in LLMs

The paper "Do Models Explain Themselves? Counterfactual Simulatability of Natural Language Explanations" examines whether the explanations produced by LLMs such as GPT-3.5 and GPT-4 help humans build mental models that predict how the model will behave on counterfactual inputs, that is, on inputs related to but different from the one being explained. The question matters for AI explainability wherever human users rely on a model's stated reasoning to anticipate what it will do next.

Methodological Framework

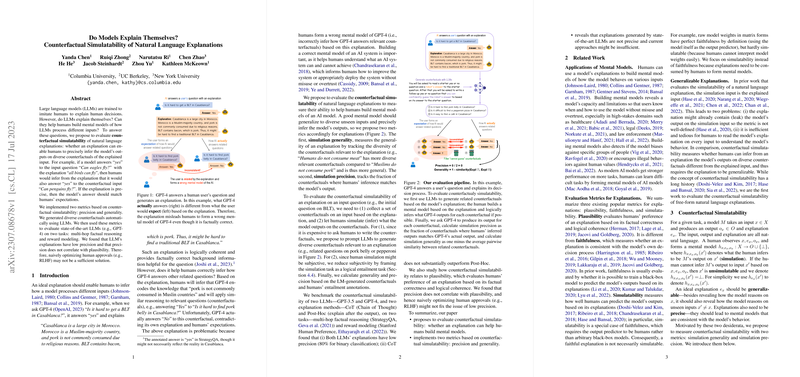

The authors introduce two metrics for the counterfactual simulatability of LLM-generated explanations: simulation generality and simulation precision. Simulation generality captures how diverse the counterfactuals are to which an explanation can be applied. Simulation precision measures how often the outputs that humans infer from the explanation agree with the model's actual outputs on those counterfactuals. The counterfactual inputs themselves are generated automatically by an LLM, conditioned on the model's original output and explanation.
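The two metrics can be illustrated with a minimal sketch. It assumes that precision is the fraction of simulatable counterfactuals on which the human's inferred answer matches the model's answer, and that generality is one minus the average pairwise similarity of those counterfactuals. The function names and the token-overlap (Jaccard) similarity are stand-ins chosen for illustration, not the paper's implementation.

```python
from itertools import combinations


def simulation_precision(simulated, actual):
    """Share of simulatable counterfactuals where the human guess matches the model.

    simulated[i] is the answer a human infers from the explanation, or None
    when the explanation says nothing usable about counterfactual i.
    Returns None when no counterfactual is simulatable.
    """
    pairs = [(s, a) for s, a in zip(simulated, actual) if s is not None]
    if not pairs:
        return None
    return sum(s == a for s, a in pairs) / len(pairs)


def jaccard(a, b):
    """Token-overlap similarity in [0, 1]; an assumed stand-in metric."""
    tokens_a, tokens_b = set(a.lower().split()), set(b.lower().split())
    union = tokens_a | tokens_b
    return len(tokens_a & tokens_b) / len(union) if union else 1.0


def simulation_generality(counterfactuals, similarity=jaccard):
    """One minus the mean pairwise similarity of the simulatable counterfactuals."""
    pairs = list(combinations(counterfactuals, 2))
    if not pairs:
        return 0.0  # fewer than two counterfactuals: no diversity to measure
    return 1.0 - sum(similarity(a, b) for a, b in pairs) / len(pairs)
```

Under these assumptions, an explanation that only lets a human reason about near-duplicates of the original question scores low on generality, even if every one of those guesses is correct.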

To test these metrics, the paper evaluates LLM-generated explanations on two tasks: multi-hop factual reasoning with StrategyQA and reward modeling with the Stanford Human Preferences (SHP) dataset. Two explanation methods are compared, Chain-of-Thought (CoT) and Post-Hoc. CoT generates the explanation before the model commits to an output, and is therefore hypothesized to reflect the model's decision process more closely; Post-Hoc generates the explanation after the output has been given.
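The difference between the two methods comes down to the order in which the explanation and the answer are requested. The sketch below makes that ordering concrete; the `build_prompt` helper and its template wording are hypothetical and are not the prompts used in the paper.

```python
def build_prompt(question, style):
    """Return a prompt asking for reasoning-then-answer (CoT) or answer-then-explanation.

    The template text is illustrative only.
    """
    if style == "cot":
        # Chain-of-Thought: the explanation is produced before the answer.
        response_format = "Reasoning: <step-by-step reasoning>\nAnswer: <yes or no>"
    elif style == "post_hoc":
        # Post-Hoc: the answer is fixed first, then justified.
        response_format = "Answer: <yes or no>\nExplanation: <why you gave that answer>"
    else:
        raise ValueError(f"unknown explanation style: {style!r}")
    return f"Question: {question}\nRespond in this format:\n{response_format}"
```

In both cases the evaluation is the same: the explanation text is shown to a human, who then tries to infer the model's answer on counterfactual questions.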

Key Findings

- Explanatory Precision: Explanations from both CoT and Post-Hoc have low simulation precision. The model's actual outputs on counterfactuals often differ from what humans infer from its explanations. Notably, CoT does not outperform Post-Hoc by a statistically significant margin, contrary to the expectation that generating the explanation before the answer would make it a more precise guide to the model's behavior.

- Generality versus Precision: The paper finds no correlation between simulation generality and simulation precision. An explanation that applies to a diverse set of counterfactuals is not thereby more likely to lead humans to the model's actual outputs on them.

- LLM Scaling and Precision: With GPT-4, explanations were observed to yield better alignment with human mental model predictions than those from GPT-3.5, indicating a potential improvement in counterfactual simulatability through scale.

- Dissonance with Human Preferences: Simulation precision does not correlate with plausibility, that is, with how convincing human evaluators judge an explanation to be. Consequently, optimizing LLM behavior for human approval (e.g., using Reinforcement Learning from Human Feedback, RLHF) might not increase the precision of its explanations.
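The correlation findings above (generality versus precision, and precision versus plausibility) rest on comparing two per-explanation scores. One way to run such a check is a rank correlation, sketched here in plain Python. Spearman's coefficient is an assumed choice for illustration; this summary does not state which statistic the authors used.

```python
def ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation; None if either input has zero variance."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return None
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))
```

Given one list of per-explanation precision scores and one of plausibility ratings, a coefficient near zero would be consistent with the finding that convincing explanations are not necessarily precise ones.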

Implications and Future Directions

The research has both technical and practical implications. Technically, it calls for LLM architectures and training paradigms that tie the stated reasoning more tightly to the output the model actually produces. Practically, organizations deploying AI in fields where explanations are required, such as healthcare and justice, should reconsider relying on approval-based training methods (like RLHF) for models involved in high-stakes decisions.

For further research, extending these metrics to generation tasks is of particular interest, since it would make them applicable to domains where model outputs are not limited to binary outcomes. Additionally, letting humans build mental models through iterative human-AI interaction could be a route to better counterfactual simulatability in systems deployed in dynamic, real-world environments.

In conclusion, the paper challenges existing paradigms in AI explainability. It argues against over-reliance on human approval as a training signal and urges deeper investigation into how explanations are formulated and how humans interpret them. Both are central to model interpretability and usability, and therefore to the ethical and effective integration of AI into various domains.